
Architecture

Stored Programs

The stored-program model of Von Neumann suggests a Turing-equivalent physical device that, using modern manufacturing and materials, is at once:

- Fast (usefully many transitions of a Turing machine can be emulated per unit time),

- Reliable (the probability of undetected and detected errors can be meaningfully bounded), and

- Reprogrammable at a purely symbolic, as opposed to physical, level

Digital, finite transformations will be performed by processing units connected to memories (stores) and I/O devices.

- By digital, we mean that irrational numbers cannot be faithfully reproduced in variables, nor analog signals in samples -- we can work directly (without approximation) only with countable sets (see the real computing of Blum et al for another perspective).

- By finite, we mean that a given store can contain only a finite set of strings having finite Shannon entropy -- essentially, finite integers. By combining the two, our domain becomes finite sets of strings of finite algorithmic complexity (or so I understand algorithmic complexity theory to work) -- the so-called computable numbers.

- Processing units expose simple functional building blocks (maps from finite sets of integers to finite sets of integers) and communication primitives through which connected devices might be manipulated. An instruction set architecture (ISA) defines the syntax and semantics of these finite languages.

- Memories provide finite storage areas parameterized any number of ways (cost, burst rate, sustained rate, latency, reliability, etc). Arbitrary finite binary strings can be placed into memories by the processor.

- I/O devices provide samples from the world external to this computing system.

Arbitrary finite strings in any non-empty alphabet are sufficient to encode any finite, discrete information, including programs expressed in the ISA.

In the sequential execution model, each processing unit contains a program counter. The processor fetches the next instruction from memory, as indexed by the program counter. The program counter is then incremented. The instruction's operands, if any, are fetched, and it is executed. Execution might result in changes to the architectural state (including the program counter) of the processor, to memory, and/or to I/O devices. These changes are committed, and the next instruction is fetched. Each instruction might correspond to many clock cycles. This model is dominant among general-purpose microprocessors; for other models, see dataflow architectures and transport-triggered architectures. Out-of-order processors combine stored, serialized programs with data flow-based triggering, yielding "restricted data flow architectures".
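The fetch, increment, execute cycle described above can be sketched as a toy interpreter. This is only an illustration: the four-opcode ISA (`LOAD`, `ADD`, `STORE`, `JNZ`, `HALT`), the single `acc` register, and the sample program are all invented here, and real processors overlap these steps rather than performing them one at a time.

```python
def run(program, memory):
    """Execute a toy program under the sequential model; returns final registers."""
    regs = {"acc": 0}   # architectural state besides the program counter
    pc = 0              # program counter
    while True:
        opcode, operand = program[pc]   # fetch, indexed by the program counter
        pc += 1                         # increment before executing
        if opcode == "LOAD":
            regs["acc"] = memory[operand]
        elif opcode == "ADD":
            regs["acc"] += memory[operand]
        elif opcode == "STORE":
            memory[operand] = regs["acc"]
        elif opcode == "JNZ":           # execution may overwrite the program counter
            if regs["acc"] != 0:
                pc = operand
        elif opcode == "HALT":
            return regs
        else:
            raise ValueError(f"unknown opcode {opcode!r}")


# Hypothetical program: add memory[3] into memory[2] once per count in memory[0].
program = [
    ("LOAD", 2), ("ADD", 3), ("STORE", 2),   # total += step
    ("LOAD", 0), ("ADD", 1), ("STORE", 0),   # counter += -1
    ("JNZ", 0),                              # loop while counter != 0
    ("HALT", None),
]
memory = [3, -1, 0, 5]   # counter, decrement, total, step
final_regs = run(program, memory)
print(memory)            # prints [0, -1, 15, 5]
```

Note that the program itself is just data (a list of tuples), which is the point of the stored-program model: it could equally well live in `memory` and be rewritten symbolically.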

Parallelism

Bit-level parallelism

Register-level parallelism

- See the SIMD page.

Instruction Level parallelism

Memory Level parallelism

Thread Level parallelism

Hardware

Memory Hierarchies

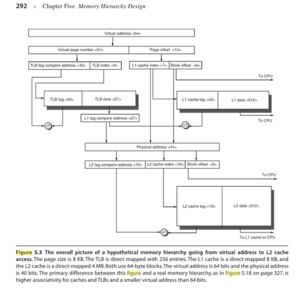

Processor clocking and even instruction set design are intimately related to the access times of the lowest-level cache of main memory.

- Stores: large, off-chip memories. System DRAM provides a volatile store (this is typically what's meant by "main memory"), while ferromagnetic and optical disks provide non-volatile, slower, larger stores (often called "secondary memory"). As stores get larger, access times usually increase; taken to an extreme, the Internet is just a highly unreliable, massively-distributed store exhibiting wildly varying access times: a memory for all mankind.

- Caches: Submemories. Performance-critical components of a store's abstraction -- like any abstraction's internals, their user oughtn't need know them to make use of the abstraction, but might require intimate knowledge to make full and efficient use! SRAM close to or on the chip serves as (often multiple-level) caches for main system DRAM. A section of DRAM is often used as a "page cache" for system disk (system DRAM as a whole, however, is *not* a cache of disk. Swap is a part of virtual memory implementation and not germane to discussion of cache). Caches take advantage of predictable access patterns (temporal clustering, spatial clustering, software and hardware prefetching) to provide faster access to a subset of the memory abstraction. In exchange, they add complexity, draw power, and often consume both large swaths of a system's transistor budget and prime real estate. In the absence of accurate prediction (very random patterns, woefully undersized cache, etc), the memory system is said to be thrashing, and performance can be substantially worsened relative to an uncached memory. Usually, however, caches lead to drastic performance improvements.

- In a computer architecture context, "cache" usually refers only to the (possibly-multilevel) caches of main memory.

- In an OS context, "cache" might refer to any store.

- Data units: Generally known as registers, this memory is directly available to execution units. It is capable of being updated every cycle, although sustaining such a supply of data might be impossible. Read and write ports directly connect the register file to combinational hardware and main memory. On some architectures, instructions can directly reference memory.

Memory hierarchies are highly discretized. Storage offered by different memories usually involves quantitative shifts measured in orders of magnitude, as does time required to access them -- nanoseconds for small on-die SRAMs, tens to hundreds of nanoseconds for uncached access to system DRAM, milliseconds for hard drive accesses, fractions of a second for network transfers (hey, it still beats the post office).

Caches

Given the tradeoff and potentialities involved in cache design, and the wide gap between access times of different stores, caches are often multitiered. Current processors often support three levels of hardware cache; almost all disks provide a few megabytes of DRAM as a cache well before, and for different purposes than, the operating system introduces a page cache. Among the levels of a multitiered cache, two levels can be:

- inclusive: the lower level is a strict subset of data available in the higher level

- exclusive: the caches share no data

- unrelated: data might be present in both caches

Exclusive caches minimize redundancy between cache levels, but force complexity in multiprocessor systems and even in uniprocessor systems with different line lengths across cache levels. Similarly, the main memory's cache is often split into an instruction cache and data cache, with their own ports (and possibly different implementations and sizes), yielding improved access times and fewer structural hazards (instructions can be fetched, while in another unit data are accessed, without structural hazards or expensive logic). A unified cache can make more complete use of the memory available, however, especially for very small programs, effectively trading the risk of conflicts for greater capacity.

Reads are the common case -- 1 instruction cache read (barring trace caches, etc) for every instruction issued, and loads usually substantially outweighing stores for data cache (or unified cache) access. They're also faster (assuming the data is in cache): the processor needn't specify the width of the load, and the data can be loaded while the tag is being checked -- if the tag doesn't match, no harm is done. Writes must wait for the tag to be verified. Write-combining can be performed in unflushed write buffers or dirty lines of a write-back cache, saving memory bandwidth. Writing policies (configured on the x86 via MTRRs or PATs) include:

- Write-through: the write propagates out throughout the store abstraction (ie, from L1 cache, over an exclusive L2 cache, to system DRAM). Simple to implement, as writes strictly require no buffers and loads resulting in evictions never force writebacks. Cache and backing store exhibit data coherence (observers never see a different value in memory than what's in cache), a useful property in the presence of multiple cores or DMA. In order to write at the full speed of the cache, however, writes must be buffered; for a workload which overwhelms the write buffer, or in its absence, the processor will see write stalls above and beyond the cache timings even for cached data.

- Write-back: the write is only applied to the cache. Memory is updated when the line is replaced. Minimizes latency for the attached execution unit -- potential write throughput is equivalent to that of the cache. Saves memory bandwidth and power, but requires a coherency protocol (with its own state requirements). Minimally, a dirty bit indicates that the line need be written back upon eviction. In the presence of multiple, independent caches at the same level of a store abstraction, full cache coherency protocols such as MESI are used. Write-back caches also tend to employ write buffers (so that reads might be prioritized; see below), also known as "victim buffers" by AMD (not to be confused with victim caches, small fast fully-associative stores into which all evicted lines go, not just dirty ones -- the goal of this latter is to minimize the impact of conflict misses, not eliminate blocking on writebacks).
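The bandwidth difference between the two policies can be seen in a small simulation. This is a sketch under simplifying assumptions, not a model of any real cache: `ToyCache` and `memory_writes_for` are hypothetical names, the cache is direct-mapped and write-allocating, each line holds a single value, and write buffers and coherency protocols are ignored.

```python
class ToyCache:
    """Direct-mapped, write-allocate cache that counts writes reaching memory."""

    def __init__(self, n_lines, write_back):
        self.n_lines = n_lines
        self.write_back = write_back
        self.lines = {}          # index -> (address, value, dirty)
        self.memory = {}         # backing store: address -> value
        self.memory_writes = 0

    def _store(self, address, value):
        self.memory[address] = value
        self.memory_writes += 1

    def write(self, address, value):
        index = address % self.n_lines
        victim = self.lines.get(index)
        if victim is not None and victim[0] != address and victim[2]:
            self._store(victim[0], victim[1])    # dirty line written back on eviction
        # Under write-back the line becomes dirty; under write-through it never is.
        self.lines[index] = (address, value, self.write_back)
        if not self.write_back:
            self._store(address, value)          # write-through: propagate immediately

    def flush(self):
        """Write back every dirty line, as a final eviction would."""
        for index, (address, value, dirty) in list(self.lines.items()):
            if dirty:
                self._store(address, value)
                self.lines[index] = (address, value, False)


def memory_writes_for(write_back):
    """Write the same address 100 times; report (memory writes, final value)."""
    cache = ToyCache(n_lines=4, write_back=write_back)
    for value in range(100):
        cache.write(0, value)
    cache.flush()
    return cache.memory_writes, cache.memory[0]


print(memory_writes_for(write_back=False))   # prints (100, 99)
print(memory_writes_for(write_back=True))    # prints (1, 99)
```

Both policies leave the same final value in memory; write-back simply combines the hundred writes into the one that happens when the dirty line leaves the cache.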

A cache hit occurs when data being referenced is valid in the cache; otherwise the reference is a cache miss. A bit is typically devoted to determining validity of a cacheline. The cache is initialized so that all lines are marked invalid. As lines are loaded, they become valid. In the absence of cache coherency protocols and context switches, lines once valid will remain valid (but might change address); other processors' writes might invalidate lines in a multicore system. Misses are categorized into four types:

- Compulsory miss, if the data had not yet been referenced. It has otherwise been evicted and is a...

- Coherence miss, if another processor invalidated this line

- Capacity miss, if the eviction (and thus miss) would have occurred despite full associativity.

- Conflict miss, otherwise. A fully-associative cache, by definition, never suffers conflict misses.
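The classification above can be made mechanical by running a trace through the real cache geometry alongside a fully-associative cache of the same capacity. The sketch below assumes a single processor (so coherence misses never arise), LRU replacement in both caches, and a trace of line numbers rather than byte addresses; `classify_misses` and `_touch` are names invented for this example.

```python
from collections import OrderedDict


def _touch(lru, line, limit):
    """Reference `line` in an LRU-ordered dict of at most `limit` entries; True on hit."""
    hit = line in lru
    if hit:
        lru.move_to_end(line)            # now the most recently used
    else:
        if len(lru) >= limit:
            lru.popitem(last=False)      # evict the least recently used
        lru[line] = True
    return hit


def classify_misses(trace, n_sets, ways):
    """Count hits and compulsory/capacity/conflict misses for a trace of line numbers."""
    seen = set()                                     # lines referenced at least once
    full = OrderedDict()                             # fully-associative, same capacity
    sets = [OrderedDict() for _ in range(n_sets)]    # the cache being studied
    counts = {"hit": 0, "compulsory": 0, "capacity": 0, "conflict": 0}
    for line in trace:
        full_hit = _touch(full, line, n_sets * ways)
        real_hit = _touch(sets[line % n_sets], line, ways)
        if real_hit:
            counts["hit"] += 1
        elif line not in seen:
            counts["compulsory"] += 1    # never referenced before
        elif not full_hit:
            counts["capacity"] += 1      # would miss despite full associativity
        else:
            counts["conflict"] += 1      # only the set mapping caused the miss
        seen.add(line)
    return counts


# Lines 0 and 4 collide in a 4-line direct-mapped cache, despite ample capacity.
print(classify_misses([0, 4, 0, 4], n_sets=4, ways=1))
# The same capacity, fully associative: the collisions disappear.
print(classify_misses([0, 4, 0, 4], n_sets=1, ways=4))
```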

If there are no suitable invalid lines for a cache miss, there will be a cache replacement. In the absence of direct mapping (ie, n-associativity where n>1), a non-trivial replacement policy decides which member of a set to evict. Page faults are managed by the operating system, due to the high miss latencies and importance of making a good eviction decision. These algorithms are widely varied, and beyond the scope of this article. Architectural policies for cache replacement are primarily measured by their preservation of temporal locality, and include:

- LRU: This can be and is implemented several ways (high-speed stack, use of lg2(n) bits to track only the one truly least recently used element ("True LRU"), the 2Q algorithm...I've got an idea that uses indexes into permutation tables). Most expensive, in terms of space and time. Maximizes use of temporal locality -- for m<n references which go unused, n-m reused references will remain in cache. Every access results in an update, and some implementations require multiple updates per access. A full recency ordering has n! possible states, and these factorially-growing state requirements generally preclude precise LRU for large n.

- Pseudo-LRU: Uses n-1 bits to weigh paths in a full binary tree of height lg2(n). Exhibits O(1) selection time, but requires an O(1) update on every access (like LRU). Tracks some patterns well, others poorly (FIXME find better analysis).

- FIFO: The oldest (as opposed to least-recently-used) line is evicted. This can be implemented in O(1) selection and update time via lg2(n) bits per set (it's simply a counter modulo n). Updates are only performed upon a replacement, as opposed to every access.

- Random: Since complexity is traded off against preservation of temporal locality, programs exhibiting little temporal locality (randomly-accessed databases) are best served by the simplest solution surjective onto members of a set. This can be done with a pseudorandom replacement policy. Such a cache usually allows programmer control of seeding, to support debugging and profiling.
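As an illustration of the pseudo-LRU entry above, here is a minimal sketch of the tree scheme for one n-way set, with n a power of two. The class name `TreePLRU`, the heap-style layout of the n-1 bits, and the convention that a 0 bit sends the victim search left are all choices made for this example rather than a description of any particular processor.

```python
class TreePLRU:
    """Tree pseudo-LRU for one n-way set, using n-1 bits (n a power of two)."""

    def __init__(self, ways):
        if ways < 2 or ways & (ways - 1):
            raise ValueError("ways must be a power of two, at least 2")
        self.ways = ways
        self.bits = [0] * (ways - 1)   # internal nodes in heap order; root is node 0

    def touch(self, way):
        """On every access, point each node on the path away from `way`."""
        node, lo, hi = 0, 0, self.ways
        while hi - lo > 1:
            mid = (lo + hi) // 2
            if way < mid:
                self.bits[node] = 1              # accessed left, so victimize right
                node, hi = 2 * node + 1, mid
            else:
                self.bits[node] = 0              # accessed right, so victimize left
                node, lo = 2 * node + 2, mid

    def victim(self):
        """Follow the bits from the root to the way that should be replaced."""
        node, lo, hi = 0, 0, self.ways
        while hi - lo > 1:
            mid = (lo + hi) // 2
            if self.bits[node]:
                node, lo = 2 * node + 2, mid
            else:
                node, hi = 2 * node + 1, mid
        return lo


plru = TreePLRU(4)
for way in (0, 1, 2, 3):
    plru.touch(way)
print(plru.victim())   # prints 0, agreeing with true LRU
plru.touch(0)
print(plru.victim())   # prints 2, whereas true LRU would pick way 1
```

The second result shows the approximation at work: after the access order 1, 2, 3, 0 the least recently used way is 1, but the tree only remembers which half of each subtree was touched last, so it selects way 2.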

Write misses are relatively rare; when they do occur, either a line is evicted and the standard write policy is invoked, or in a "non-write-allocating" policy the missing caches are untouched. This is rare for write-back caches of volatile memory, since data written therein is usually read. It makes more sense in a non-volatile memory's cache (H&P4 C-14 says this is also true for volatile write-through, but I find their reasoning to be flawed due to write buffers). Note that the processor stalls earlier in the pipeline due to read misses than write misses.

Memory performance is quantified in terms of average memory access time (AMAT), equal to hit penalty + miss rate * miss penalty (this being the penalty added by the miss, not end-to-end latency for a miss; this latter would be hit penalty + miss penalty) and expressed in terms of cycles or, less commonly, absolute time (calculating or indeed defining miss penalty for out-of-order, especially superscalar, processors is difficult, and done various ways). Thus, memories can be improved by (assuming other parameters are held constant):

- Reducing hit penalty: avoid address translation (use the virtual address -- VIVT, Virtual-Index/Virtual-Tag)

- Improving hit rate: increase number of lines, increase line length, increase associativity

- Reducing miss penalty: multilevel caches, prioritizing reads over writes

- Parallelizing: interleaved/banked cache
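The AMAT formula, and the way a second cache level reduces miss penalty, can be checked with a few lines of arithmetic. All cycle counts and miss rates below are made-up round numbers for illustration, not measurements of any real part; the L2 miss rate is taken to be local (misses per L2 access).

```python
def amat(hit_penalty, miss_rate, miss_penalty):
    """Average memory access time: hit penalty + miss rate * miss penalty."""
    return hit_penalty + miss_rate * miss_penalty


# Hypothetical parameters, in cycles.
L1_HIT, L1_MISS_RATE = 4, 0.05
L2_HIT, L2_MISS_RATE = 12, 0.20
DRAM = 200

# With a single cache level, every L1 miss pays the full trip to DRAM.
single_level = amat(L1_HIT, L1_MISS_RATE, DRAM)

# With two levels, the L1 miss penalty is itself the AMAT of the L2.
l1_miss_penalty = amat(L2_HIT, L2_MISS_RATE, DRAM)
two_level = amat(L1_HIT, L1_MISS_RATE, l1_miss_penalty)

print(f"single-level AMAT: {single_level:.1f} cycles")
print(f"two-level AMAT:    {two_level:.1f} cycles")
```

With these numbers the L2 cuts the L1 miss penalty from 200 cycles to 52, which is what "reducing miss penalty: multilevel caches" means in the list above.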

Avoiding address translation introduces aliasing: a single cacheline worth of backing store might be represented twice in a cache, since each process (usually) has its own virtual memory. It also forces page access settings to be copied into the cache from the TLB, and PID-tagging lest cache flushes be required upon context switches. Thus, lowest-level caches are often VIPT (Virtual-Index, Physical-Tag): translation is performed in parallel with indexing based solely upon the page offset (shared between physical and virtual addresses). Physical addresses are used beyond the lowest-level cache (PIPT, Physical-Index Physical-Tag). Adding capacity incurs transistor and power cost, and can slightly increase access penalty. Increasing associativity can increase access penalty even more, and also has hardware costs. Augmenting either can actually decrease hit rates for a given workload, due to the interactions between addresses and cache geometry. There are four rules of thumb here:

- Associativity beyond 8-way is useless, except for very small (a few K at most) caches

- For caches less than a hundred KB or so, miss rate for f KB under 2-way associativity ~= miss rate for 2f KB direct mapped

- Associativity, especially beyond 2-way, is a bad idea for large caches...

- Unless it's a second-level cache or higher, in which case 2-way is just about right.
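Returning to the VIPT arrangement described before these rules of thumb: indexing can rely solely on the page offset only if the set index and line offset bits together fit within it, which works out to each way of the cache being no larger than a page. The check below is a sketch of that arithmetic; the function name is invented here, and the 4 KiB default page size is an assumption that should be replaced with the target system's value.

```python
def vipt_index_fits_page_offset(cache_bytes, ways, page_bytes=4096):
    """True if set-index and line-offset bits all lie within the page offset.

    Index plus offset bits address sets * line_length = cache_bytes / ways
    bytes, so the condition reduces to one way being no larger than a page.
    """
    bytes_per_way = cache_bytes // ways
    return bytes_per_way <= page_bytes


KIB = 1024
print(vipt_index_fits_page_offset(32 * KIB, ways=8))    # prints True
print(vipt_index_fits_page_offset(32 * KIB, ways=4))    # prints False
print(vipt_index_fits_page_offset(64 * KIB, ways=16))   # prints True
```

Under this constraint, growing a lowest-level cache without larger pages means adding ways rather than sets, which is one way cache geometry and the address translation scheme end up coupled.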

Increasing cacheline length is the only way to reduce compulsory misses (at the expense of increased latency loading misses, although this can be addressed via "early-restart" or "critical-word-first" load schemes), but with insufficient spatial locality longer cachelines lead to increased conflict or even capacity misses and waste memory bandwidth. High-bandwidth, high-latency memories are thus well-suited to large cachelines, as are stream-oriented tasks.

Virtual Memory

See Also

- Intel's Atom uses an eight-transistor (and thus lower-voltage) SRAM technology?

- Agner Fog's Intel and AMD microarchitecture references