
The Power, pt 1: Difference between revisions

| (118 intermediate revisions by the same user not shown) | |||

| Line 1: | Line 1: | ||

<b>nb: if this reads like a script, that's because it was originally going to be a video in my [[DANKTECH]] series. maybe it still will, one day.</b> | |||

hey there, my hax0rs and hax0rettes! it's been a good long time since we last | hey there, my hax0rs and hax0rettes! it's been a good long time since we last | ||

| Line 13: | Line 13: | ||

with a critical ear and watch with a scrupulous eye, but, you know, you really | with a critical ear and watch with a scrupulous eye, but, you know, you really | ||

ought be doing that all the time. if i have a general theme tonight, i suppose | ought be doing that all the time. if i have a general theme tonight, i suppose | ||

that theme is: <b>power</b>. | that theme is: <b>power</b>. in the world of huey lewis and the news, love can be measured in watts, the SI unit of power. love applied over time would furthermore be work, and measured in joules. but i'm an engineer, so this evening we'll be quantifying the power of love, but mainly the love electrons feel towards cations. | ||

in the world of huey lewis and the news, love can be measured in watts, the SI unit of | |||

power. love applied over time would furthermore be work, and measured in joules. but | |||

i'm an engineer, so this evening we'll be quantifying the power of love, but mainly | |||

the love electrons feel towards cations. | |||

| Line 114: | Line 107: | ||

[[Noctua]] release their NH-U12P, and one year later the legendary NH-D14. I | [[Noctua]] release their NH-U12P, and one year later the legendary NH-D14. I | ||

ordered one of the latter for my 2011 Sandy Bridge i7 2600K build, and it was | ordered one of the latter for my 2011 Sandy Bridge i7 2600K build, and it was | ||

like a piece of alien technology. I'd never seen anything remotely like it. | like a piece of alien technology. I'd never seen anything remotely like it. and now the kids are delidding their processors and boofing "liquid metal" and communicating exclusively through eyerolls and dick pics. you can't let the little fuckers generation gap you. | ||

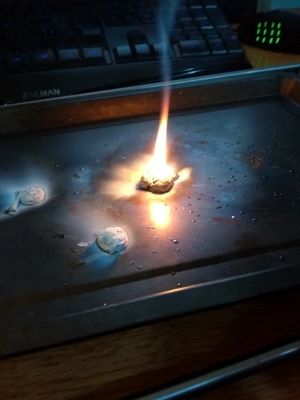

[[File:cpufire.png|right|thumb|we're gonna need a | [[File:cpufire.png|right|thumb|we're gonna need a cooler chip]] | ||

Anyway back to the ATX motherboard connector. The Pentium IV needed more | Anyway back to the ATX motherboard connector. The Pentium IV needed more | ||

juice than that available, and it wanted it in the 12 volt form. By this | juice than that available, and it wanted it in the 12 volt form. By this | ||

| Line 123: | Line 116: | ||

voltages, all of them less than 3.3V, the lowest voltage available from the | voltages, all of them less than 3.3V, the lowest voltage available from the | ||

PSU (not only do smaller processes allow less voltage, they mandate less | PSU (not only do smaller processes allow less voltage, they mandate less | ||

voltage, | voltage, lest they die. Put 2V into your modern processor, and you'll kill | ||

it immediately). So first motherboards added linear voltage regulators, | it immediately). So first motherboards added linear voltage regulators, | ||

but those dissipated the volt-amperage product difference as heat. This | but those dissipated the volt-amperage product difference as heat. This | ||

| Line 152: | Line 145: | ||

So once you have that, you want to work on the highest voltage possible, | So once you have that, you want to work on the highest voltage possible, | ||

since more current requires a thicker wire and implies more loss to | since more current requires a thicker wire and implies more loss to | ||

Joule heating. | Joule heating: <b>P = I²R</b>. | ||
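To put rough numbers on why higher voltage wins, here is a minimal Python sketch of the P = I²R loss for the same delivered load on each PSU rail. The 60W load and the 0.01 ohm wire resistance are made-up illustrative values, not figures from any ATX spec, and `wire_loss_watts` is a hypothetical helper name.

```python
def wire_loss_watts(load_watts, supply_volts, wire_ohms):
    """Joule heating in the wire, P = I^2 * R, for a load drawing load_watts.

    Simplification: ignores the small voltage sag the loss itself causes.
    """
    current_amps = load_watts / supply_volts  # I = P / V
    return current_amps ** 2 * wire_ohms


LOAD_WATTS = 60.0   # assumed CPU draw, for illustration only
WIRE_OHMS = 0.01    # assumed wire + connector resistance

for volts in (3.3, 5.0, 12.0):
    loss = wire_loss_watts(LOAD_WATTS, volts, WIRE_OHMS)
    print(f"{volts:>4}V rail: {LOAD_WATTS / volts:5.2f}A, {loss:.2f}W lost in the wire")
```

Since the loss scales with the square of the current, moving the same load from 5V to 12V cuts the wire loss by a factor of (12/5)², a bit under 6x.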

So the highest voltage we can get from the PSU is 12V, so that's what the CPU | So the highest voltage we can get from the PSU is 12V, so that's what the CPU | ||

wants. And thus ATX12V was born | wants. And thus in February 2000 ATX12V was born, adding a 4-pin Molex Mini-Fit | ||

Jr 39-01-2040 carrying an extra 3.3, 5, and 12V pin (plus an extra ground). Now | Jr 39-01-2040 carrying an extra 3.3, 5, and 12V pin (plus an extra ground). Now | ||

we already had 3x 3.3V pins and 4x 5V pins, so our capacities there rose 33% | we already had 3x 3.3V pins and 4x 5V pins, so our capacities there rose 33% | ||

| Line 175: | Line 166: | ||

v2.53, last updated in June 2020. | v2.53, last updated in June 2020. | ||

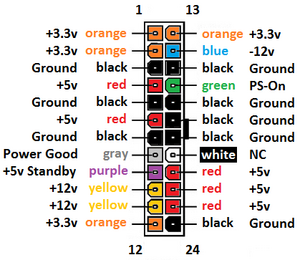

[[File:Atx12v.png|left|thumb|ATX12V 24-pin]] | |||

so anyway, back to testing your power supply. if you don't have a little ATX power | so anyway, back to testing your power supply. if you don't have a little ATX power | ||

tester, you can of course just get a copy of the ATX12V pinout (if you're using | tester, you can of course just get a copy of the ATX12V pinout (if you're using | ||

| Line 234: | Line 226: | ||

well after CMOS. | well after CMOS. | ||

[[File:Nema.png|right|thumb|some standard NEMA receptacles]] | |||

alright, so that was a bit of a digression, but back to my story! so i've got | alright, so that was a bit of a digression, but back to my story! so i've got | ||

my PSU tester out, and there's no love, no flow whatsoever. the electrons are | my PSU tester out, and there's no love, no flow whatsoever. the electrons are | ||

| Line 263: | Line 256: | ||

this ought be right back at 120, and it ought not be less than hot-to-neutral. | this ought be right back at 120, and it ought not be less than hot-to-neutral. | ||

[[File:Newfork.png| | [[File:Newfork.png|left|thumb|soon as it gets dark, we're gonna have us a time]] | ||

* <b>if neutral-to-ground is large and hot-to-ground is small</b>, hot and neutral have been reversed. many loads, including most electric ones, aren't sensitive to AC polarity, but some appliances are. fix this if appropriate. | * <b>if neutral-to-ground is large and hot-to-ground is small</b>, hot and neutral have been reversed. many loads, including most electric ones, aren't sensitive to AC polarity, but some appliances are. fix this if appropriate. | ||

* <b>if hot-to-neutral is greater than hot-to-ground</b>, neutral and ground have been reversed. note that you generally have to have some load to see this, and the more load, the greater the difference. fix this, as a dirty ground can fuck you in a great many ways. | * <b>if hot-to-neutral is greater than hot-to-ground</b>, neutral and ground have been reversed. note that you generally have to have some load to see this, and the more load, the greater the difference. fix this, as a dirty ground can fuck you in a great many ways. | ||
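the two checks above can be sketched as a tiny Python function, if you like your multimeter readings machine-parsed. the thresholds for "large" and "small" are assumptions picked for illustration (half of nominal, and 5V), `diagnose_outlet` is a hypothetical name, and this only covers the two bullets shown here, not every way an outlet can be miswired.

```python
NOMINAL_VOLTS = 120.0  # North American nominal, per the text


def diagnose_outlet(hot_to_neutral, hot_to_ground, neutral_to_ground,
                    small_volts=5.0):
    """Classify three multimeter readings (volts AC) taken at one receptacle.

    small_volts and the 'large' cutoff are illustrative assumptions.
    """
    large_volts = NOMINAL_VOLTS / 2
    # neutral-to-ground large, hot-to-ground small: hot and neutral swapped
    if neutral_to_ground > large_volts and hot_to_ground < small_volts:
        return "hot and neutral reversed"
    # hot-to-neutral above hot-to-ground (only visible under load)
    if hot_to_neutral > hot_to_ground:
        return "neutral and ground reversed"
    return "no fault detected by these two checks"


print(diagnose_outlet(120.0, 1.0, 119.0))
print(diagnose_outlet(120.0, 118.0, 2.0))
print(diagnose_outlet(118.0, 120.0, 2.0))
```

the swapped hot/neutral case is tested first, because it would otherwise also trip the second comparison.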

| Line 330: | Line 323: | ||

==An origin story== | ==An origin story== | ||

so i like to get to the bottom of questions. power is fundamentally related to | so i like to get to the bottom of questions. power is fundamentally related to | ||

energy—in fact, it's just the amount of energy delivered in some unit time. | |||

power is watts, and watts are just joules per second, and joules are work, | power is watts, and watts are just joules per second, and joules are work, | ||

joules are energy. a joule's a Newton-meter, a joule's a Pascal times a cubic meter, | joules are energy. a joule's a Newton-meter, a joule's a Pascal times a cubic meter, | ||

| Line 351: | Line 344: | ||

baryogenesis. we think we pretty much know what's up from that point. | baryogenesis. we think we pretty much know what's up from that point. | ||

[[File:Bindingcurve.gif|600px|center|thumb|the curve of binding energy. to the left of iron/nickel, fusion is generally exothermic, with He, C, and O being particularly favorable. to the right, fission is generally exothermic, with ²³³U, ²³⁵U, and ²³⁹Pu being particularly dank candidates (but don't count out ²³⁷Np!).<br/>do you see a pattern in the fissile isotopes?]] | |||

now if you're unfamiliar with the concept of baryogenesis, it's one of the primary mysteries in modern physics. by one mechanism or another, you have a bunch of matter, electroweak symmetry breaks, and you have a hot pho of massive fermions. thing is, all known mechanisms would produce equal numbers of matter and antimatter. either they all annihilate, or you end up with patches of both. but by all indications, the universe does contain matter, so they didn't all annihilate. and we would be able to detect the boundaries where antimatter and matter were meeting in the cosmos. why the imbalance leading to a universe that appears to be free of antimatter? worth thinking about. | |||

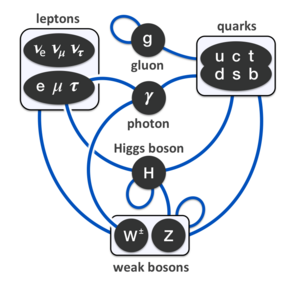

it'll behoove us to do a quick recapitulation of fundamental terminology. the money split is between bosons and fermions. bosons have integer quantum spin, fermions half-integer. another way of saying this is that a boson's total wave function is symmetric. another way of saying this is that in low-temperature, high-density regimes, bosons display Bose-Einstein statistics, and fermions Fermi-Dirac statistics (hence the names). once things get hot or sparse, quantum effects cease to dominate, and you can enjoy Maxwell-Boltzmann statistics for everyone. what really counts from our perspective is that bosons don't honor Pauli's Exclusion Principle. they can smush up into the same quantum states. not so for fermions. note that if you are a bound state of an even number of fermions, you're a boson. when we speak of bosons we usually mean the gauge bosons and the Higgs. gauge bosons are force carriers, in that we can model particle interactions as exchanges of virtual gauge bosons. the Higgs is spin 0, a scalar boson. all known gauge bosons are spin 1, vector bosons. the theorized graviton would be a spin 2 tensor boson. the composite bosons are responsible for the Bose-Einstein condensates you've probably heard of, and superfluidity and other low-temperature parlor tricks, but not particularly interesting beyond that. | |||

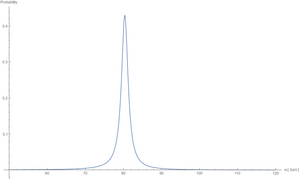

[[File:wboson.png|right|thumb|mass distribution of the charged W bosons, a [https://en.wikipedia.org/wiki/Relativistic_Breit%E2%80%93Wigner_distribution Breit-Wigner] centered around 80GeV.]] | |||

the photon, Z, and Higgs are their own antiparticles, while the W⁺ and W⁻ are each other's. photons and gravitons are massless, and lack self-interaction, and it's exactly these properties that lead to the infinite reach of gravity and electromagnetism. the weak nuclear force works with two charged W bosons and a neutral Z boson, all three of them massive. the time the Heisenberg uncertainty relation allows for smearing out the bookkeeping of virtual particles is inversely proportional to the energy involved, and thus the weak interaction is effective only over short ranges, and it's slow even there. we might have to tolerate massive charged bosons popping into existence, but there's no need to let them live their unseemly off-shell lives on the couch. now you might be asking "but niiiiiiiiick, we know the top quark only decays via flavor-changing weak interactions, but its average lifetime is so shooooooooooooort niiiiiiick". well, the top quark approaches 175GeV. it doesn't <i>need</i> to borrow virtual bosons at the First Bank of Heisenberg. if a 172GeV top wants an 80GeV W boson, it whips and drives the little bastard. the next down the line is the bottom quark, at a mere 4GeV. not so much of a power bottom, as it turns out. | |||

gluons are mysterious, sublime. i don't pretend to understand their ways. honestly, i don't fuck with gluons at all. | |||

[[File:forcemap.png|left|thumb|no one likes neutrinos]] | |||

so, the fermions: leptons, quarks, and composites. here's the good stuff. well, not just yet, because first we have to deal with bullshit neutrinos and the even more thoroughly bullshit antineutrinos. actually, neutrinos might be their own antiparticles, who knows, they're worthless either way. if you've heard of the search for "neutrinoless double beta decay", a bunch of very unhappy japanese and italians are trying to figure this out, morlocks in salt mines and giant tanks of ultrapure water, counting scintillations and subtracting expected tritium decays and just generally living their worst lives. there are three distinct neutrinos, all so weak-willed and devoid of identity that they oscillate freely between one another. trillions transpierce you each second, but they might as well not. neutrinos have mass, but they might as well not. i loathe neutrinos. | |||

rounding out the leptons (lepton by the way from the Greek λεπτός for "thin") are three generations of electrons and their antiparticles. the electron, muon, and tau, and here we know they are not their own antiparticles, so we have the positron, antimuon, and antitau. all but the positron and electron are unstable, but the positron and electron are our first truly stable massive particles, though they can annihilate one another yielding photons. electrons are friends. we'll be getting very close momentarily. neutrinos mainly serve to conserve energy and lepton number in decays. a purely leptonic decay always results in a lepton of lesser mass, a neutrino corresponding to the decaying lepton, and an antineutrino corresponding to the decay product. this latter negates the new lepton number; the former carries away the ghostly memory of the original lepton. the tau can decay via hadrons, but the muon hasn't sufficient mass. | |||

our final pointlike, fundamental particles are of course the quarks, all of them fermions. quarks are the sluts of the standard model, interacting with all four forces, six flavors of hot mess. you don't get quarks by themselves at any reasonable state, but hadrons begin to boil into a quark-gluon soup around the Hagedorn temperature: 150MeV (1.7 terakelvin). hadrons (from the Greek ἁδρός for "stout") are bound collections of quarks: mesons (Greek μέσος for "middle") pair a quark with an antiquark having its anticolor, while baryons (Greek βαρύς for "heavy") combine three quarks of different color (color is the tripartite charge of the strong interaction). the unstable mesons are of little interest to us, and we'll not speak of them again outside of the pion-mediated residual nuclear force. baryons, on the other hand, are quite useful if you're a fan of atoms. the proton and neutron are the lightest and second-lightest, respectively, possible baryons, and thus the stablest. indeed, protons seem completely stable, supersymmetry be damned. so that's the end result of baryogenesis. | |||

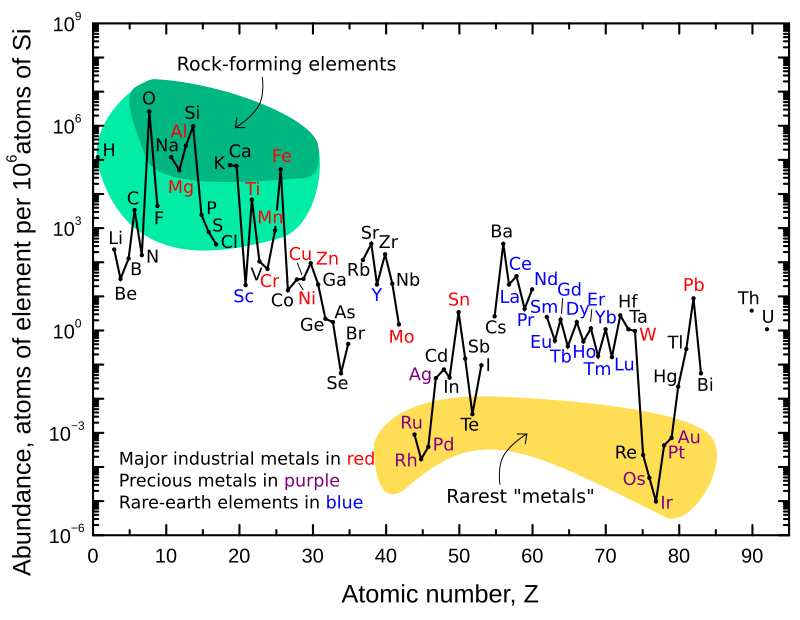

remember e= | [[File:abundances.png|center|upright|frame|atomic abundances in the earth's upper crust. note logarithmic y-axis.]] | ||

remember e=mc². for now, we'll be working in terms of energies and masses. | |||

nuclear rearrangements are energetic enough to manifest as a significant | nuclear rearrangements are energetic enough to manifest as a significant | ||

fractional mass change. here's the curve of binding energy. as it rises, | fractional mass change. here's the curve of binding energy. as it rises, | ||

things are bound more tightly, with less mass per nucleon. as it falls, | things are bound more tightly, with less mass per nucleon. as it falls, | ||

the binding is less tight, and thus nuclear potential energy is available. | the binding is less tight, and thus nuclear potential energy is available. | ||

it's these nuclear potentials which give rise to everything that follows | it's gravity and these nuclear potentials which give rise to everything that follows | ||

in the universe. there's no work that can be extracted from the thermal bath | in the universe. there's no work that can be extracted from the thermal bath | ||

of primeval leptons and bosons. | of primeval leptons and bosons (i'm leaving out relativistic jets from accretion disks until you can do something with astrophysical jets). | ||

a neutron is made up of one up and two down quarks, with a rest mass of | a neutron is made up of one up and two down quarks, with a rest mass of | ||

939.565MeV/ | 939.565MeV/c². the proton boasts two up and one down quark, for a rest mass of | ||

938.272MeV/ | 938.272MeV/c². the up is the lightest quark, and doesn't change without | ||

good reason (external energy). not so with the neutron, which as you probably | good reason (external energy). not so with the neutron, which as you probably | ||

know decays to a proton outside the nucleus, with a half-life of about 612s—the | know decays to a proton outside the nucleus, with a half-life of about 612s—the | ||

| Line 463: | Line 473: | ||

might thus expect, we see large peaks for both in our prevalence chart. | might thus expect, we see large peaks for both in our prevalence chart. | ||

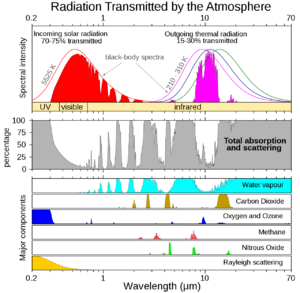

[[File:Wien.gif|left|thumb|The wavelength of peak emission is dependent on a blackbody's temperature.]] | |||

the solar temperature is generally higher than that of its planetary disk. | the solar temperature is generally higher than that of its planetary disk. | ||

by the Wien law, we know the energy per gamma is substantially higher; | by the Wien law, we know the energy per gamma is substantially higher; | ||

| Line 486: | Line 497: | ||

heavy farts for methane, methane we'll burn to power the grills in which we'll | heavy farts for methane, methane we'll burn to power the grills in which we'll | ||

cook them, frenetic dancing, magnifying glasses with which to incinerate ants, | cook them, frenetic dancing, magnifying glasses with which to incinerate ants, | ||

yes even the power of love | yes even the power of love (love being a phenomenological measure arising from the neurological statistics of <i>homo sapiens</i>)—originates in these few nuclear, gravitational, | ||

and frictional sources. | and frictional sources. | ||

| Line 495: | Line 506: | ||

110V, and it would be expensive to rip all our electric infrastructure out. | 110V, and it would be expensive to rip all our electric infrastructure out. | ||

europe had the gift of world war ii to handle that for them, and they came | europe had the gift of world war ii to handle that for them, and they came | ||

back at the more | back at the more efficient—but less safe—240V). need power for our rgb | ||

fans and rgb keyboards and rgb dinner guests etc. but i don't see anything going | fans and rgb keyboards and rgb dinner guests etc. but i don't see anything going | ||

on in my | on in my CPU—what's it doing with 280W? | ||

[ pricecan.mkv ] | [ pricecan.mkv ] | ||

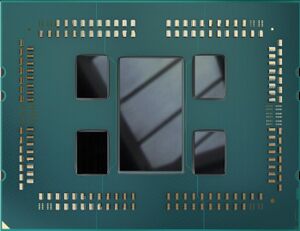

you're surely aware that the cpu is built up of transistors. my amd 3970x has | you're surely aware that the cpu is built up of transistors. my amd 3970x has | ||

15.2 gigatransistors on TSMC's 7nm process. nvidia's a100 has 54 billion. | 15.2 gigatransistors on TSMC's 7nm process and another eight on 12nm. nvidia's a100 has 54 billion. | ||

graphcore's colossus MK2 boasts 59.6 billion. samsung built a 1TB eUFS VNAND | graphcore's colossus MK2 boasts 59.6 billion. samsung built a 1TB eUFS VNAND | ||

flash with 2 teratransistors. but the reigning dance hall champion, at least | flash with 2 teratransistors. but the reigning dance hall champion, at least | ||

| Line 510: | Line 521: | ||

system can be, but we are pretty sure that we know the minimal cost of a unit of | system can be, but we are pretty sure that we know the minimal cost of a unit of | ||

irreversible computation (if you've never heard of reversible computation, | irreversible computation (if you've never heard of reversible computation, | ||

go read Feynman's Lectures on Computing, right now, but it's basically computing | go read Feynman's <i>Lectures on Computing</i>, right now, but it's basically computing | ||

using exclusively bijective functions). you've got Boltzmann's entropy formula | using exclusively bijective functions). you've got Boltzmann's entropy formula | ||

| Line 520: | Line 531: | ||

[ E = k_B T ln2 ] | [ E = k_B T ln2 ] | ||

and that's Landauer's principle, and you really ought go read john | and that's Landauer's principle, and you really ought go read john archibald | ||

wheeler's "it from bit", which is fantastic. | wheeler's <i>"it from bit"</i>, which is fantastic. | ||
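if you want a number for Landauer's bound, here's a minimal Python sketch. the 30C operating temperature is just an example point, and `landauer_limit_joules` is a made-up name for illustration.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact in the 2019 SI)


def landauer_limit_joules(temp_kelvin):
    """Minimum energy to erase one bit at temp_kelvin: E = k_B * T * ln 2."""
    return K_B * temp_kelvin * math.log(2)


temp_kelvin = 30.0 + 273.15  # assumed example temperature of 30C
energy = landauer_limit_joules(temp_kelvin)
print(f"{energy:.3e} J per erased bit at {temp_kelvin}K")
```

that works out to roughly 2.9 zeptojoules per erased bit, and it scales linearly with temperature.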

k_B is the boltzmann constant 1.38 * 10^-23 J/K. so at 30C, we've got 303.15K, | k_B is the boltzmann constant 1.38 * 10^-23 J/K. so at 30C, we've got 303.15K, | ||

| Line 607: | Line 618: | ||

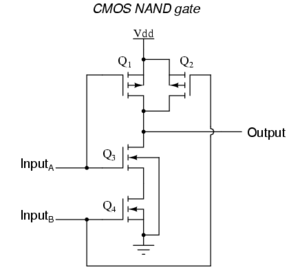

in transistors, it's probably worth doing so. let's build a 2-input NAND gate, | in transistors, it's probably worth doing so. let's build a 2-input NAND gate, | ||

which is of course along with NOR a universal boolean gate. | which is of course along with NOR a universal boolean gate. | ||

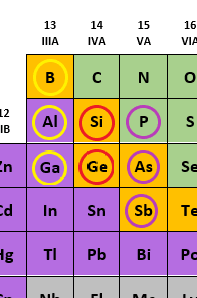

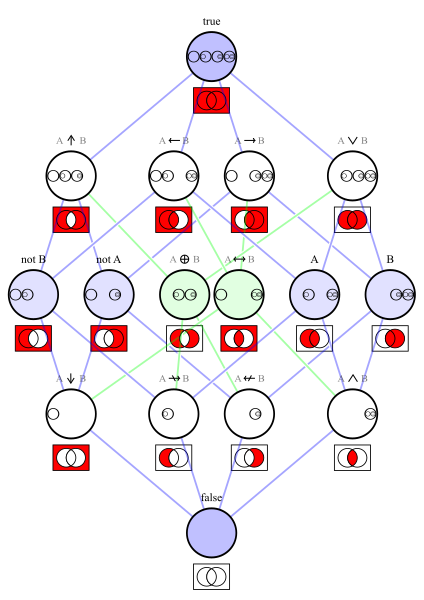

actually, it occurs to me that some of you might not be aware of the | actually, it occurs to me that some of you might not be aware of the | ||

| Line 619: | Line 628: | ||

functions, 256 ternary functions, etc., 2^2^n for n arguments. | functions, 256 ternary functions, etc., 2^2^n for n arguments. | ||
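the 2^2^n count is easy to check by brute force: a function of n bits is just a choice of output bit for each of the 2^n input rows. a minimal Python sketch (the helper name `boolean_functions` is made up for illustration):

```python
from itertools import product


def boolean_functions(n):
    """Enumerate every n-argument Boolean function as a truth table dict."""
    rows = list(product((0, 1), repeat=n))  # the 2^n possible inputs
    # one function per way of assigning an output bit to each row
    return [dict(zip(rows, outputs))
            for outputs in product((0, 1), repeat=len(rows))]


for n in (1, 2, 3):
    print(n, "arguments:", len(boolean_functions(n)), "functions")
```

don't try this for n much past 4: the list has 2^2^n entries, which gets out of hand immediately.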

[[File:Hassebinary.png|center|frame|Hasse diagram of binary Boolean functions]] | |||

unary functions: pass, negate, const 0, const 1 | unary functions: pass, negate, const 0, const 1 | ||

| Line 633: | Line 642: | ||

negate in NAND by pairing the input with 1. 0 AND 1 is 0; 1 AND 1 is 1. | negate in NAND by pairing the input with 1. 0 AND 1 is 0; 1 AND 1 is 1. | ||

const 1 is A NAND (NOT A). const 0 is const 1 with a NOT. good times. | const 1 is A NAND (NOT A). const 0 is const 1 with a NOT. good times. | ||

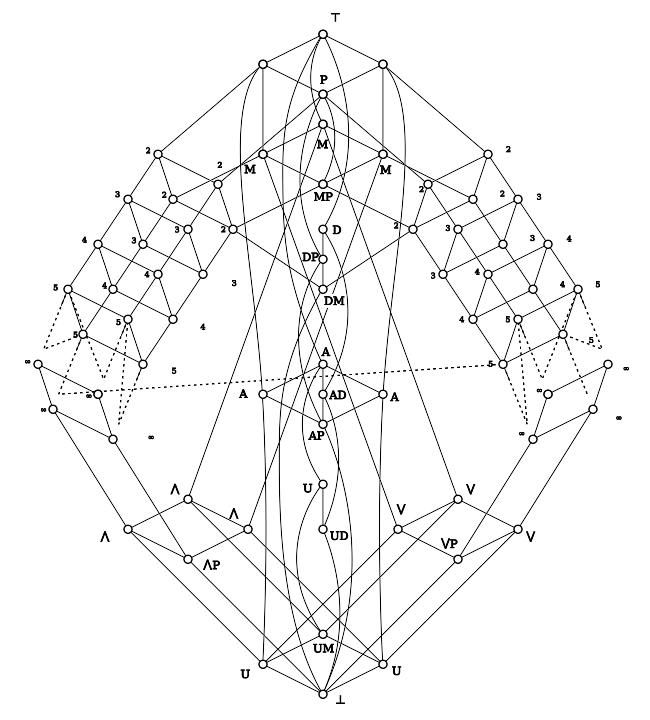

[[File:Postlattice.svg|center|frame|Post's lattice topologically orders all clones on the set {0,1}]] | |||

using similar strategies, we can build up whatever, and we can prove | using similar strategies, we can build up whatever, and we can prove | ||

this using connectives and clones, as demonstrated in Post's lattice. | this using connectives and clones, as demonstrated in Post's lattice. | ||
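short of a proof via Post's lattice, you can at least convince yourself by construction. here's a minimal Python sketch that builds NOT, AND, OR, and XOR out of nothing but a NAND (plus a constant 1 for the negation trick), and checks them against Python's own bit operators. the function names are made up for illustration.

```python
def nand(a, b):
    """2-input NAND on bits 0/1."""
    return 1 - (a & b)


def not_(a):
    return nand(a, 1)                # pair the input with 1


def and_(a, b):
    return not_(nand(a, b))          # NAND followed by NOT


def or_(a, b):
    return nand(not_(a), not_(b))    # De Morgan


def xor_(a, b):
    shared = nand(a, b)              # classic four-NAND XOR
    return nand(nand(a, shared), nand(b, shared))


for a in (0, 1):
    for b in (0, 1):
        assert and_(a, b) == (a & b)
        assert or_(a, b) == (a | b)
        assert xor_(a, b) == (a ^ b)
        print(a, b, "->", and_(a, b), or_(a, b), xor_(a, b))
```

the same game works starting from NOR instead.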

[[File:Cmosnand.png|right|thumb|NAND is a minimal CMOS gate]] | |||

so we can build this with two MOSFETs per input. if both A and B are low, | so we can build this with two MOSFETs per input. if both A and B are low, | ||

both pmos are on, and both nmos are off. the output gets V_DD due to the | both pmos are on, and both nmos are off. the output gets V_DD due to the | ||

| Line 732: | Line 742: | ||

polarity. when you plug a conductor in, a path is made, and now electrons can | polarity. when you plug a conductor in, a path is made, and now electrons can | ||

shuffle along. nuclei don't move, but the valence electrons do, each | shuffle along. nuclei don't move, but the valence electrons do, each | ||

representing one fundamental charge. an amp is about 6. | representing one fundamental charge. an amp is about 6.24x10¹⁸ elementary charges per second. | ||
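that figure falls straight out of the definitions: an ampere is one coulomb per second, and the elementary charge is fixed by the SI. a minimal Python sketch (the helper name is made up for illustration):

```python
ELEMENTARY_CHARGE_C = 1.602176634e-19  # coulombs, exact in the 2019 SI


def charges_per_second(amps):
    """Number of elementary charges passing a point each second at `amps`."""
    return amps / ELEMENTARY_CHARGE_C


print(f"{charges_per_second(1.0):.3e} charges per second at 1A")
```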

to conduct more amps, you need a bigger conductor; the number of charges that | to conduct more amps, you need a bigger conductor; the number of charges that | ||

can move is directly proportional to the cross-section of the wire, and thus | can move is directly proportional to the cross-section of the wire, and thus | ||

| Line 832: | Line 842: | ||

itself. the charge is propagated along as a change to the electric field, | itself. the charge is propagated along as a change to the electric field, | ||

exploding forward far more quickly than the electrons themselves (indeed, faster | exploding forward far more quickly than the electrons themselves (indeed, faster | ||

than any massive particle will move | than any massive particle will or can move, as we know | ||

from special relativity). a final speed we want to look at is thus the signal | from special relativity). a final speed we want to look at is thus the signal | ||

propagation velocity, the speed with which this change to the field ripples out. | propagation velocity, the speed with which this change to the field ripples out. | ||

| Line 858: | Line 868: | ||

voltage matches the provided voltage, and that your wires are safe for the amps. | voltage matches the provided voltage, and that your wires are safe for the amps. | ||

[[File:Pentonville1895.jpg|right|thumb|Grinding the wind.]] | |||

so now that we know what electricity is, can we not dispense with the electric | so now that we know what electricity is, can we not dispense with the electric | ||

company? let's say we wanted to power the computer using our biological energy. | company? let's say we wanted to power the computer using our biological energy. | ||

| Line 866: | Line 877: | ||

the wind." alternatively there were "crank machines" where you had to do a | the wind." alternatively there were "crank machines" where you had to do a | ||

certain number of rpms. fucking ghastly shit. and certainly going back through | certain number of rpms. fucking ghastly shit. and certainly going back through | ||

the Corrective Labor Colonies of the Soviet Union's GULAG, the Zwangsarbeiter | the Corrective Labor Colonies of the Soviet Union's GULAG, the <i>Zwangsarbeiter</i> | ||

of | of Organization Todt, the chattel slavery of my own Southern United States, the | ||

diamond mines of Sierra Leone and Africans enslaving Africans pretty much since | diamond mines of Sierra Leone and Africans enslaving Africans pretty much since | ||

there were Africans, to Hammurabi's Code and probably the first homo sapiens | there were Africans, to Hammurabi's Code and probably the first homo sapiens | ||

| Line 894: | Line 905: | ||

biology. | biology. | ||

==What is life?== | |||

<b>what is life?</b> well, physicist schrödinger tried to answer that in | <b>what is life?</b> well, physicist schrödinger tried to answer that in | ||

1944 (he was in ireland at the time), and he said it's highly ordered | 1944 (he was in ireland at the time), and he said it's highly ordered | ||

| Line 902: | Line 914: | ||

would raise the temperature, and that denatures proteins. most enzymatic | would raise the temperature, and that denatures proteins. most enzymatic | ||

proteins base their tertiary structure on hydrogen and van der waals bonds, | proteins base their tertiary structure on hydrogen and van der waals bonds, | ||

and we're talking weak hydrogen bonds here a few kilojoules per mole. | and we're talking weak hydrogen bonds here: a few kilojoules per mole. | ||

well, a square meter receives about 1.4 kilojoules every second in full | well, a square meter receives about 1.4 kilojoules every second in full | ||

sunlight. a nutritional calorie is about 4.2 kilojoules. and that's per mole. | sunlight. a nutritional calorie is about 4.2 kilojoules. and that's per mole. | ||

| Line 970: | Line 982: | ||

energy budget, but it's fascinating. as one last note, if you've ever | energy budget, but it's fascinating. as one last note, if you've ever | ||

wondered why carbs and proteins are considered 4kcals a gram, and fats are | wondered why carbs and proteins are considered 4kcals a gram, and fats are | ||

9 kcals, know that it's the Atwater system, it's | 9 kcals, know that it's the Atwater system, it's digestibility-scaled (proteins | ||

have more raw energy, but we piss away 20% of the protein we take in), and | have more raw energy, but we piss away 20% of the protein we take in), and | ||

fats lack oxygen, supplying only energy-rich CH bonds (oxygen being the | fats lack oxygen, supplying only energy-rich CH bonds (oxygen being the | ||

| Line 977: | Line 989: | ||

just rub it on your gums: you can't go wrong. | just rub it on your gums: you can't go wrong. | ||

[[File:yakpower.png|thumb|left|To hell with parliamentary procedure. We've got to wrangle up some cattles!]] | [[File:yakpower.png|thumb|left|To hell with parliamentary procedure. [https://comb.io/In8G9F We've got to wrangle up some cattles!]]] | ||

so if we had enough people or yaks or whatever devoted to it, sure, we could | so if we had enough people or yaks or whatever devoted to it, sure, we could | ||

power our computer. of course, we'd need to feed them, and simply burning | power our computer. of course, we'd need to feed them, and simply burning | ||

| Line 990: | Line 1,002: | ||

food resources, it's hardly a place for power-generating yaks. there are | food resources, it's hardly a place for power-generating yaks. there are | ||

furthermore several hundred other units here, and any attempt to bring in | furthermore several hundred other units here, and any attempt to bring in | ||

the corresponding yak volume would draw some very low-pH words from | the corresponding yak volume would draw some very low-pH words from management. | ||

there'd be a great deal of yak shit needing removal, and that work simply | there'd be a great deal of yak shit needing removal, and that work simply | ||

doesn't appeal to the young urban professionals that dominate my building. so | doesn't appeal to the young urban professionals that dominate my building. so | ||

| Line 1,002: | Line 1,014: | ||

==Move 'em out== | ==Move 'em out== | ||

[[File:Beltsander.png|thumb|oh, child with sanded-off face! oh, humanity!]] | |||

now we can transport any kind of energy. mechanical energy? sure. an | now we can transport any kind of energy. mechanical energy? sure. an | ||

internal combustion engine oxidizes fuels containing chemical energy to produce | internal combustion engine oxidizes fuels containing chemical energy to produce | ||

| Line 1,008: | Line 1,021: | ||

of longitudinal and transverse waves, and the kinetic energy of displaced | of longitudinal and transverse waves, and the kinetic energy of displaced | ||

particles. pneumatics, hydraulics, telodynamics, gears, these all exist. | particles. pneumatics, hydraulics, telodynamics, gears, these all exist. | ||

london moved 7000 | fin de siècle london moved 7000 horsepower over 180 miles of pipes carrying water at | ||

800PSI. rather than electric lines from Georgia Power, we could have Municipal | 800PSI. rather than electric lines from Georgia Power, we could have Municipal | ||

Belts and Yaks, and somewhere in Georgia's blighted south there'd be tens of | Belts and Yaks, and somewhere in Georgia's blighted south there'd be tens of | ||

| Line 1,033: | Line 1,046: | ||

by absorbing and reradiating them. | by absorbing and reradiating them. | ||

[ | [[File:Greenhouse.png|left|thumb|it's getting hot in here, so take off all your clothes, and study stratospheric aerosol geoengineering.]] | ||

indeed, were it not for existing greenhouse gases, the earth's surface | indeed, were it not for existing greenhouse gases, the earth's surface | ||

would probably run about -18C. but whatever we end up doing for primary | would probably run about -18C. but whatever we end up doing for primary | ||

energies, the result will still be electricity. the United States | energies, the result will still be electricity. the United States in 2021 generated | ||

a little over 4 trillion kWh at utility scale, an average of roughly 470 gigawatts with | a little over 4 trillion kWh at utility scale, an average of roughly 470 gigawatts with | ||

a maximum capacity of about 1.1 terawatts. a little less than 10% of this | a maximum capacity of about 1.1 terawatts. a little less than 10% of this | ||

| Line 1,054: | Line 1,066: | ||

industrial generation, and about 80% of it comes from turbogenerators, most | industrial generation, and about 80% of it comes from turbogenerators, most | ||

of them steam-powered. ahh, the turbogenerator. | of them steam-powered. ahh, the turbogenerator. | ||

large ones hit about 2GW of output, though this will be typically reported in | large ones hit about 2GW of output, though this will be typically reported in | ||

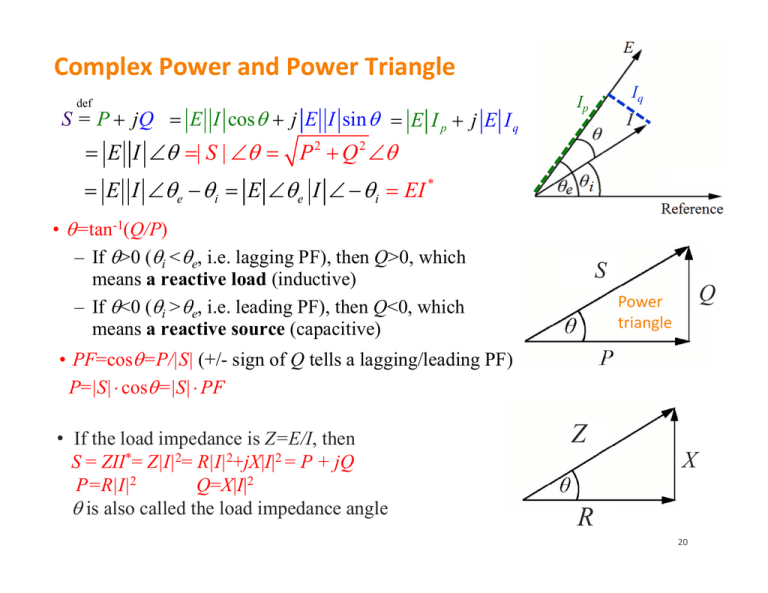

| Line 1,065: | Line 1,075: | ||

form what's called the "power triangle", with apparent power as the hypotenuse.
the angle formed is the impedance phase angle (impedance is a complex number,
and this gives us the polar form). r represents the ratio of the voltage
amplitude to the current amplitude, and theta is the phase difference between
voltage and current. in cartesian form, impedance is R + iX, where R is
resistance and X is reactance, the opposition presented to current by
inductance and capacitance. by the way, you'll see doubleEs and CmpEs write
the square root of negative one as 'j' instead of 'i', and this is why they
pronounce the word "jimaginary", but i was a cs/math major by god, and
Descartes called them "imaginaires" in 1637 (<i>"...quelquefois seulement imaginaires c'est-à-dire que l'on peut toujours en imaginer autant que j'ai dit en chaque équation, mais qu'il n'y a quelquefois aucune quantité qui corresponde à celle qu'on imagine."</i>), not "jimaginaires", and Euler called them i, and Ampère maybe ought have used a different symbol for
<i>intensité du courant</i> two-fucking-hundred years later. why wasn't it
"jintensité"? my wife and i used to fight about this. now she's my ex-wife.
blame the french.

[[File:Powertriangle.png|frame|center]] | |||
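to make the triangle concrete, here's a minimal python sketch. the 8Ω resistance, 6Ω inductive reactance, and 120V rms supply are made-up numbers for illustration, and the function name is hypothetical. it takes a cartesian impedance R + iX, pulls out the polar form (r, theta), and derives real, reactive, and apparent power. python, for what it's worth, sides with the doubleEs and spells the imaginary unit j.

```python
import cmath
import math

def power_triangle(v_rms, z):
    """Return (real W, reactive var, apparent VA, power factor) for load z."""
    i_rms = v_rms / abs(z)        # current magnitude follows from r = |Z|
    theta = cmath.phase(z)        # impedance phase angle
    s = v_rms * i_rms             # apparent power: the hypotenuse
    p = s * math.cos(theta)       # real power: the adjacent side
    q = s * math.sin(theta)       # reactive power: the opposite side
    return p, q, s, math.cos(theta)

# hypothetical load, not taken from the text above
z = complex(8, 6)                 # R = 8 ohms, X = 6 ohms (inductive)
r, theta = cmath.polar(z)         # polar form of the same impedance
p, q, s, pf = power_triangle(120.0, z)

print(f"|Z| = {r:.1f} ohm, theta = {math.degrees(theta):.2f} deg")
print(f"P = {p:.0f} W, Q = {q:.0f} var, S = {s:.0f} VA, pf = {pf:.2f}")
```

the pythagorean relation P² + Q² = S² holds by construction, which is all the triangle is really saying.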

as frequency increases, inductive reactance increases, and capacitive reactance | as frequency increases, inductive reactance increases, and capacitive reactance | ||

| Line 1,111: | Line 1,113: | ||

the latter differential form is the Maxwell-Faraday version, | the latter differential form is the Maxwell-Faraday version, | ||

<b>FIXME FIXME FIXME unfinished</b> | |||

so how do we bring this electricity from producers to consumers? wires, of | |||

course. steel-core aluminum wires generally. let's look at some of the numerics here. | |||

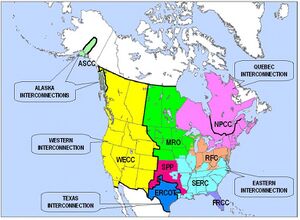

georgia has nuclear plants in Waynesboro and Baxley, about 150 and 180 | |||

miles respectively from power-hungry Atlanta. their turbines generate 26kV, | |||

which is taken up to 500kV at its generator step up units. this is three-phase | |||

alternating current. | |||

[[File:Nercmap.jpeg|thumb|right|NERC's power grids and interconnections. Each grid aims to supply 120±5% VAC at 60Hz. They're connected via HVDC.]] | |||

now, why alternating current, when most of the stuff we've encountered | |||

wants direct current? first off, we haven't been talking about things | |||

like big appliances and motors, which are often more easily implemented | |||

atop AC. i've often been told that "AC is more efficient when moving | |||

long distances". this is horseshit. <b>at a given amount of power, AC is | |||

less efficient to move</b>. for one, there's only a single phase in DC. HVAC | |||

almost always uses three wires carrying currents 120deg out of phase | |||

with one another, as it allows triple the power to be transmitted while | |||

only requiring one additional wire, and is far better for large motors. | |||

HVDC requires only one or two (the latter for bipolar current). | |||

HVAC requires more space between its wires, meaning large towers, | |||

requiring larger rights-of-way. corona lossage in HVDC is several times | |||

less than HVAC. HVDC has no charging current (necessary for overcoming | |||

parasitic capacitance, especially in underground or underwater lines), | |||

and thus suffers no reactive power loss. since there's no reactive power | |||

loss, HVDC can run as long as it likes. HVAC has a limit of a few | |||

hundred kilometers. AC's skin effect forces a maximum current density at the | |||

surface, whereas DC uses the entirety of the conductor. remember that conductor | |||

resistance is inversely proportional to cross-section; the reduced effective | |||

cross-section under AC means more loss to Joule heating (and larger, more | |||

expensive cables, and damage to insulation). the continuously varying magnetic | |||

field of AC causes long lines to act like antennae, radiating energy away. | |||

likewise there is induction loss due to crossinduction among conductors; DC is | |||

not a changing current, and thus there are no magnetic effects. the alternating | |||

field affects the insulating material, leading to dielectric loss. finally, | |||

the peak currents in AC are higher for the same rms target, requiring | |||

still more conductor material. HVDC can connect grids without synchronizing | |||

their frequencies and phases. HVDC's noise doesn't increase in bad weather. | |||

it can be controlled throughout the grid, and can use the earth as a return | |||

path. HVDC kicks HVAC's ass. | |||

there's just one problem. Faraday's Law means transformation between voltages | |||

with AC is simple. just wrap two helical coils the appropriate number of times | |||

[ ideal transformer, transformer EMF equation ] | |||

given the desired input and output voltages; AC's changing magnetic field plus | |||

induction do the rest, at very high efficiencies. you couldn't easily change | |||

large DC voltages until the development of gate-turn-off thyristors in the | |||

1980s, and such systems remain more expensive than transformers. and we absolutely | |||

need high voltages to do transmission efficiently. all the things i just listed | |||

will be overshadowed by the quadratic current term if we can't pump up the | |||

voltage. so it's not that transmission of AC is *more efficient*, it's that | |||

transformation of AC voltages can be more efficient, and used to be the only | |||

feasible scheme. once you've got a continental AC grid, the benefits of HVDC aren't | |||

worth ripping it all out. | |||
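the quadratic current term is easy to see numerically. this is a rough sketch under loud assumptions: a single conductor with a made-up 5Ω end-to-end resistance carrying a made-up 100MW, ignoring three-phase arithmetic, power factor, and every other loss mechanism listed above. the function name is hypothetical. it shows only how Joule loss scales as voltage goes from the 26kV generator level to 500kV.

```python
def line_loss_fraction(power_w, volts, resistance_ohm):
    """Fraction of delivered power lost to I^2 * R heating in the line."""
    current = power_w / volts               # I = P / V
    loss_w = current ** 2 * resistance_ohm  # Joule heating, quadratic in I
    return loss_w / power_w

POWER_W = 100e6          # hypothetical 100 MW transfer
RESISTANCE_OHM = 5.0     # hypothetical total line resistance

for kv in (26, 115, 500):
    frac = line_loss_fraction(POWER_W, kv * 1e3, RESISTANCE_OHM)
    print(f"{kv:>3} kV: {frac:.2%} of the power heats the wire")

# stepping 26 kV up to 500 kV cuts the loss by (500/26)^2
ratio = (line_loss_fraction(POWER_W, 26e3, RESISTANCE_OHM)
         / line_loss_fraction(POWER_W, 500e3, RESISTANCE_OHM))
print(f"loss ratio 26 kV vs 500 kV: {ratio:.0f}x")
```

with these toy numbers the loss falls from a ruinous fraction of the load to a fraction of a percent, purely from the step-up.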

so this prompts a question: i've got ac adapters all over my house. looking around, just about every electronic device in this room has a big cuboid of a rectifier, some bigger than others, some warmer, all of them ugly and annoying. it's like i've got 1200 square feet and at least 600 of them are fucking ac adapters. batteries always supply DC, as do solar photovoltaics, and these will form the backbone of any distributed renewable power generation/distribution. if utility power distribution must be ac, why not just stick one big ac adapter on the unit's ingress, and distribute dc throughout my unit? well, a few things: as noted earlier, some devices prefer ac to dc, especially large motors (ac motors are brushless, leading to less maintenance and longer lifetimes. as far as i know, all brushless dc motors are just ac motors with an embedded inverter) such as those in your washing machine and air compressor, maybe your vacuum or old drills. it's not generally safe to drive large total powers with dc, due to the likelihood of electric arcing. alternating current self-extinguishes most arcing, since it's changing directions many times per second, allowing the broken-down insulator (air or whatever) to return to normal. you start running the risk of serious arcing around 48V of direct current; you'll rarely see consumer dc systems at more than this threshold. circuit breakers are easier to design for alternating current for the same reason. | |||

the biggest reason, though, is simply that efficient, cheap, safe dc voltage conversion, even at low voltages, wasn't available until a few decades ago. remember that the voltage a device runs on is primarily determined by its chemical composition and workload; too much voltage will lead to breakdown. insufficient voltage for a given power load will lead to more Joule heating, and require more conductor, so you want to keep voltages reasonably high even for in-home distribution. this means you're going to pretty much require a voltage change on every small appliance, and given the ubiquitous distribution of alternating current, why not take advantage of its trivial voltage conversion? remember, you can build a transformer pretty much out of things lying around the home. a buck converter is not much less complex than an entire switching-mode power supply. you're not going to easily build one without access to other electronics. linear voltage regulators are too wasteful to really consider. so moving your home to efficient dc would require acquisition of dc appliances (not so hard) and replacing all your ac adapters with dc voltage converters—or an industry-wide movement to devices supporting native dc distribution. sadly, you're likely going to be stuck with a few dozen ac adapters for the foreseeable future; i would consider a very large-scale migration to locally-generated dc a prerequisite of in-house dc distribution for all but eccentrics. | |||

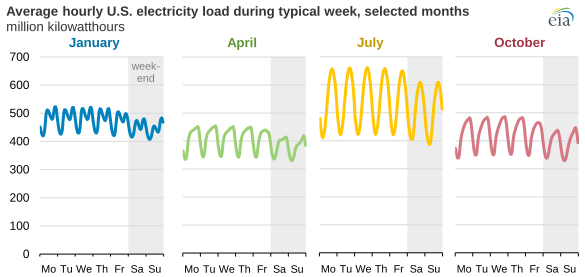

[[File:Load.png|center|600px|thumb|some patterns are immediately visible: more demand in the summer and winter (cooling and heating), less demand on weekends, demand peaks in the early evening, rapidly falling off until morning...]] | |||

local generation and microgrids are awesome, but they do lead to a final topic: supply and demand in the electric grid, which is greatly complicated by distributed generation. assuming an ac grid, there is some reference frequency (60Hz in the Americas, 50Hz in Europe) and voltage. too much demand relative to supply, and these drop (a brownout). too little demand, and they go up (wasting energy; large-scale storage has only recently become at all plausible). unfortunately, it can be difficult to add or remove supply quickly (it might require bringing an entire plant on- or offline). obviously, you can absorb a lot of variation here with better battery technology (storing overproduction, and releasing it when underproducing). | |||

==Let's do the do== | |||

alright, with this information from the previous five sections, we can pretty | |||

much conclude that our workstation is going to need to accept 120VAC/60Hz | |||

alternating current sourced from a utility. some people would have just taken this on faith, but like i said, i like to get to the bottom of things. | |||

the remaining questions are how to distribute power within the machine, and | |||

how to remove the resulting heat. | |||

so i've got an [https://www.evga.com/products/product.aspx?pn=220-T2-0850-X1 EVGA Supernova T2 850W] | |||

80+ Titanium PSU in this build, a solid unit i purchased back in 2016 (full details | |||

on the build are available [[Schwarzgerat_III|here]]). 80+ (written "80 PLUS™" in trade dress) is | |||

a matrix of minimum efficiency requirements at various percentages of rated load (note that the requirements | |||

are higher for European 240V units, for reasons we've explored above): | |||

{| class="wikitable"
! 80 Plus test type
! colspan="4" | 115V non-redundant
! colspan="4" | 230V redundant
! colspan="4" | 230V non-redundant
|-
! Percentage of rated load
! 10% !! 20% !! 50% !! 100% !! 10% !! 20% !! 50% !! 100% !! 10% !! 20% !! 50% !! 100%
|-
|style="background:#FFFFFF"| 80 Plus
| || 80% || 80% || 80% || || || || || || 82% || 85% || 82%
|-
|style="background:#9E6425" |80 Plus Bronze
| || 82% || 85% || 82% || || 81% || 85% || 81% || || 85% || 88% || 85%
|-
|style="background:#A3ADAF"| 80 Plus Silver
| || 85% || 88% || 85% || || 85% || 89% || 85% || || 87% || 90% || 87%
|-
| style="background:#F1BC47"|80 Plus Gold
| || 87% || 90% || 87% || || 88% || 92% || 88% || || 90% || 92% || 89%
|-
|style="background:#E7E7E9"| 80 Plus Platinum
| || 90% || 92% || 89% || || 90% || 94% || 91% || || 92% || 94% || 90%
|-
|style="background:#7B8781"| 80 Plus Titanium
| 90% || 92% || 94% || 90% || 90% || 94% || 96% || 91% || 90% || 94% || 96% || 94%
|-
|}

Two takeaways: Titanium is the only level mandating a performance level at low loads, and tested efficiency is best (for all units) at the 50% load (the true sweetest spot is generally around 75%). variation at low loads is quite pronounced, an important detail for large lab- or office-like fleets expected to sleep for significant chunks of time. prior to 80 PLUS and its testing regime, PSUs regularly achieved efficiencies close to 0.5, wasting as much power as they made available. you want to size your PSU so that the machine's internal load is around ¾ of the rated load to hit maximum efficiencies.

so, we can thus expect at least the following performance levels: | |||

{| class="wikitable"
! Load !! Efficiency !! Maximum draw !! Waste
|-
| 10% (85W) || 90% || 94.4W || 9.4W
|-
| 20% (170W) || 92% || 184.8W || 14.8W
|-
| 50% (425W) || 94% || 452.1W || 27.1W
|-
| 100% (850W) || 90% || 944.4W || 94.4W
|-
|}

the 4% difference between 50% and 100% load translates to a 67.3W difference in waste: we've doubled available power, but we're wasting about 3.5x as much. this isn't a huge amount relative to the input power, and a 90% efficiency at full load is nothing to sneeze at. you're not going to save much on your power bill through proper sizing, assuming power factors of at least 0.9 across the board. for purposes of heat, though, it's no good! a 94.4W waste is similar to an adult man's thermal output, and this is just from the PSU. another thing to be cognizant of is that 80 PLUS tests are performed at room temperature. the inside of your machine, especially at full load, is very likely above that, and remember that higher temperatures in this regime mean less efficient electronics. unless the ambient temperatures are around 25℃, you can expect less efficiency than the results here. this is why cases in the last decade often attempt to keep the PSU fairly thermally isolated, and why it's critical to keep your PSU egress free of dust.
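the draw and waste figures in the table fall straight out of the 115V Titanium minimums. a minimal sketch, assuming the PSU sits exactly at the certification floor (a real unit may do better); the names here are hypothetical:

```python
RATED_W = 850  # the Supernova T2's rated output

# 80 PLUS Titanium minimum efficiencies at 115V, keyed by fraction of rated load
TITANIUM_115V = {0.10: 0.90, 0.20: 0.92, 0.50: 0.94, 1.00: 0.90}

def draw_and_waste(load_w, efficiency):
    """Wall draw and wasted power for a given DC load and efficiency."""
    draw = load_w / efficiency     # input power needed to deliver load_w
    return draw, draw - load_w     # everything not delivered becomes heat

rows = []
for frac, eff in TITANIUM_115V.items():
    load = RATED_W * frac
    draw, waste = draw_and_waste(load, eff)
    rows.append((load, eff, draw, waste))
    print(f"{frac:>4.0%} ({load:.0f}W): draw {draw:.1f}W, waste {waste:.1f}W")

# how much more heat the PSU sheds at full load than at half load
waste_ratio = rows[3][3] / rows[2][3]
print(f"waste at 100% vs 50% load: {waste_ratio:.2f}x")
```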

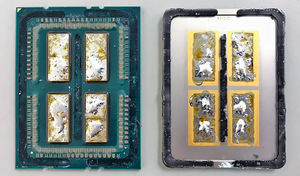

[[File:Amd3970x.jpg|right|thumb|the amd 3970x has five heat-generating dies in a quincunx.]] | |||

what is the goal of cooling? first and foremost, the active components must maintain temperatures within their operating ranges. electric devices have a threshold temperature and a maximum temperature. peak (advertised) performance is typical up to the threshold temperature. the device derates beyond that, and should not be operated above the maximum temperature. modern processors have thermal protection built in to keep temperatures from getting too high (my AMD 3970X oughtn't run over 95℃). older processors, especially AMD chips from the beginning of the century, would begin literally smoldering, reaching temperatures of hundreds of degrees, if run without cooling for even a few seconds. for processors, the resistance (and thus heat generation) is very dense; the first task of any cooling system is to remove heat from these units. modern processors typically advertise "boost" speeds; these speeds can only be reached below temperature thresholds. indeed, even the "base" clocks can only be reached with a decent cooling solution, lest the thermal protection lead to "throttling". remember that in the presence of overclocking, more heat and power are in play than at design clocks. | |||

secondly, the temperature inside the machine must be kept below thresholds. higher case temperatures will degrade performance and reduce lifetimes for peripherals, and retard the ability of the cooling system to remove heat from the processors. finally, it is desirable to do this as efficiently as possible, since the cooling itself consumes power and generates noise. leaving aside immersion cooling, this means we need to get the hot air out of the system. the ambient temperature is critical: higher ambient temperatures will mean hotter temperatures throughout, and (as we'll see below) lower heat flux (exchange with the environment). "standard ambient temperature" is 25℃. at the moment of startup, all components within a machine are at the ambient temperature; <i>whether using air or liquid cooling, they will never go below</i> (it is possible to reach subambient temperatures with extreme cooling methods i don't plan to cover in any detail). <b>it is completely meaningless to compare uncorrected operating temperatures at different ambient temperatures.</b> in addition, the sensors in your machine all have some degree of systematic error; it is not generally useful to compare absolute temperatures across machines or even across sensors.

fun fact: system firmware boots the processor without any power-saving features, as you probably knew, and at a fixed, higher-than-default voltage (to help ensure proper booting), which i'm guessing you didn't. temperatures there will usually be noticeably higher than when idling in the operating system environment. | |||

[[File:Amd3970xdelidded.png|left|thumb|gold-plated IHS contacts, classy.]] | |||

my AMD Threadripper 3970X (Zen 2 microarchitecture, but 3rd Generation Threadripper, in a maddening disconnect) is 4 "core complex dies" (CCDs), each containing two "core complexes" (CCXen). a core complex contains four cores and their caches (Zen 3 dispenses with the CCX concept; CCDs remain octacore, but unite the L3 cache). each CCD is 74mm² containing 3.9 gigatransistors on TSMC's 7nm process. "Infinity Fabric" connects them to a central "i/o die" (IOD), which is 416mm² containing 8.34 gigatransistors on TSMC's 12nm process (this is apparently borrowed from 2nd Generation EPYC). the TRX40 chipset uses a 14nm process from GlobalFoundries and dissipates 15W itself, talking to the CPU over 16 PCIe 4.0 lanes. the package is 4410.9mm². the five dies sum to 712mm², 16.14% of the package area. at 280W, this is an average of 0.393W/mm² or 39.33W/cm².
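the area and density arithmetic, as a short sketch. it assumes, as the averaged figure above does, that the full 280W is spread evenly over all five dies; in practice the CCDs and the IOD won't dissipate equally, so treat the result as a floor on the hot spots rather than a map of them.

```python
CCD_COUNT = 4
CCD_MM2 = 74          # each core complex die
IOD_MM2 = 416         # central i/o die
PACKAGE_MM2 = 4410.9
TDP_W = 280

die_area_mm2 = CCD_COUNT * CCD_MM2 + IOD_MM2   # silicon actually making heat
coverage = die_area_mm2 / PACKAGE_MM2          # share of the package it occupies
density_w_mm2 = TDP_W / die_area_mm2           # average flux, uniform-spread assumption
density_w_cm2 = density_w_mm2 * 100            # 100 mm² per cm²

print(f"die area: {die_area_mm2} mm² ({coverage:.2%} of package)")
print(f"average density: {density_w_mm2:.3f} W/mm² = {density_w_cm2:.2f} W/cm²")
```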

this topology changes the heat profile of the package. we're used to seeing a single central heat element, but here we'll have five heat generators in a quincunx configuration. the first step in cooling is the heat spreader, part of the retail CPU package. the integrated heat spreader's gold-plated base is soldered directly to the dies using an indium solder, a top-of-the-line solution. delidding this processor (removing the IHS to apply superior thermal interface material) is not likely to be helpful, and the few experiments i've seen confirm that intuition. this is a large package, and one's well-advised to get a cooler that covers the entire IHS. thankfully, there now exist numerous solutions for the sizable sTRX40. | |||

the second law of thermodynamics states that heat flows from hotter bodies to adjacent colder bodies, in proportion to the difference in temperature and the thermal conductivity of the material between them. heat moves through conducting solids via conduction, largely due to free electron diffusion. as a result, good electrical conductors are usually good thermal conductors. heat begins to flow when the processor is powered on. assuming constant heat output from the processor, and constant ambient temperature, a steady state will be reached whereupon all partial derivatives of the heat flow with respect to time are 0. the amount of heat entering the spreader is equal to the amount of heat leaving the spreader; its temperatures no longer change (the derivatives with regard to <i>space</i> may be non-zero; it is not necessary that the heat spreader have the same temperatures throughout its volume). such a system is easily analyzed. imposing a change to temperature somewhere at the boundary perturbs the spreader to a new equilibrium (assuming changes are not so rapid as to render equilibrium unattainable). this is called <i>transient conduction</i>, and is more complex. | |||

Fourier's law, the heat analogue of Ohm's law, states that heat transfer is proportional to the temperature gradient and proportional to the area normal to said gradient through which heat flows: q = -k∇T, ∂Q/∂t = -k∯∇T · dS. k is the material's thermal conductivity in W/mK, ∇T is the temperature gradient in K/m, q is the local heat flux density in W/m², and dS is an oriented (outward-normal) surface area element in m². at 0℃, the thermal conductivity of copper is 401 W/mK; this falls to about 390 as the temperature approaches 100℃. the heat spreader's nickel plating is closer to 100W/mK. there will be some small thermal resistance due to the thermal interface material (it is for this reason that applying even slightly too much TIM is counterproductive. if enough TIM is used, it effectively becomes the conductor, which is very bad). the best electrically non-conductive TIMs have thermal conductivity of about 8.5–10W/mK; electrically conductive "liquid metal" TIMs can reach 40, still well below true metal conductors.
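for a flat slab in steady state, Fourier's law collapses to ΔT = P·d / (k·A), which makes the "too much TIM" warning easy to quantify. a rough sketch under stated assumptions: the 40mm x 40mm contact patch and the layer thicknesses are hypothetical, heat flow is treated as one-dimensional and uniform, and contact resistance is ignored. only the conductivities and the 280W come from the text.

```python
def slab_delta_t(power_w, thickness_m, k_w_per_mk, area_m2):
    """Steady-state temperature drop across a uniform slab (1D Fourier's law)."""
    return power_w * thickness_m / (k_w_per_mk * area_m2)

POWER_W = 280.0
AREA_M2 = 0.040 * 0.040          # hypothetical 40mm x 40mm contact patch

materials = {                    # thermal conductivity in W/mK, from the text
    "paste TIM": 8.5,
    "liquid metal": 40.0,
    "copper": 401.0,
}

for thickness_um in (50, 500):   # hypothetical thin vs. overapplied layer
    for name, k in materials.items():
        dt = slab_delta_t(POWER_W, thickness_um * 1e-6, k, AREA_M2)
        print(f"{thickness_um:>3} um of {name:<12}: {dt:6.2f} K drop")
```

the drop scales linearly with thickness, so a layer ten times too thick costs ten times the temperature delta, and the paste is roughly fifty times worse than the same thickness of copper.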

atop our IHS goes more TIM (we want TIM wherever we have two rough surfaces meeting for thermal transfer), and then our real cooling story begins. we've safely conducted heat away from its source, but without further removing that heat, our IHS will eventually serve as a blanket. eventually, we want the heat out of the system entirely, mixing with and effectively being hidden by the ambient air (even this doesn't necessarily complete the solution, as anyone who's locked a few servers away in a poorly-ventilated closet can tell you). the usual solution is a large heatsink with numerous fins, maybe some heat pipes, and localized fans, combined with ingress and egress fans on the case. this results in heat dissipating from the processor complexes (and VRMs, and memory, and chipset, etc.) into the case's air, and that air being ejected, with ambient air replacing it. | |||

[[File:Ohyeah.jpg|right|thumb|[ferris bueller music intensifies]]] | |||

let's analyze this. my Caselabs Magnum T10A is 15x25.06x26.06in, or 381x637x662mm. empty, that's 0.161m³. at 25℃ and one atmosphere, dry air has a density of 1.1839kg/m³ (it gets less dense as temperature rises), suggesting 0.19kg of air in the case. its specific heat is 1.005 kJ/kg/K, which rises with temperature. assuming no replacement of air, 190.95J ought raise the temperature of the case's interior one degree. at 280W TDP, that amount of energy requires 0.7s. i guess we'd better move that air! my [[Schwarzgerät_III#Cooling|custom loop]] contains about 1L of distilled water, weighing about 1kg. water's specific heat at 25℃ is 4.187kJ/kg/K. this fourfold increase in specific heat and 700+-fold increase in density work in our favor: it ought require about 4187J to raise the loop's temperature by a degree, about 22x as much energy, requiring 15s at 280W. remember the all-important temperature delta: heat moves more effectively when there is a larger difference, and the liquid fosters such. it is furthermore a larger sink for sudden heat spikes. even with these advantages, however, it's no good if we can't get the liquid cooled; adding four degrees per minute is completely unsustainable. try running a watercooled system without any fans going, and you'll verify this quite quickly. furthermore, the heat doesn't propagate quickly enough through the liquid to be removed on its own; it's necessary to drive the liquid with a pump, and this can likewise be verified quickly and easily. indeed, without pumping the liquid, results are likely to be far worse than with air cooling.
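the same back-of-envelope comparison as a sketch. it assumes an empty, sealed case and a loop of exactly 1kg of water, with no heat leaving either, so these are worst-case heating rates rather than anything you'd measure. carrying full precision gives about 191J/K for the air instead of 190.95J/K, which is just the rounding of 0.19kg.

```python
# case interior, air at 25 C and one atmosphere
CASE_M3 = 0.381 * 0.637 * 0.662      # Magnum T10A interior, empty
AIR_DENSITY = 1.1839                 # kg/m^3
AIR_CP = 1005.0                      # J/kg/K

# custom loop
WATER_KG = 1.0                       # about 1L of distilled water
WATER_CP = 4187.0                    # J/kg/K

TDP_W = 280.0

air_j_per_k = CASE_M3 * AIR_DENSITY * AIR_CP    # energy to warm the case air 1 K
water_j_per_k = WATER_KG * WATER_CP             # energy to warm the loop 1 K

air_s_per_k = air_j_per_k / TDP_W               # seconds per degree, nothing removed
water_s_per_k = water_j_per_k / TDP_W
water_k_per_min = 60.0 / water_s_per_k

print(f"air:   {air_j_per_k:.0f} J/K, one degree every {air_s_per_k:.2f} s")
print(f"water: {water_j_per_k:.0f} J/K, one degree every {water_s_per_k:.1f} s")
print(f"water holds {water_j_per_k / air_j_per_k:.0f}x the energy per degree")
print(f"unfanned loop climbs about {water_k_per_min:.1f} K per minute")
```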

[ show machine running without fans, then without pumps ] | |||

another advantage is that our heat is removed from the loop at the case's boundaries (heat leakage from the tubes themselves is negligible), where we install our radiators. the hot air is thus ejected outside the system immediately, rather than transiting the interior. this keeps other components cooler, and is likely to remove the heat more quickly overall. finally, using the surface of the machine rather than the surface of the heatspreader as our release means massively more area. my machine boasts 1200mm² of radiator area, meaning greater volumes into which heat can diffuse. the upshot is lower temperatures per watt (opening up overclocking headroom and avoiding any thermal throttling), and fewer acoustic decibels per watt (meaning complete silence in which to hear the loudest fucking keyboard i can buy, CLACK CLACK CLACK). | |||

another concern with this build was power. while the Supernova can supply all its rated 850W as 12V DC, it can do only 100W as native 5V output. furthermore, SATA cables carry only 22.5W of 5V power and 54W of 12V power. i have 14 Seagate Exos 18TB hard drives, requiring peak inrush currents of 1.01 5V amps and 2.02 12V amps. that's 70.7W of 5V and 339.36W of 12V total, with each SATA power cable capable of handling only two drives within its spec (in actuality, the inrush current is only needed for a fraction of a second, and i've had no problem hanging four off a single cable...but this presumably tempts fate). with numerous other 5V draws competing, it's quite possible that i could exceed the PSU's 100W of 5V. what to do, what to do? | |||
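the drive power budget as a quick sketch, using only the figures quoted above (inrush currents, per-cable SATA limits, and the PSU's 100W of 5V). the variable names are hypothetical, and it treats all fourteen drives as spinning up at once, which is the pessimistic case.

```python
import math

DRIVES = 14
INRUSH_5V_A, INRUSH_12V_A = 1.01, 2.02   # peak inrush per drive
SATA_5V_W, SATA_12V_W = 22.5, 54.0       # per-cable limits
PSU_5V_W = 100.0                         # PSU's native 5V capacity

per_drive_5v_w = 5 * INRUSH_5V_A
per_drive_12v_w = 12 * INRUSH_12V_A

total_5v_w = DRIVES * per_drive_5v_w
total_12v_w = DRIVES * per_drive_12v_w

# a cable is limited by whichever rail runs out first
drives_per_cable = min(int(SATA_5V_W // per_drive_5v_w),
                       int(SATA_12V_W // per_drive_12v_w))
cables_needed = math.ceil(DRIVES / drives_per_cable)
headroom_5v_w = PSU_5V_W - total_5v_w    # what's left for every other 5V draw

print(f"5V total:  {total_5v_w:.1f} W (headroom {headroom_5v_w:.1f} W)")
print(f"12V total: {total_12v_w:.2f} W")
print(f"{drives_per_cable} drives per cable in spec, {cables_needed} cables needed")
```

the 12V rail is the per-cable bottleneck (two drives), while the 5V rail is the PSU-wide one, leaving under 30W for everything else on 5V.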

well, again, you can consult [[Schwarzgerät_III|my writeup]] to get all the gory details. suffice to say that several 66W/12V PCIe extension cables were going unused, and were pressed into service for hard drives. PCIe 8-pin goes to Molex 2-pin 12V. splice the 12V line as it emerges from the Molex, and throw a buck converter on that fucker, bringing out a 5V level. feed it back into the Molex connector as the other two 5V pins. boom, we've got ~60W of combined 5+12V available from where there was once only 12V, <i>and it doesn't count against the PSU's 5V total</i>. and that, my droogs, is a hack like grandma used to make. | |||

==Final words== | |||

We've gone back to the big bang and seen how fourteen billion years and three generations of stars nucleosynthesized the elements we use to build and power our machines and ourselves. We've looked at the results of three and a half billion years of terrestrial evolution, probably not the only life in the universe, but definitely the only life we know. We talked about five hundred million years of pressure and desiccation, and how they gave rise to the fossil fuels that powered the Industrial Revolution and now present a bill in the form of climate change. | |||

We, as individuals or as a species, don't get billions of years, but only a brief existence, an absurd blink of the eye, our identities and senses of selves arising from a short-lived ensemble of low-entropy neurons, decaying like all things back into the lukewarm slurry of thermodynamic equilibrium. It is our duty in this interim to learn what we can, to improve our species, and to live bravely despite heat death's ineluctable execration. To <i>differentiate</i>. To put energies to use, to work and to extract work. Yet all remain destined for a night that is eternal and without name. One by one they will step down into the darkness before the footlamps. | |||

Until that time, we shall overclock. Thanks for reading. Hack on. | |||

<i>“I leave Sisyphus at the foot of the mountain. One always finds one's burden again. But Sisyphus teaches the higher fidelity that negates the gods and raises rocks. He too concludes that all is well. This universe henceforth without a master seems to him neither sterile nor futile. Each atom of that stone, each mineral flake of that night-filled mountain, in itself, forms a world. The struggle itself toward the heights is enough to fill a man's heart. One must imagine Sisyphus happy.”</i>—Albert Camus | |||

[[File:Therock.jpg|center|frame|<i>The Rock</i>, Peter Blume (1948)]] | |||

==Going deeper== | |||

Tom's Hardware's massive "[https://www.tomshardware.com/reviews/power-supplies-101,4193-17.html PSU 101]" deep-dive is a fine piece of detailed technical writing.

The following texts were very useful while preparing this <i>billet-doux</i>: | |||

* Stephen Ward and Robert Halstead, <i>Computation Structures</i> (1996). | |||

* David Griffiths, <i>Introduction to Elementary Particles</i> 2nd Edition (2008). | |||

* Vaclav Smil, <i>Energy in Nature and Society: General Energetics of Complex Systems</i> (2008). | |||

* Alberts et al, <i>Molecular Biology of the Cell</i> 6th Edition (2014). | |||

* Richard Feynman, <i>QED: The Strange Theory of Light and Matter</i> (1985). | |||

* Erwin Schrödinger, <i>What is Life?</i> (1944).

* Paul Tipler and Ralph Llewellyn, <i>Modern Physics</i> 6th Edition (2012). | |||

* Frank Incropera et al, <i>Fundamentals of Heat and Mass Transfer</i> 6th Edition (2006). | |||

They will all be published in Heaven. | |||

Latest revision as of 19:01, 8 September 2024

nb: if this reads like a script, that's because it was originally going to be a video in my DANKTECH series. maybe it still will, one day.

hey there, my hax0rs and hax0rettes! it's been a good long time since we last got together, but i hope to make up for paucity and exiguity with a surfeit of ass-rocking quality. i rebuilt my workstation recently, and it left me with a few questions. i looked into the answers, and thought the investigation a good time, and maybe you'll likewise find it informative, entertaining, and even useful.

as always, we're gonna cover a lot of ground in an hour, all the way from a wall outlet, to the big bang, to refrigerator design, to the dance of electrons. i am *not* an expert on all of these topics, so definitely listen with a critical ear and watch with a scrupulous eye, but, you know, you really ought be doing that all the time. if i have a general theme tonight, i suppose that theme is: power. in the world of huey lewis and the news, love can be measured in watts, the SI unit of power. love applied over time would furthermore be work, and measured in joules. but i'm an engineer, so this evening we'll be quantifying the power of love, but mainly the love electrons feel towards cations.

Intro

so first i'd like to tell a little story from 2018. i had just started putting together a new machine. big plans, big dreams. i installed the psu, mated the cpu, and hung the mobo. now here i typically spin up this computational core of a new build. otherwise, if there's any problem, the first thing i'll be doing is stripping the box back down to these essentials. so i turn it on, and nothing. dead air. horror vacui. sigh. alright, let's first validate this power supply. now i've got an ATX power supply tester. you can pick one of these up for about fifteen bucks, and they're well worth it if you're fucking at all regularly with boxen.

[ demonstrate testing with PSU tester ]

actually, let's talk about ATX for just a second. ATX, "Advanced Technology eXtended", was a 1995 Intel standard for PSUs, motherboards, and chassis (chassis is borrowed from the french châsse, derived from the Latin capsa, meaning case or box, so calling your machine a box isn't just technohipster argot drivel). your rear I/O panel today has all kinds of crap undreamable in 1995, but it's still 158.75mm by 44.45mm because of a Clinton-era spec. The Advanced Technology being eXtended was that of the IBM 5170, the AT, introduced in 1984 and built around the 16-bit 286. It itself followed 1983's 5160 XT, a disappointing successor to the original 5150 IBM PC and its 4.77MHz 8088. If you're rummaging around at the dump looking for mechanical keyboard gold, and you find one of these ungainly 5-pin DIN connectors, that's from the XT.

And you're looking at an old keyboard indeed in that case, probably an IBM Model F. The famous Model M wasn't released until 1986, and the vast majority of them used the 6-pin PS/2 connector introduced in 1987. oh, man, and I could talk your heads off about keyboard technology, but it's a pretty niche area of interest, and our days are sadly numbered. It's kinda weird that ATX references the AT in its name, actually, since most of what it eXtended came from the PS/2, and you'll sometimes even come across the term "ATX/PS2 power supply". none of these IBM designs were really standards, though; they were just products, with which other vendors more or less interoperated, and which they often cloned. in fact, if you remember the ISA bus, the "Industry Standard Architecture" that preceded the VESA Local Bus and PCI, that was just a renaming of the 8-bit XT and 16-bit AT IBM buses, in response to the proprietary Micro Channel Architecture bus introduced on the PS/2. by the way, you still have some AT naming legacy in your machine today: SATA is the serial version of ATA, the AT Attachment, the AT's hookup to its 20 megabyte hard disk. and ATAPI is just a packet interface atop that. so raise a glass to William Lowe and Don Estridge and the IBM Boca Raton team; besieged by Compaq and Dell and Hewlett Packard, IBM lost the war, but their legacy lives on.

ATX is still the most common motherboard design for workstations, and other designs (mini-ATX, XL-ATX, SFX, etc.) are usually compatible with ATX in their common areas. so you almost certainly have the 24-pin main power connector defined by ATX12V.

ATX originally included three cables total:

- 4-pin 18 AWG AMP 1-480424-0 / Molex 15-24-4048 + AMP 61314-1 contacts, the peripheral ("Molex") connector for drives

- 4-pin 20 AWG AMP 171822-4 "Berg" connector for floppies

- 20-pin Molex Mini-fit Jr. motherboard connector

and suggested that most of the PSU's power ought be on 5V and 3.3V rails.

Well, the Pentium 4 came out, and the NetBurst microarchitecture is of course famous for being lavish with heat and power. Now understand that this is all relative. The most power-hungry Pentium III consumed 34.5W, and most of them ate 20 to 25. The 8086 wanted 1.87W. The PC AT came with a 192W power supply for the entire machine, up from the XT's 130W. The hungriest Athlon was, I believe, the 1400MHz T-Bird at 72W. Opterons maxed out at 89W on the 800-series SledgeHammers. Well, the Prescott P4 and its 31 pipeline stages at 3.8GHz had a TDP of 115W, about 30% more than the audacious SledgeHammer 850 (though admittedly the latter was running at roughly two-thirds the clocks).

Well, my AMD Threadripper 3970X has a TDP of 280W, almost two and a half times that. You have this story of computers steadily taking less power. Well, they certainly do for the same amount of work. But we give them bigger and bigger problems: Parkinson's Law, "work expands to fill the time available for its completion." And indeed Gustafson's law indirectly formalizes this concept. Amdahl's Law says "the reduction in total time due to increased parallelism is bounded by the intrinsically serial portion of the problem", but Gustafson frames it as "the amount of total work you can accomplish concurrently with the serial portion of the code increases with parallelism." So yeah, a transistor on a 5nm process is smaller and wastes less power than a 90nm transistor, but if you fill the same die area with those 5nm transistors, you're taking way more power, and I'll dig deeply into this later. It wasn't supposed to work that way: shrink a transistor, and its voltage and current were meant to shrink with it, so that a chip of a given area dissipated about the same power, at the same average thermal density, on every process. This is known as Dennard scaling, and it broke down in the mid-2000s, when voltages could no longer keep dropping without leakage getting out of hand.
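To put numbers on the Amdahl versus Gustafson framing, here is a minimal Python sketch. The function names and the 95% parallel fraction are my own illustrative assumptions, not anything measured; the formulas are the standard textbook statements of the two laws.

```python
def amdahl_speedup(parallel_fraction: float, workers: int) -> float:
    """Fixed problem size: the serial part caps the speedup."""
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / workers)


def gustafson_speedup(parallel_fraction: float, workers: int) -> float:
    """Fixed run time: the parallel part of the work grows with the workers."""
    serial = 1.0 - parallel_fraction
    return serial + parallel_fraction * workers


if __name__ == "__main__":
    # 0.95 is an assumed parallel fraction, chosen only for illustration.
    for n in (1, 8, 64, 1024):
        print(n, round(amdahl_speedup(0.95, n), 2), round(gustafson_speedup(0.95, n), 2))
```

Amdahl's number flattens out below 20x no matter how many workers you add, while Gustafson's keeps climbing, because the problem is allowed to grow with the machine.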

So yeah your top-tier processors of 2022 are faaaaaar more power-hungry than those of 2004, but we've got tremendously better cooling systems. Until 1989's 486, Intel processors had no cooling. Some 486s got a snap-on heat sink, and OEMs sometimes added 50mm fans. With the Pentium, active cooling was required, and gentlewomen were seen fainting from the vapours when the P54 Pentium Overdrive came with a fan preinstalled, the first of the "stock fans".

By 2005 you started seeing massive heatsinks with 120mm fans and heat pipes. You started seeing high-quality thermal interface material. 2007 saw the first dual tower cooler of which I'm aware, the Thermalright IFX-14 (IFX stood for Inferno Fire eXtinguisher). In 2008 you saw a little Austrian company called Noctua release their NH-U12P, and one year later the legendary NH-D14. I ordered one of the latter for my 2011 Sandy Bridge i7 2600K build, and it was like a piece of alien technology. I'd never seen anything remotely like it. and now the kids are delidding their processors and boofing "liquid metal" and communicating exclusively through eyerolls and dick pics. you can't let the little fuckers generation gap you.

Anyway back to the ATX motherboard connector. The Pentium 4 needed more juice than that available, and it wanted it in the 12 volt form. By this time, processors were running at a little over a volt, much as they do today. Well, more accurately they were running at a wide range of different voltages, all of them less than 3.3V, the lowest voltage available from the PSU (not only do smaller processes allow less voltage, they mandate less voltage, lest they die. Put 2V into your modern processor, and you'll kill it immediately). So first motherboards added linear voltage regulators, but those burn off the entire voltage drop, times the load current, as heat. This became rapidly untenable as currents increased, but power MOSFETs that could switch more quickly than bipolar transistors, and which provided synchronous rectification to replace the lossier flyback diodes, gave rise to efficient and stable onboard buck converters. Your modern N-phase VRMs are simply N synchronous buck converter circuits whose switching is staggered evenly across the switching period. Such a VRM can respond to load changes like a buck converter switching at N times the speed, without the increase in switching losses that would be expected. It also allows the heat loss of the switching to be divided across N areas, and can drastically reduce ripple current.
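A rough Python sketch of why linear regulators stopped being viable, and of what N phases buy you. Everything here is idealized: the function names are mine, the 3.3V to 1.5V at 30A load is a made-up example, and quiescent current and real-world converter losses are ignored.

```python
def linear_regulator(v_in: float, v_out: float, i_load: float) -> tuple[float, float]:
    """Return (heat in watts, efficiency) for an ideal linear regulator.

    The pass element drops (v_in - v_out) while carrying the full load current.
    """
    heat = (v_in - v_out) * i_load
    efficiency = v_out / v_in
    return heat, efficiency


def multiphase(i_load: float, f_switch: float, phases: int) -> tuple[float, float]:
    """Return (current per phase, effective ripple frequency) for N interleaved phases."""
    return i_load / phases, f_switch * phases


if __name__ == "__main__":
    # Hypothetical load: 1.5V core at 30A fed from the 3.3V rail.
    heat, eff = linear_regulator(3.3, 1.5, 30.0)
    print(f"linear: {heat:.1f} W of heat, {eff:.0%} efficient")
    # Hypothetical VRM: 8 phases, each switching at 500 kHz, sharing 120A.
    per_phase, ripple_hz = multiphase(120.0, 500e3, 8)
    print(f"8 phases: {per_phase:.1f} A each, ripple at {ripple_hz / 1e6:.1f} MHz")
```

The linear case wastes more power than it delivers. The multiphase case keeps each phase's switching frequency (and its switching losses) where they were while the output sees N times the update rate.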

[ DC-to-DC regulator math:

$P_D = V_D (1 - D) I_0$

$P_{S_2} = I_0^2 R_{DSon} (1 - D)$

$P_{SW} = \frac{V I_0 (T_{rise} + T_{fall})}{6T}$ (with $2T$ in the denominator once the Miller plate is accounted for)

$P_{leak} = I_{leak} V$

$P_{Dbody} = V_F I_0 T_{no} f_{SW}$

$P_{GDrive} = Q_G V_{GS} f_{SW}$

$T_{on} = DT = \frac{D}{f}$

$T_{off} = (1 - D)T = \frac{1 - D}{f}$

$D = \frac{V_0 + (V_{SWsync} + V_L)}{V_i - V_{SW} + V_{SWsync}}$

$\Delta I_{L_{off}} = \int_{T_{on}}^{T_{on} + T_{off}} \frac{V_L}{L}\,dt = -\frac{V_0}{L} T_{off}, \quad T_{off} = (1 - D)T$

]
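To get a feel for the magnitudes, here is a hedged Python sketch that plugs numbers into four of the loss terms above. The component values are invented for illustration, not taken from any datasheet, and the duty cycle uses the ideal approximation D = V_out / V_in rather than the full expression with switch and inductor drops.

```python
def buck_losses(*, v_in, v_out, i_out, f_sw, r_ds_on,
                t_rise, t_fall, q_gate, v_gs, v_f_body, t_dead):
    """Evaluate several loss terms for one synchronous buck phase (watts)."""
    period = 1.0 / f_sw
    duty = v_out / v_in  # ideal duty cycle, ignoring switch and inductor drops
    return {
        "duty": duty,
        # I_0^2 * R_DSon * (1 - D): low-side MOSFET conduction
        "low_side_conduction": i_out ** 2 * r_ds_on * (1.0 - duty),
        # V * I_0 * (T_rise + T_fall) / 6T: switching loss, no Miller correction
        "switching": v_in * i_out * (t_rise + t_fall) / (6.0 * period),
        # Q_G * V_GS * f_SW: gate drive
        "gate_drive": q_gate * v_gs * f_sw,
        # V_F * I_0 * T_no * f_SW: body diode conduction during dead time
        "body_diode": v_f_body * i_out * t_dead * f_sw,
    }


if __name__ == "__main__":
    # All values below are hypothetical.
    losses = buck_losses(v_in=12.0, v_out=1.2, i_out=20.0, f_sw=500e3,
                         r_ds_on=0.005, t_rise=10e-9, t_fall=10e-9,
                         q_gate=20e-9, v_gs=5.0, v_f_body=0.7, t_dead=40e-9)
    for name, value in losses.items():
        print(f"{name}: {value:.3f}")
```

With these made-up parts the conduction term dominates, which is one reason low R_DSon matters so much at high current.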

So once you have that, you want to work on the highest voltage possible, since more current requires a thicker wire and implies more loss to Joule heating: P = I²R.
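A quick Python sketch of that P = I²R argument: deliver the same power over the same wire at each rail voltage and compare what the wire eats. The 100W load and 10 milliohm wire resistance are assumed round numbers, and the function name is mine.

```python
def wire_loss_watts(power_w: float, volts: float, resistance_ohms: float) -> float:
    """Joule heating in the wire when delivering power_w at the given voltage."""
    current = power_w / volts
    return current ** 2 * resistance_ohms


if __name__ == "__main__":
    # Assumed: 100 W load, 0.01 ohm of total wire and contact resistance.
    for rail in (12.0, 5.0, 3.3):
        loss = wire_loss_watts(100.0, rail, 0.01)
        print(f"{rail:>4} V: {100.0 / rail:5.1f} A, {loss:.2f} W lost in the wire")
```

Loss scales with the inverse square of the voltage, so moving the same load from 5V to 12V cuts the wire loss by a factor of (12/5)², a bit under 6.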

So the highest voltage we can get from the PSU is 12V, so that's what the CPU wants. And thus in February 2000 ATX12V was born, adding a 4-pin Molex Mini-Fit Jr. 39-01-2040 carrying two 12V pins and two grounds straight to the CPU's regulators. The main connector followed in ATX12V 2.0, growing from 20 pins to 24 and picking up an extra 3.3, 5, and 12V pin (plus an extra ground). Now we already had 3x 3.3V pins and 4x 5V pins, so our capacities there rose 33% and 25% respectively. But our 12V capacity on the main connector doubled, since we only had 1 pin. And given that max amperage is the same across all pins, that's a big increase in total deliverable wattage.
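The pin arithmetic in that paragraph can be checked with a few lines of Python. The 6A per-pin limit is an assumption picked purely for illustration (real ratings depend on the terminal and wire gauge); the pin counts are the ones quoted above.

```python
PIN_AMPS = 6.0  # assumed per-pin current limit, illustrative only

OLD_PINS = {3.3: 3, 5.0: 4, 12.0: 1}  # volts -> pin count before the extra pins
NEW_PINS = {3.3: 4, 5.0: 5, 12.0: 2}  # volts -> pin count after


def rail_watts(pins: dict, amps_per_pin: float = PIN_AMPS) -> dict:
    """Deliverable watts per voltage if every pin carries the same maximum current."""
    return {volts: volts * count * amps_per_pin for volts, count in pins.items()}


def percent_increase(old: dict, new: dict) -> dict:
    """Percentage growth in pin count (and thus capacity) per voltage."""
    return {volts: 100.0 * (new[volts] - old[volts]) / old[volts] for volts in old}


if __name__ == "__main__":
    print(percent_increase(OLD_PINS, NEW_PINS))
    print(sum(rail_watts(OLD_PINS).values()), sum(rail_watts(NEW_PINS).values()))
```

Under that assumed limit, one extra 12V pin adds more deliverable watts than the extra 3.3V and 5V pins combined.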

Note that your CPU was now taking a much greater portion of your total power than it was before. So ATX12V 1.0 likewise moved the focus of the PSU off the lower-voltage rails and onto the 12V rails, and ATX12V 2.0 in 2003 made the 12V even more of a focus, due to the recent introduction of power-hungry 12V PCI Express devices (each PCIe slot must be able to supply 75W). You tended to have multiple rails for a given voltage at this time, due to a 240 VA limit per rail up until 2007's ATX12V 2.3. Today, there's not much advantage to a multiple-rail design, and given that balancing your draws across multiple rails can be a major pain in the ass, we can be thankful for that. We're now at ATX12V v2.53, last updated in June 2020.

so anyway, back to testing your power supply. if you don't have a little ATX power tester, you can of course just get a copy of the ATX12V pinout (if you're using EPS, its primary 24-pin connector is electrically compatible with ATX12V) and a paperclip.

[ demonstrate paper clip shorting ]

short the PS_ON pin with any ground, and the PSU's fan ought start spinning. if it doesn't, it's possible that your PSU requires some actual load to turn on the fan, and you might be able to turn this off with a switch. this is applicable to passive power testers like the one i just showed, as well: they don't present any significant load. if you've got one of the newer ATX12VO 10-pin, 12V-only PSUs, the pinout is different, but you still just need to get PS_ON connected to ground. to get all the information that one of the testers would give you, you'll then need a multimeter; it's pretty simple to test the 4 3.3V lines, the 5 5V lines, and the 2 12V lines. there's also a 5V standby line (supplying power even when the machine is "off"), and the 5V PWR_OK line (driven only when the PSU has stabilized outputs, and they're safe for use), and a -12V line you're unlikely to use unless you've got an RS-232 port.
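once you've got multimeter readings, you need something to compare them against. here's a small python sketch that does the comparison. the tolerances (5% on the positive rails and standby, 10% on -12V) are the figures i remember from the ATX12V design guide, so treat them as an assumption and check your PSU's documentation; the rail names and the function are mine.

```python
# rail name -> (nominal volts, allowed fractional deviation); assumed values
RAILS = {
    "+3.3V": (3.3, 0.05),
    "+5V": (5.0, 0.05),
    "+12V": (12.0, 0.05),
    "+5VSB": (5.0, 0.05),
    "-12V": (-12.0, 0.10),
}


def in_spec(rail: str, measured_volts: float) -> bool:
    """True if the reading is within the assumed tolerance band for that rail."""
    nominal, tolerance = RAILS[rail]
    return abs(measured_volts - nominal) <= abs(nominal) * tolerance


if __name__ == "__main__":
    # made-up readings, as if taken off the 24-pin connector
    readings = {"+3.3V": 3.31, "+5V": 5.08, "+12V": 11.2, "+5VSB": 5.0, "-12V": -11.4}
    for rail, volts in readings.items():
        print(rail, volts, "ok" if in_spec(rail, volts) else "OUT OF SPEC")
```

note this only checks unloaded DC levels; a rail that looks fine with a paperclip can still sag under real load.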

[ demonstrate testing with multimeter ]

by the way, have you ever wondered why it's 12V, 5V, and 3.3V? i sure have. as best as i can tell, your 12V comes from the automotive world, which makes sense given that 12V is geared towards hard drive and fan motors. lead-acid batteries tend to provide right around 2V per cell; zinc-carbon and alkaline batteries yield just about 1.5V. why is it 2V? let's look at the two reactions going on. at the cathode, lead dioxide is reduced: PbO2 + HSO4- + 3H+ + 2e- -> PbSO4 + 2H2O, giving lead sulfate and water, with a standard potential of 1.69V, nice. that's taking lead from the +4 state to +2. at the anode, we're taking lead from the 0 state to +2, and Pb + HSO4- -> PbSO4 + H+ + 2e- has a standard potential of -0.356V. so this will be driven by the reduction reaction, and we subtract the anode from the cathode to get 2.05V. we can then use the Nernst equation for redox reactions to determine the voltage loss as the battery is

[ show nernst equation ]

depleted, as this sulfuric acid is converted to water and both sides become lead sulfate. it's the difference between the strong bonds of water and the weaker bonds of the reactants that lends us our energy. the battery needs a circuit to discharge through because otherwise these electrons collect, and inhibit the dissociation of sulfuric acid. wiring batteries in series gives us a voltage equal to the sum of the voltages, so 12V takes 6x 2V or 8x 1.5V batteries. this strikes a good balance between efficiency, which wants higher voltages, and cost/size of battery, which wants lower voltages (if any one of the batteries wired in series faults, the entire larger device faults).
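here's the cell arithmetic and the Nernst equation as a minimal python sketch. the half-cell potentials are the ones quoted above; the function names and the reaction quotient values are made up for illustration, since a real quotient depends on the acid and water activities in your particular battery.

```python
import math

R = 8.314462618    # gas constant, J/(mol*K)
F = 96485.33212    # Faraday constant, C/mol


def cell_voltage(e_cathode: float, e_anode: float) -> float:
    """Standard cell potential: cathode minus anode half-cell potentials."""
    return e_cathode - e_anode


def nernst(e_standard: float, n_electrons: int, reaction_quotient: float,
           temp_k: float = 298.15) -> float:
    """Nernst equation: E = E0 - (R*T / (n*F)) * ln(Q)."""
    return e_standard - (R * temp_k) / (n_electrons * F) * math.log(reaction_quotient)


if __name__ == "__main__":
    e0 = cell_voltage(1.69, -0.356)
    print(f"one cell: {e0:.3f} V, six in series: {6 * e0:.2f} V")
    # hypothetical reaction quotients: Q grows as acid turns into water
    for q in (1.0, 10.0, 1000.0):
        print(f"Q = {q:>6}: {nernst(e0, 2, q):.3f} V per cell")
```

each tenfold increase in Q costs about 30mV per cell at room temperature with two electrons transferred, which is why the voltage droops gently rather than falling off a cliff until the reactants are nearly gone.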

5V is pretty much a straight outgrowth of the chemistry of early TTL (transistor-transistor logic, built from bipolar junction transistors), where you needed 2V+ to signal a high, couldn't take 6V without damage due to the chemical composition of your NPN transistors, and higher voltage meant fewer noise concerns. 5V gave you a high signal easy to reliably hold without crossing into the danger zone. CMOS moved quickly to 3.3V, since voltage is squared in the CMOS power equations (and the smaller CMOS devices allowed you to keep up frequencies with less voltage; less voltage at the same process size will generally lead to lower slew rate (the rate of change of voltage or current), with a direct result of greater propagation delays, meaning longer cycle times, meaning lower frequency). LVTTL was introduced following this change to interoperate with CMOS more easily, but it came out well after CMOS.
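the "voltage is squared" bit is the usual CMOS dynamic power relation, P = a * C * V^2 * f. a tiny python sketch, with an invented capacitance and clock, shows what the move from 5V to 3.3V buys at the same frequency. the function name and the activity factor parameter are my own.

```python
def dynamic_power(capacitance_f: float, volts: float, freq_hz: float,
                  activity: float = 1.0) -> float:
    """CMOS switching power: activity * C * V^2 * f, in watts."""
    return activity * capacitance_f * volts ** 2 * freq_hz


if __name__ == "__main__":
    # assumed: 1 nF of switched capacitance at 100 MHz
    p5 = dynamic_power(1e-9, 5.0, 100e6)
    p33 = dynamic_power(1e-9, 3.3, 100e6)
    print(f"5V: {p5:.2f} W, 3.3V: {p33:.2f} W, ratio {p33 / p5:.3f}")
```

the ratio is (3.3/5)^2, so the same circuit at the same clock burns well under half the switching power.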

alright, so that was a bit of a digression, but back to my story! so i've got my PSU tester out, and there's no love, no flow whatsoever. the electrons are silent, they're insolent. well, shit. i want to see the rest of the build working, so i throw in an old PSU i've got laying around. once again no love. well, shit! i guess we'll toss the old one and RMA the new one. this is out of the ordinary, though, so i go pull a PSU out of my living room server. i put it in the new machine. nothing. ok, well i just saw this working, so either the motherboard is lethal to PSUs, or what, this outlet is bad? alright, let's test the outlet. well, my monitor is plugged into the same pair of outlets, so that argues against the idea, but i really don't want to contemplate a monster-in-my-motherboard so fuck it, let's get down. a standard north american receptacle is the tamper-resistant 15A NEMA 5-15R, where R stands for receptacle; the corresponding plug is the NEMA 5-15P, and these are internationalized as IEC 60906-2. you'll also see 20A 120V, and for large appliances you'll see the 240V NEMA L6-30R, where the L indicates locking.

two quick facts you might not know:

- the plug's ground pin (the one in the middle) is 1/8" longer than the power blades, or at least it ought be. this is so that it makes contact before they do, grounding it before it's live. grab a nearby cable and check it out if you don't believe me.