Check out my first novel, midnight's simulacra!

CUDA: Difference between revisions

"65K" ?! |

|||

| (24 intermediate revisions by the same user not shown) | |||

| Line 1: | Line 1: | ||

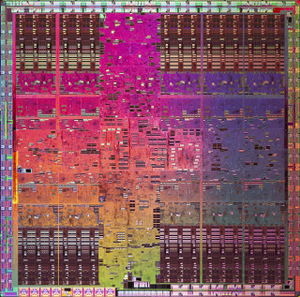

[[File:Gt200die-big.jpg|right|thumb|A "Fermi" GT200 die]] | [[File:Gt200die-big.jpg|right|thumb|A "Fermi" GT200 die]] | ||

==Hardware== | ==Hardware== | ||

NVIDIA maintains a list of [http://www.nvidia.com/object/cuda_learn_products.html supported hardware]. | NVIDIA maintains a list of [http://www.nvidia.com/object/cuda_learn_products.html supported hardware]. You'll need the "nvidia.ko" kernel module. On [[Debian]], use the <tt>nvidia-kernel-dkms</tt> package to build a module appropriate for your kernel (and automatically rebuild it upon kernel upgrades). You can also download the <tt>nvidia-kernel-source</tt> and <tt>nvidia-kernel-common</tt> packages, unpack <tt>/usr/src/nvidia-kernel.tar.bz2</tt>, and run <tt>make-kpkg modules_image</tt>. Install the resulting .deb, and modprobe nvidia. You'll see something like this in dmesg output:<pre>nvidia: module license 'NVIDIA' taints kernel. | ||

Disabling lock debugging due to kernel taint | Disabling lock debugging due to kernel taint | ||

nvidia 0000:07:00.0: enabling device (0000 -> 0003) | nvidia 0000:07:00.0: enabling device (0000 -> 0003) | ||

| Line 7: | Line 7: | ||

nvidia 0000:07:00.0: setting latency timer to 64 | nvidia 0000:07:00.0: setting latency timer to 64 | ||

NVRM: loading NVIDIA UNIX x86_64 Kernel Module 190.53 Wed Dec 9 15:29:46 PST 2009</pre> | NVRM: loading NVIDIA UNIX x86_64 Kernel Module 190.53 Wed Dec 9 15:29:46 PST 2009</pre> | ||

Once the module is loaded, CUDA should be able to find the device. See [[CUDA#deviceQuery_Output|below]] for sample outputs. Each device has a | Once the module is loaded, CUDA should be able to find the device. See [[CUDA#deviceQuery_Output|below]] for sample outputs. Each device has a [[CUDA#Compute_Capabilities|compute capability]], though this does not encompass all differentiated capabilities (see also <tt>deviceOverlap</tt> and <tt>canMapHostMemory</tt>...). Note that "emulation mode" has been removed as of CUDA Toolkit Version 3.1. | ||

==CUDA model== | ==CUDA model== | ||

===Host=== | ===Host=== | ||

| Line 31: | Line 32: | ||

===Streaming Multiprocessor=== | ===Streaming Multiprocessor=== | ||

* Each SM has a register file, fast local (''shared'') memory, a cache for constant memory, an instruction cache (ROP), a multithreaded instruction dispatcher, and some number of [[#Stream Processor|Stream Processors]] (SPs). | * Each SM has a register file, fast local (''shared'') memory, a cache for constant memory, an instruction cache (ROP), a multithreaded instruction dispatcher, and some number of [[#Stream Processor|Stream Processors]] (SPs). | ||

** | ** 8K registers for compute capability <= 1.1, otherwise | ||

** | ** 16K for compute capability <= 1.3, otherwise | ||

** 32K for compute capability <= 2.1, otherwise | |||

** 64K through at least compute capability 3.5 | |||

* A group of threads which share a memory and can "synchronize their execution to coördinate accesses to memory" (use a [[barrier]]) form a '''block'''. Each thread has a ''threadId'' within its (three-dimensional) block. | * A group of threads which share a memory and can "synchronize their execution to coördinate accesses to memory" (use a [[barrier]]) form a '''block'''. Each thread has a ''threadId'' within its (three-dimensional) block. | ||

** For a block of dimensions <D<sub>x</sub>, D<sub>y</sub>, D<sub>z</sub>>, the threadId of the thread having index <x, y, z> is (x + y * D<sub>x</sub> + z * D<sub>y</sub> * D<sub>x</sub>). | ** For a block of dimensions <D<sub>x</sub>, D<sub>y</sub>, D<sub>z</sub>>, the threadId of the thread having index <x, y, z> is (x + y * D<sub>x</sub> + z * D<sub>y</sub> * D<sub>x</sub>). | ||

* Register allocation is performed per-block, and rounded up to the nearest | * Register allocation is performed per-block, and rounded up to the nearest | ||

** 256 registers per block for compute capability <= 1.1, otherwise | ** 256 registers per block for compute capability <= 1.1, otherwise | ||

** 512 registers per block for compute capability <= 1.3 | ** 512 registers per block for compute capability <= 1.3 | ||

* A group of blocks which share a kernel form a '''grid'''. Each block (and each thread within that block) has a ''blockId'' within its (two-dimensional) grid. | * A group of blocks which share a kernel form a '''grid'''. Each block (and each thread within that block) has a ''blockId'' within its (two-dimensional) grid. | ||

** For a grid of dimensions <D<sub>x</sub>, D<sub>y</sub>>, the blockId of the block having index <x, y> is (x + y * D<sub>x</sub>). | ** For a grid of dimensions <D<sub>x</sub>, D<sub>y</sub>>, the blockId of the block having index <x, y> is (x + y * D<sub>x</sub>). | ||

| Line 84: | Line 87: | ||

** Arithmetic intensity and parallelism are paramount! | ** Arithmetic intensity and parallelism are paramount! | ||

** Memory-bound kernels require sufficiently high ''occupancy'' (the ratio of concurrently-running warps to maximum possible concurrent warps (as applied, usually, to [[#Streaming Multiprocessor|SMs]])) to hide latency. | ** Memory-bound kernels require sufficiently high ''occupancy'' (the ratio of concurrently-running warps to maximum possible concurrent warps (as applied, usually, to [[#Streaming Multiprocessor|SMs]])) to hide latency. | ||

* No branch prediction or speculation | * No branch prediction or speculation. Full predication. | ||

{| border="1" | {| border="1" | ||

! Memory type | ! Memory type | ||

| Line 162: | Line 165: | ||

===Compute Capabilities=== | ===Compute Capabilities=== | ||

The original public CUDA revision was 1.0, implemented on the NV50 chipset corresponding to the GeForce 8 series. Compute capability, formed of a non-negative major and minor revision number, can be queried on CUDA-capable cards. All revisions thus far have been | The original public CUDA revision was 1.0, implemented on the NV50 chipset corresponding to the GeForce 8 series. Compute capability, formed of a non-negative major and minor revision number, can be queried on CUDA-capable cards. All revisions thus far have been fowards-compatible, though recent CUDA toolkits will not generate code for CC1 or 2. | ||

{| border="1" class="wikitable" | |||

! Resource | |||

! 1.0 SM | |||

! 1.1 SM | |||

! 1.2 SM | |||

! 1.3 SM | |||

! 2.0 SM | |||

! 2.1 SM | |||

! 3.0 SMX | |||

! 3.5 SMX | |||

! 7.0 SM | |||

! 7.5 SM | |||

|- | |||

|CUDA cores | |||

|8 | |||

|8 | |||

|8 | |||

|8 | |||

|32 | |||

|48 | |||

|192 | |||

|192 | |||

|64/32<br/>64/8 | |||

|64/2<br/>64/8 | |||

|- | |||

|Schedulers | |||

|1 | |||

|1 | |||

|1 | |||

|1 | |||

|2 | |||

|2 | |||

|4 | |||

|4 | |||

|4 | |||

|4 | |||

|- | |||

|Insts/sched | |||

|1 | |||

|1 | |||

|1 | |||

|1 | |||

|1 | |||

|2 | |||

|2 | |||

|2 | |||

|1 | |||

|1 | |||

|- | |||

|Threads | |||

|768 | |||

|768 | |||

|1K | |||

|1K | |||

|1536 | |||

|1536 | |||

|2K | |||

|2K | |||

|2K | |||

|1K | |||

|- | |||

|Warps | |||

|24 | |||

|24 | |||

|32 | |||

|32 | |||

|48 | |||

|48 | |||

|64 | |||

|64 | |||

|64 | |||

|32 | |||

|- | |||

|Blocks | |||

|8 | |||

|8 | |||

|8 | |||

|8 | |||

|8 | |||

|8 | |||

|16 | |||

|16 | |||

|32 | |||

|16 | |||

|- | |||

|32-bit regs | |||

|8K | |||

|8K | |||

|16K | |||

|16K | |||

|32K | |||

|32K | |||

|64K | |||

|64K | |||

|64K | |||

|64K | |||

|- | |||

|Examples | |||

|G80 | |||

|G9x | |||

|GT21x | |||

|GT200 | |||

|GF110 | |||

|GF10x | |||

|GK104 | |||

|GK110 | |||

|GV100 | |||

|TU10x | |||

|- | |||

|} | |||

{| border="1" | {| border="1" | ||

! Revision | ! Revision | ||

| Line 194: | Line 308: | ||

* 1024 threads per block and <tt>blockIdx.{x,y}</tt> values ranging through 1024. | * 1024 threads per block and <tt>blockIdx.{x,y}</tt> values ranging through 1024. | ||

* Larger texture references. | * Larger texture references. | ||

* PTX 2.0 | * ''PTX 2.0'' | ||

** Efficient uniform addressing (<tt>ldu</tt>) | ** Efficient uniform addressing (<tt>ldu</tt>) | ||

** Unified address space: <tt>isspacep</tt>/<tt>cvta</tt> | ** Unified address space: <tt>isspacep</tt>/<tt>cvta</tt> | ||

| Line 214: | Line 328: | ||

* 32 SFUs per SMX, 32 TFUs per ROP | * 32 SFUs per SMX, 32 TFUs per ROP | ||

* 4 warp schedulers per SMX, capable of issuing two instructions per clock | * 4 warp schedulers per SMX, capable of issuing two instructions per clock | ||

* PTX 3.0 | * Double-precision instructions can be paired with non-DP | ||

** Previously, double-precision instructions couldn't be paired with anything | |||

* ''PTX 3.0'' | |||

** <tt>madc</tt> and <tt>mad.cc</tt> instructions | ** <tt>madc</tt> and <tt>mad.cc</tt> instructions | ||

** Cubemaps and cubearrays for the <tt>tex</tt> instruction | ** Cubemaps and cubearrays for the <tt>tex</tt> instruction | ||

| Line 223: | Line 339: | ||

** DWARF debugging symbols support | ** DWARF debugging symbols support | ||

|- | |||

| 3.5 | |||

| | |||

* 255 registers per thread | |||

* "CUDA Dynamic Parallelism", the ability to spawn threads from within device code | |||

* ''PTX 3.1'' | |||

** A funnel shift instruction, <tt>shf</tt> | |||

** Loading read-only global data through the non-coherent texture cache, <tt>ld.global.nc</tt> | |||

** 64-bit atomic/reduction operators extended to {or, xor, and, integer min, integer max} | |||

** Mipmap type support | |||

** Indirect texture/surface support | |||

** Extends generic addressing to include the const state space | |||

|- | |||

| 7.0 | |||

| | |||

* ''PTX 6.3'' | |||

* Tensor cores | |||

* Independent thread scheduling | |||

|- | |||

| 7.5 | |||

| | |||

* ''PTX 6.4'' | |||

* Integer matrix multiplication in tensor cores | |||

|- | |- | ||

|} | |} | ||

==PTX== | |||

===Syntax Coloring=== | |||

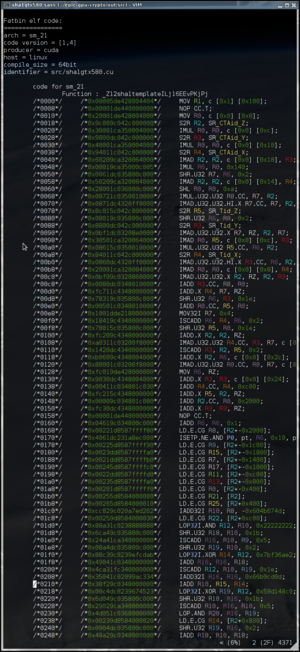

[[File:ptxcolor.png|thumb|right|PTX with syntax coloring]] | |||

I've got a [[vim]] syntax coloring file for PTX/NVIR/SASS at https://raw.github.com/dankamongmen/dankhome/master/.vim/syntax/nvir.vim. It operates by coloring all registers congruent to some integer mod 10 the same color: | |||

<pre>syn match asmReg0 "v\?R[0-9]*0\(\.B\|\.F\|\.U\?\(I\|L\)\|\([^0-9]\)\@=\)" | |||

syn match asmReg1 "v\?R[0-9]*1\(\.B\|\.F\|\.U\?\(I\|L\)\|\([^0-9]\)\@=\)" | |||

syn match asmReg2 "v\?R[0-9]*2\(\.B\|\.F\|\.U\?\(I\|L\)\|\([^0-9]\)\@=\)" | |||

syn match asmReg3 "v\?R[0-9]*3\(\.B\|\.F\|\.U\?\(I\|L\)\|\([^0-9]\)\@=\)" | |||

syn match asmReg4 "v\?R[0-9]*4\(\.B\|\.F\|\.U\?\(I\|L\)\|\([^0-9]\)\@=\)" | |||

syn match asmReg5 "v\?R[0-9]*5\(\.B\|\.F\|\.U\?\(I\|L\)\|\([^0-9]\)\@=\)" | |||

syn match asmReg6 "v\?R[0-9]*6\(\.B\|\.F\|\.U\?\(I\|L\)\|\([^0-9]\)\@=\)" | |||

syn match asmReg7 "v\?R[0-9]*7\(\.B\|\.F\|\.U\?\(I\|L\)\|\([^0-9]\)\@=\)" | |||

syn match asmReg8 "v\?R[0-9]*8\(\.B\|\.F\|\.U\?\(I\|L\)\|\([^0-9]\)\@=\)" | |||

syn match asmReg9 "v\?R[0-9]*9\(\.B\|\.F\|\.U\?\(I\|L\)\|\([^0-9]\)\@=\)" | |||

syn match asmPReg "P[0-9]\([0-9]*\)\(\.B\|\.F\|\.U\?\(I\|L\)\|\([^0-9]\)\@=\)" | |||

syn match asmBB "BB[0-9][0-9]*\(_\d\d*\)\?" | |||

syn match asmBBNew "BB-\d\d*" | |||

syn match nvirNT ".NEXT_TRUE.*" | |||

syn match nvirNF ".NEXT_FALSE.*" | |||

syn match hexconst "0x\x\+\(\.F\|\.U\?\(I\|L\)\)\?" | |||

syn match spreg "\(ctaid\|ntid\|tid\|nctaid\).\(x\|y\|z\)"</pre> | |||

==Building CUDA Apps== | ==Building CUDA Apps== | ||

===nvcc flags=== | ===nvcc flags=== | ||

* <tt>- | Pass flags to <tt>ptxas</tt> via -X: | ||

* <tt>-X -v</tt> displays per-thread register usage | |||

* <tt>-X -abi=no</tt> disables the PTX ABI, saving registers but taking away your stack | |||

* <tt>-dlcm={cg,cs,ca}</tt> modifies cache behavior for loads | |||

* <tt>-dscm={cw,cs}</tt> modifies cache behavior for stores | |||

===SDK's common.mk=== | ===SDK's common.mk=== | ||

This assumes use of the SDK's common.mk, as recommended by the documentation. | This assumes use of the SDK's common.mk, as recommended by the documentation. | ||

| Line 280: | Line 447: | ||

* A run time limit is activated by default if the device is being used to drive a display. | * A run time limit is activated by default if the device is being used to drive a display. | ||

* Please feel free to [mailto:nickblack@acm.org send me output!] | * Please feel free to [mailto:nickblack@acm.org send me output!] | ||

{| border="1" | {| border="1" | ||

! Device name | ! Device name | ||

| Line 285: | Line 454: | ||

! MP's | ! MP's | ||

! Cores | ! Cores | ||

! Shmem/block | ! Shmem/block | ||

! Reg/block | ! Reg/block | ||

! Warp size | ! Warp size | ||

! Thr/block | ! Thr/block | ||

! Texalign | ! Texalign | ||

! Clock | ! Clock | ||

| Line 297: | Line 464: | ||

! Shared maps? | ! Shared maps? | ||

|- | |- | ||

! COLSPAN=" | ! COLSPAN="13" style="background:#eebeb6;" | Compute capability 7.0 | ||

|- | |||

| Tesla V100 | |||

| 16GB | |||

| 84 | |||

| 5376/2688/672 | |||

| | |||

| | |||

| | |||

| | |||

| | |||

| 1.53GHz | |||

| Yes | |||

| No | |||

| Yes | |||

|- | |||

! COLSPAN="13" style="background:#8070D8;" | Compute capability 3.0 | |||

|- | |- | ||

| GeForce GTX 680 | | GeForce GTX 680 | ||

| Line 304: | Line 487: | ||

| 1536 | | 1536 | ||

| | | | ||

| | | | ||

| | | | ||

| Line 312: | Line 492: | ||

| | | | ||

| | | | ||

| Yes | |||

| No | | No | ||

| Yes | | Yes | ||

|- | |- | ||

! COLSPAN=" | ! COLSPAN="13" style="background:#ffdead;" | Compute capability 2.1 | ||

|- | |||

| GeForce GTX 560 Ti | |||

| | |||

| | |||

| | |||

| | |||

| | |||

| | |||

| | |||

| | |||

| | |||

| | |||

| | |||

| | |||

|- | |||

| GeForce GTX 550 Ti | |||

| | |||

| | |||

| | |||

| | |||

| | |||

| | |||

| | |||

| | |||

| | |||

| | |||

| | |||

| | |||

|- | |- | ||

| GeForce GTX 460 | | GeForce GTX 460 | ||

| Line 321: | Line 530: | ||

| 7 | | 7 | ||

| 224 | | 224 | ||

| 48k | | 48k | ||

| 32k | | 32k | ||

| 32 | | 32 | ||

| 1024 | | 1024 | ||

| 512b | | 512b | ||

| 1.35GHz | | 1.35GHz | ||

| | | Yes | ||

| No | | No | ||

| Yes | | Yes | ||

|- | |- | ||

! COLSPAN=" | | GeForce GTS 450 | ||

| | |||

| | |||

| | |||

| | |||

| | |||

| | |||

| | |||

| | |||

| | |||

| | |||

| | |||

| | |||

|- | |||

! COLSPAN="13" style="background:#ffdead;" | Compute capability 2.0 | |||

|- | |- | ||

| GeForce GTX 580 | | GeForce GTX 580 | ||

| Line 339: | Line 560: | ||

| 16 | | 16 | ||

| 512 | | 512 | ||

| | | | ||

| | | | ||

| Line 346: | Line 565: | ||

| 1024 | | 1024 | ||

| | | | ||

| | | 1.544GHz | ||

| | | Yes | ||

| No | | No | ||

| Yes | | Yes | ||

| Line 355: | Line 574: | ||

| 14 | | 14 | ||

| 448 | | 448 | ||

| 48k | | 48k | ||

| 32k | | 32k | ||

| 32 | | 32 | ||

| 1024 | | 1024 | ||

| 512b | | 512b | ||

| 1.15GHz | | 1.15GHz | ||

| | | Yes | ||

| No | | No | ||

| Yes | | Yes | ||

| Line 371: | Line 588: | ||

| 14 | | 14 | ||

| 448 | | 448 | ||

| 48k | | 48k | ||

| 32k | | 32k | ||

| 32 | | 32 | ||

| 1024 | | 1024 | ||

| 512b | | 512b | ||

| 1.15GHz | | 1.15GHz | ||

| | | Yes | ||

| No | | No | ||

| Yes | | Yes | ||

| Line 387: | Line 602: | ||

| 15 | | 15 | ||

| 480 | | 480 | ||

| | | | ||

| | | | ||

| Line 403: | Line 616: | ||

| 14 | | 14 | ||

| 448 | | 448 | ||

| | | | ||

| | | | ||

| Line 415: | Line 626: | ||

| | | | ||

|- | |- | ||

! COLSPAN=" | ! COLSPAN="13" style="background:#efefef;" | Compute capability 1.3 | ||

|- | |- | ||

| Tesla C1060 | | Tesla C1060 | ||

| Line 421: | Line 632: | ||

| 30 | | 30 | ||

| 240 | | 240 | ||

| 16384b | | 16384b | ||

| 16384 | | 16384 | ||

| 32 | | 32 | ||

| 512 | | 512 | ||

| 256b | | 256b | ||

| 1.30GHz | | 1.30GHz | ||

| Line 437: | Line 646: | ||

| 30 | | 30 | ||

| 240 | | 240 | ||

| 16384b | | 16384b | ||

| 16384 | | 16384 | ||

| 32 | | 32 | ||

| 512 | | 512 | ||

| 256b | | 256b | ||

| 1.24GHz | | 1.24GHz | ||

| Line 453: | Line 660: | ||

| 30 | | 30 | ||

| 240 | | 240 | ||

| 16384b | | 16384b | ||

| 16384 | | 16384 | ||

| 32 | | 32 | ||

| 512 | | 512 | ||

| 256b | | 256b | ||

| 1.48GHz | | 1.48GHz | ||

| Yes | | Yes | ||

| | | No | ||

| Yes | | Yes | ||

|- | |- | ||

| Line 469: | Line 674: | ||

| 30 | | 30 | ||

| 240 | | 240 | ||

| 16384b | | 16384b | ||

| 16384 | | 16384 | ||

| 32 | | 32 | ||

| 512 | | 512 | ||

| 256b | | 256b | ||

| 1.30GHz | | 1.30GHz | ||

| Line 485: | Line 688: | ||

| 27 | | 27 | ||

| 216 | | 216 | ||

| 16384b | | 16384b | ||

| 16384 | | 16384 | ||

| 32 | | 32 | ||

| 512 | | 512 | ||

| 256b | | 256b | ||

| 1.47GHz | | 1.47GHz | ||

| Line 497: | Line 698: | ||

| Yes | | Yes | ||

|- | |- | ||

! COLSPAN=" | ! COLSPAN="13" style="background:#efefef;" | Compute capability 1.2 | ||

|- | |- | ||

| GeForce GT 360M | | GeForce GT 360M | ||

| Line 503: | Line 704: | ||

| 12 | | 12 | ||

| 96 | | 96 | ||

| 16384b | | 16384b | ||

| 16384 | | 16384 | ||

| 32 | | 32 | ||

| 512 | | 512 | ||

| 256b | | 256b | ||

| 1.32GHz | | 1.32GHz | ||

| Line 519: | Line 718: | ||

| 2 | | 2 | ||

| 16 | | 16 | ||

| 16384b | | 16384b | ||

| 16384 | | 16384 | ||

| 32 | | 32 | ||

| 512 | | 512 | ||

| 256b | | 256b | ||

| 1.40GHz | | 1.40GHz | ||

| Line 535: | Line 732: | ||

| 12 | | 12 | ||

| 96 | | 96 | ||

| 16384b | | 16384b | ||

| 16384 | | 16384 | ||

| 32 | | 32 | ||

| 512 | | 512 | ||

| 256b | | 256b | ||

| 1.424GHz | | 1.424GHz | ||

| Line 547: | Line 742: | ||

| Yes | | Yes | ||

|- | |- | ||

! COLSPAN=" | ! COLSPAN="13" style="background:#efefef;" | Compute capability 1.1 | ||

|- | |- | ||

| ION | | ION | ||

| Line 553: | Line 748: | ||

| 2 | | 2 | ||

| 16 | | 16 | ||

| 16384b | | 16384b | ||

| 8192 | | 8192 | ||

| 32 | | 32 | ||

| 512 | | 512 | ||

| 256b | | 256b | ||

| 1.1GHz | | 1.1GHz | ||

| Line 569: | Line 762: | ||

| 2 | | 2 | ||

| 16 | | 16 | ||

| 16384b | | 16384b | ||

| 8192 | | 8192 | ||

| 32 | | 32 | ||

| 512 | | 512 | ||

| 256b | | 256b | ||

| 0.92GHz | | 0.92GHz | ||

| Line 585: | Line 776: | ||

| 16 | | 16 | ||

| 128 | | 128 | ||

| 16384b | | 16384b | ||

| 8192 | | 8192 | ||

| 32 | | 32 | ||

| 512 | | 512 | ||

| 256b | | 256b | ||

| 1.84GHz | | 1.84GHz | ||

| Line 601: | Line 790: | ||

| 16 | | 16 | ||

| 128 | | 128 | ||

| 16384b | | 16384b | ||

| 8192 | | 8192 | ||

| 32 | | 32 | ||

| 512 | | 512 | ||

| 256b | | 256b | ||

| 1.67GHz | | 1.67GHz | ||

| Line 617: | Line 804: | ||

| 8 | | 8 | ||

| 64 | | 64 | ||

| 16384b | | 16384b | ||

| 8192 | | 8192 | ||

| 32 | | 32 | ||

| 512 | | 512 | ||

| 256b | | 256b | ||

| 1.62GHz, | | 1.62GHz, | ||

| Line 634: | Line 819: | ||

| 2 | | 2 | ||

| 16 | | 16 | ||

| 16384b | | 16384b | ||

| 8192 | | 8192 | ||

| 32 | | 32 | ||

| 512 | | 512 | ||

| 256b | | 256b | ||

| 0.88GHz | | 0.88GHz | ||

| Line 650: | Line 833: | ||

| 16 | | 16 | ||

| 128 | | 128 | ||

| 16384b | | 16384b | ||

| 8192 | | 8192 | ||

| 32 | | 32 | ||

| 512 | | 512 | ||

| 256b | | 256b | ||

| 1.62GHz | | 1.62GHz | ||

| Line 666: | Line 847: | ||

| 4 | | 4 | ||

| 32 | | 32 | ||

| 16384b | | 16384b | ||

| 8192 | | 8192 | ||

| 32 | | 32 | ||

| 512 | | 512 | ||

| 256b | | 256b | ||

| 0.95GHz | | 0.95GHz | ||

| Line 682: | Line 861: | ||

| 1 | | 1 | ||

| 8 | | 8 | ||

| 16384b | | 16384b | ||

| 8192 | | 8192 | ||

| 32 | | 32 | ||

| 512 | | 512 | ||

| 256b | | 256b | ||

| 1.40GHz | | 1.40GHz | ||

Latest revision as of 01:33, 15 August 2019

Hardware

NVIDIA maintains a list of supported hardware. You'll need the "nvidia.ko" kernel module. On Debian, use the nvidia-kernel-dkms package to build a module appropriate for your kernel (and automatically rebuild it upon kernel upgrades). You can also download the nvidia-kernel-source and nvidia-kernel-common packages, unpack /usr/src/nvidia-kernel.tar.bz2, and run make-kpkg modules_image. Install the resulting .deb, and modprobe nvidia. You'll see something like this in dmesg output:

nvidia: module license 'NVIDIA' taints kernel. Disabling lock debugging due to kernel taint nvidia 0000:07:00.0: enabling device (0000 -> 0003) nvidia 0000:07:00.0: PCI INT A -> GSI 21 (level, low) -> IRQ 21 nvidia 0000:07:00.0: setting latency timer to 64 NVRM: loading NVIDIA UNIX x86_64 Kernel Module 190.53 Wed Dec 9 15:29:46 PST 2009

Once the module is loaded, CUDA should be able to find the device. See below for sample outputs. Each device has a compute capability, though this does not encompass all differentiated capabilities (see also deviceOverlap and canMapHostMemory...). Note that "emulation mode" has been removed as of CUDA Toolkit Version 3.1.

CUDA model

Host

- A host contains zero or more CUDA-capable devices (emulation must be used if zero devices are available).

- It can run multiple CUDA processes, each composed of one or more host threads.

- A given host thread can execute code on only one device at once.

- Multiple host threads can execute code on the same device.

Device

- A device packages a streaming processor array (SPA), a memory interface, and possibly memory (global memory. device memory).

- In CUDA terminology, an integrated (vs discrete) device does not have its own global memory.

- Specially-prepared global memory is designated constant memory, and can be cached.

- Pinned (locked) host memory avoids a bounce buffer, accelerating transfers.

- Larger one-time setup cost due to device register programming for DMA transfers.

- This memory will be unswappable -- allocate only as much as is needed.

- Pinned memory can be mapped directly into CUDAspace on integrated devices or in the presence of some IOMMUs.

- "Zero (explicit)-copy" interface (can never hide all bus delays)

- Write-combining memory (configured via MTRRs or PATs) avoids PCI snoop requirements and maximizes linear throughput

- Subtle side-effects; not to be used glibly or carelessly!

- Distributes work at block granularity to Texture Processing Clusters (TPCs).

Texture Processing Cluster

Streaming Multiprocessors (SMs) are grouped into TPCs. Each TPC contains some number of SMs and a single texture processing unit, including a few filters and a cache for texture memory. The details of these texture caches have not generally been publicized, but NVIDIA optimization guides confirm 1- and 2-dimensional spatial caching to be in effect.

Streaming Multiprocessor

- Each SM has a register file, fast local (shared) memory, a cache for constant memory, an instruction cache (ROP), a multithreaded instruction dispatcher, and some number of Stream Processors (SPs).

- 8K registers for compute capability <= 1.1, otherwise

- 16K for compute capability <= 1.3, otherwise

- 32K for compute capability <= 2.1, otherwise

- 64K through at least compute capability 3.5

- A group of threads which share a memory and can "synchronize their execution to coördinate accesses to memory" (use a barrier) form a block. Each thread has a threadId within its (three-dimensional) block.

- For a block of dimensions <Dx, Dy, Dz>, the threadId of the thread having index <x, y, z> is (x + y * Dx + z * Dy * Dx).

- Register allocation is performed per-block, and rounded up to the nearest

- 256 registers per block for compute capability <= 1.1, otherwise

- 512 registers per block for compute capability <= 1.3

- A group of blocks which share a kernel form a grid. Each block (and each thread within that block) has a blockId within its (two-dimensional) grid.

- For a grid of dimensions <Dx, Dy>, the blockId of the block having index <x, y> is (x + y * Dx).

- Thus, a given thread's <blockId X threadId> dyad is unique across the grid. All the threads of a block share a blockId, and corresponding threads of various blocks share a threadId.

- Each time the kernel is instantiated, new grid and block dimensions may be provided.

- A block's threads, starting from threadId 0, are broken up into contiguous warps having some warp size number of threads.

- Distributes out-of-order work at warp granularity across SPs.

- One program counter per warp -- divergence within warp leads to serialization.

- Divergence is trivially supported with a per-warp stack; warps reconverge at immediate post-dominators of branches

- Supports some maximum number of blocks and threads (~8 and ~768 on G80).

Block sizing

FIXME: review/verify this!

How tightly can we bound the optimal block size T, given a warp size w? The number of threads per block ought almost always be a multiple of w, both to:

- facilitate coalescing (coalescing requirements are related to w/2), and

- maximize utilization of SPs within warp-granular scheduling.

A SM has r registers and s words of shared memory, allocated per-block (see above). Assuming that w threads can be supported (i.e., that none requires more than r/w registers or s/w words of shared memory), the most obvious lower bound is w itself. The most obvious upper bound, assuming arbitrary available work, is the greatest multiple of w supported by hardware (and, obviously, the SDK). A block must be scheduled to an SM, which requires:

- registers sufficient to support the block,

- shared memory sufficient to support the block,

- that the total number of threads not exceed some limit t (likely bounding the divergence-tracking stacks), and

- that the total number of blocks not exceed some limit b (likely bounding the warp-scheduling complexity).

A given SM, then, supports T values through the minimum of {r/Thrreg, s/Blkshmem, and t}; as the block requires fewer registers and less shared memory, the upper bound converges to t.

Motivations for larger blocks include:

- freedom in the b dimension exposes parallelism until t <= b * T

- larger maximum possible kernels (an absolute limit exists on grid dimensions)

- better if data can be reused among threads (e.g. in tiled matrix multiply)

Motivations for smaller blocks include:

- freedom in the t dimension exposes parallelism until t >= b * T

- freedom in the r and s dimensions exposes parallelism until r >= b * T * Thrreg or s >= b * Blkshmem.

- cheaper per-block operations(?) (__syncthreads(), voting, etc)

- support for older hardware and SDKs

- fairer distribution among SMs and thus possibly better utilization, lower latency

- relative speedup tends to 0 as work grows arbitrarily on finite SMs

- relative speedup tends to 1/Fracpar on infinitely many SMs

We can now optimize occupancy for a specific {t, b, r and s}, assuming t to be a multiple of both w and b:

- Let T = t / b. T is thus guaranteed to be the smallest multiple of w such that t == b * T.

- Check the r and w conditions. FIXME: handle reduction

- FIXME: handle very large (external) kernels

Optimizing for ranges of hardware values is left as an exercise for the reader. Occupancy is only worth optimizing if the number of warps are insufficient to hide latencies. It might be possible to eliminate latencies altogether by reusing data throughout a block via shared memory; if the algorithm permits, this is almost certainly a net win. In that case, we likely want to maximize Blkshmem. A more advanced theory would incorporate the arithmetic intensity of a kernel...FIXME

Stream Processor

- In-order, multithreaded processor: memory latencies can be hidden only by TLP, not ILP.

- UPDATE Vasily Volkov's awesome GTC 2010 paper, "Better Performance at Lower Occupancy", destroys this notion.

- Really. Go read Vasily's paper. It's better than anything you'll find here.

- Arithmetic intensity and parallelism are paramount!

- Memory-bound kernels require sufficiently high occupancy (the ratio of concurrently-running warps to maximum possible concurrent warps (as applied, usually, to SMs)) to hide latency.

- UPDATE Vasily Volkov's awesome GTC 2010 paper, "Better Performance at Lower Occupancy", destroys this notion.

- No branch prediction or speculation. Full predication.

| Memory type | PTX name | Sharing | Kernel access | Host access | Cache location | Adddressable |

|---|---|---|---|---|---|---|

| Registers | .reg | Per-thread | Read-write | None | None | No |

| Special registers | .sreg | varies | Read-only | None | None | No |

| Local memory | .local | Per-thread | Read-write | None | None | Yes |

| Shared memory | .shared | Per-block | Read-write | None | None | Yes |

| Global memory | .global | Global | Read-write | Read-write | 1.x: None

2.0+: L1 on SM, L2 on TPC(?) |

Yes |

| Constant memory | .const | Per-grid | Read | Read-write | Stream multiprocessor | Yes |

| Texture memory | .tex | Global | Read | Read-write | Texture processing cluster | texture API |

| Parameters (to grids or functions) | .param | Per-grid (or per-thread) | Read-only (or read-write) | None | None | Yes (or restricted) |

Compute Capabilities

The original public CUDA revision was 1.0, implemented on the NV50 chipset corresponding to the GeForce 8 series. Compute capability, formed of a non-negative major and minor revision number, can be queried on CUDA-capable cards. All revisions thus far have been fowards-compatible, though recent CUDA toolkits will not generate code for CC1 or 2.

| Resource | 1.0 SM | 1.1 SM | 1.2 SM | 1.3 SM | 2.0 SM | 2.1 SM | 3.0 SMX | 3.5 SMX | 7.0 SM | 7.5 SM |

|---|---|---|---|---|---|---|---|---|---|---|

| CUDA cores | 8 | 8 | 8 | 8 | 32 | 48 | 192 | 192 | 64/32 64/8 |

64/2 64/8 |

| Schedulers | 1 | 1 | 1 | 1 | 2 | 2 | 4 | 4 | 4 | 4 |

| Insts/sched | 1 | 1 | 1 | 1 | 1 | 2 | 2 | 2 | 1 | 1 |

| Threads | 768 | 768 | 1K | 1K | 1536 | 1536 | 2K | 2K | 2K | 1K |

| Warps | 24 | 24 | 32 | 32 | 48 | 48 | 64 | 64 | 64 | 32 |

| Blocks | 8 | 8 | 8 | 8 | 8 | 8 | 16 | 16 | 32 | 16 |

| 32-bit regs | 8K | 8K | 16K | 16K | 32K | 32K | 64K | 64K | 64K | 64K |

| Examples | G80 | G9x | GT21x | GT200 | GF110 | GF10x | GK104 | GK110 | GV100 | TU10x |

| Revision | Changes |

|---|---|

| 1.1 |

|

| 1.2 |

|

| 1.3 |

|

| 2.0 |

|

| 2.1 |

|

| 3.0 |

|

| 3.5 |

|

| 7.0 |

|

| 7.5 |

|

PTX

Syntax Coloring

I've got a vim syntax coloring file for PTX/NVIR/SASS at https://raw.github.com/dankamongmen/dankhome/master/.vim/syntax/nvir.vim. It operates by coloring all registers congruent to some integer mod 10 the same color:

syn match asmReg0 "v\?R[0-9]*0\(\.B\|\.F\|\.U\?\(I\|L\)\|\([^0-9]\)\@=\)" syn match asmReg1 "v\?R[0-9]*1\(\.B\|\.F\|\.U\?\(I\|L\)\|\([^0-9]\)\@=\)" syn match asmReg2 "v\?R[0-9]*2\(\.B\|\.F\|\.U\?\(I\|L\)\|\([^0-9]\)\@=\)" syn match asmReg3 "v\?R[0-9]*3\(\.B\|\.F\|\.U\?\(I\|L\)\|\([^0-9]\)\@=\)" syn match asmReg4 "v\?R[0-9]*4\(\.B\|\.F\|\.U\?\(I\|L\)\|\([^0-9]\)\@=\)" syn match asmReg5 "v\?R[0-9]*5\(\.B\|\.F\|\.U\?\(I\|L\)\|\([^0-9]\)\@=\)" syn match asmReg6 "v\?R[0-9]*6\(\.B\|\.F\|\.U\?\(I\|L\)\|\([^0-9]\)\@=\)" syn match asmReg7 "v\?R[0-9]*7\(\.B\|\.F\|\.U\?\(I\|L\)\|\([^0-9]\)\@=\)" syn match asmReg8 "v\?R[0-9]*8\(\.B\|\.F\|\.U\?\(I\|L\)\|\([^0-9]\)\@=\)" syn match asmReg9 "v\?R[0-9]*9\(\.B\|\.F\|\.U\?\(I\|L\)\|\([^0-9]\)\@=\)" syn match asmPReg "P[0-9]\([0-9]*\)\(\.B\|\.F\|\.U\?\(I\|L\)\|\([^0-9]\)\@=\)" syn match asmBB "BB[0-9][0-9]*\(_\d\d*\)\?" syn match asmBBNew "BB-\d\d*" syn match nvirNT ".NEXT_TRUE.*" syn match nvirNF ".NEXT_FALSE.*" syn match hexconst "0x\x\+\(\.F\|\.U\?\(I\|L\)\)\?" syn match spreg "\(ctaid\|ntid\|tid\|nctaid\).\(x\|y\|z\)"

Building CUDA Apps

nvcc flags

Pass flags to ptxas via -X:

- -X -v displays per-thread register usage

- -X -abi=no disables the PTX ABI, saving registers but taking away your stack

- -dlcm={cg,cs,ca} modifies cache behavior for loads

- -dscm={cw,cs} modifies cache behavior for stores

SDK's common.mk

This assumes use of the SDK's common.mk, as recommended by the documentation.

- Add the library path to LD_LIBRARY_PATH, assuming CUDA's been installed to a non-standard directory.

- Set the CUDA_INSTALL_PATH and ROOTDIR (yeargh!) if outside the SDK.

- I keep the following in bin/cudasetup of my home directory. Source it, using sh's . cudasetup syntax:

CUDA="$HOME/local/cuda/" export CUDA_INSTALL_PATH="$CUDA" export ROOTDIR="$CUDA/C/common/" if [ -n "$LD_LIBRARY_PATH" ] ; then export "LD_LIBRARY_PATH=$LD_LIBRARY_PATH:$CUDA/lib64" else export "LD_LIBRARY_PATH=$CUDA/lib64" fi unset CUDA

- Set EXECUTABLE in your Makefile, and include $CUDA_INSTALL_PATH/C/common/common.mk

Unit testing

The DEFAULT_GOAL special variable of GNU Make can be used:

.PHONY: test

.DEFAULT_GOAL:=test

include $(CUDA_INSTALL_PATH)/C/common/common.mk

test: $(TARGET)

$(TARGET)

Libraries

Two mutually exclusive means of driving CUDA are available: the "Driver API" and "C for CUDA" with its accompanying nvcc compiler and runtime. The latter (libcudart) is built atop the former, and requires its libcuda library.

Undocumented Functions

The following unlisted functions were extracted from 3.0's libcudart.so using objdump -T:

00000000000097d0 g DF .text 000000000000020e Base __cudaRegisterShared 0000000000005410 g DF .text 0000000000000003 Base __cudaSynchronizeThreads 0000000000009e60 g DF .text 0000000000000246 Base __cudaRegisterVar 000000000000a0b0 g DF .text 0000000000000455 Base __cudaRegisterFatBinary 00000000000095c0 g DF .text 000000000000020e Base __cudaRegisterSharedVar 0000000000005420 g DF .text 0000000000000002 Base __cudaTextureFetch 000000000000a510 g DF .text 00000000000009dd Base __cudaUnregisterFatBinary 00000000000099e0 g DF .text 000000000000024e Base __cudaRegisterFunction 0000000000005820 g DF .text 000000000000001c Base __cudaMutexOperation 0000000000009c30 g DF .text 000000000000022e Base __cudaRegisterTexture

deviceQuery info

- Memory shown is that amount which is free; I've substituted total VRAM.

- Most CUDA devices can switch between multiple frequencies; the "Clock rate" output ought be considered accurate only at a given moment, and the outputs listed here are merely illustrative.

- Three device modes are currently supported:

- 0: Default (multiple applications can use the device)

- 1: Exclusive (only one application may use the device; other calls to cuCtxCreate will fail)

- 2: Disabled (no applications may use the device; all calls to cuCtxCreate will fail

- The mode can be set using nvidia-smi's -c option, specifying the device number via -g.

- A run time limit is activated by default if the device is being used to drive a display.

- Please feel free to send me output!

| Device name | Memory | MP's | Cores | Shmem/block | Reg/block | Warp size | Thr/block | Texalign | Clock | C+E? | Integrated? | Shared maps? |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Compute capability 7.0 | ||||||||||||

| Tesla V100 | 16GB | 84 | 5376/2688/672 | 1.53GHz | Yes | No | Yes | |||||

| Compute capability 3.0 | ||||||||||||

| GeForce GTX 680 | 1.5GB | 8 | 1536 | Yes | No | Yes | ||||||

| Compute capability 2.1 | ||||||||||||

| GeForce GTX 560 Ti | ||||||||||||

| GeForce GTX 550 Ti | ||||||||||||

| GeForce GTX 460 | 1GB | 7 | 224 | 48k | 32k | 32 | 1024 | 512b | 1.35GHz | Yes | No | Yes |

| GeForce GTS 450 | ||||||||||||

| Compute capability 2.0 | ||||||||||||

| GeForce GTX 580 | 1.5GB | 16 | 512 | 32 | 1024 | 1.544GHz | Yes | No | Yes | |||

| Tesla C2050 (*CB) | 3GB | 14 | 448 | 48k | 32k | 32 | 1024 | 512b | 1.15GHz | Yes | No | Yes |

| Tesla C2070 (*CB) | 6GB | 14 | 448 | 48k | 32k | 32 | 1024 | 512b | 1.15GHz | Yes | No | Yes |

| GeForce GTX 480 | 1536MB | 15 | 480 | |||||||||

| GeForce GTX 470 | 1280MB | 14 | 448 | |||||||||

| Compute capability 1.3 | ||||||||||||

| Tesla C1060 | 4GB | 30 | 240 | 16384b | 16384 | 32 | 512 | 256b | 1.30GHz | Yes | No | Yes |

| GeForce GTX 295 | 1GB | 30 | 240 | 16384b | 16384 | 32 | 512 | 256b | 1.24GHz | Yes | No | Yes |

| GeForce GTX 285 | 1GB | 30 | 240 | 16384b | 16384 | 32 | 512 | 256b | 1.48GHz | Yes | No | Yes |

| GeForce GTX 280 | 1GB | 30 | 240 | 16384b | 16384 | 32 | 512 | 256b | 1.30GHz | Yes | No | Yes |

| GeForce GTX 260 | 1GB | 27 | 216 | 16384b | 16384 | 32 | 512 | 256b | 1.47GHz | Yes | No | Yes |

| Compute capability 1.2 | ||||||||||||

| GeForce GT 360M | 1GB | 12 | 96 | 16384b | 16384 | 32 | 512 | 256b | 1.32GHz | Yes | No | Yes |

| GeForce 310 | 512MB | 2 | 16 | 16384b | 16384 | 32 | 512 | 256b | 1.40GHz | Yes | No | Yes |

| GeForce 240 GT | 1GB | 12 | 96 | 16384b | 16384 | 32 | 512 | 256b | 1.424GHz | Yes | No | Yes |

| Compute capability 1.1 | ||||||||||||

| ION | 256MB | 2 | 16 | 16384b | 8192 | 32 | 512 | 256b | 1.1GHz | No | Yes | Yes |

| Quadro FX 570 | 256MB | 2 | 16 | 16384b | 8192 | 32 | 512 | 256b | 0.92GHz | Yes | No | No |

| GeForce GTS 250 (*JR) | 1G | 16 | 128 | 16384b | 8192 | 32 | 512 | 256b | 1.84GHz | Yes | No | No |

| GeForce 9800 GTX | 512MB | 16 | 128 | 16384b | 8192 | 32 | 512 | 256b | 1.67GHz | Yes | Yes | Yes |

| GeForce 9600 GT | 512MB | 8 | 64 | 16384b | 8192 | 32 | 512 | 256b | 1.62GHz,

1.50GHz |

Yes | No | No |

| GeForce 9400M | 256MB | 2 | 16 | 16384b | 8192 | 32 | 512 | 256b | 0.88GHz | No | No | No |

| GeForce 8800 GTS 512 | 512MB | 16 | 128 | 16384b | 8192 | 32 | 512 | 256b | 1.62GHz | Yes | No | No |

| GeForce 8600 GT | 256MB | 4 | 32 | 16384b | 8192 | 32 | 512 | 256b | 0.95GHz | Yes | No | No |

| GeForce 9400M | 512MB | 1 | 8 | 16384b | 8192 | 32 | 512 | 256b | 1.40GHz | No | No | No |

(*CB) Thanks to Cameron Black for this submission! (*JR) Thanks to Javier Ruiz for this submission!

See Also

- The nouveau Wiki's CUDA page

- Honza Havlicek's guide to NVIDIA architecture

- Context switching and the PSWITCH instruction

- The gpuocelot project, hosted on Google Code.

- The NVIDIA GPU Developer Zone

- My CUBAR tools and reverse-engineered libcudest