
A Rack of One's Own


Revision as of 16:04, 12 March 2023

dankblog! 2023-03-11, 1709 EST, at the danktower

i've never owned "enterprise-class" hardware, nor even really worked with it much. i'm of the Google school: buy COTS by lots, count on it breaking, and work around the failures. xeons and (more recently) epycs never seemed price-competitive (though i seriously considered the former for my 2016 workstation build), especially given their reduced clocks. as amd's threadripper emerged, they weren't even holding it down on core count; only recently has sapphire rapids matched my 3970X's dotriacontacore setup (13th generation intel core processors topped out at 24 cores), and they still can't match that marvelous processor's LLC sizes. it just didn't make sense. the available motherboards furthermore always seemed a few years behind with regard to USB. if you didn't intend to take advantage of those fat support deals, the only value proposition seemed to be support for massive amounts of (ECC!) memory, and the server processors' heftier memory controllers (threadripper's cap at four memory controllers was the major reason why i went with a 3970X rather than the 64-core 3990X: how is one supposed to keep all those cores fed with only four DDR4 controllers?).
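to put rough numbers on that 3970X-vs-3990X question, here's a minimal sketch of theoretical peak DRAM bandwidth per core. it assumes four DDR4-3200 channels (the rated maximum for these parts) moving 8 bytes per transfer, split evenly across all cores; real sustained bandwidth is lower and the big LLC changes the picture, so treat these as ceilings, not measurements.

```python
# Theoretical peak DRAM bandwidth per core, 3970X vs 3990X.
# Assumption: four DDR4-3200 channels, each 64 bits (8 bytes) wide,
# shared evenly by every core. Sustained bandwidth will be lower.

def peak_channel_gbps(mts: int, bus_bytes: int = 8) -> float:
    """Peak bandwidth of one DDR channel in GB/s (10^9 bytes per second)."""
    return mts * 1e6 * bus_bytes / 1e9

def per_core_gbps(cores: int, channels: int = 4, mts: int = 3200) -> float:
    """Aggregate peak bandwidth divided evenly across all cores."""
    return channels * peak_channel_gbps(mts) / cores

if __name__ == "__main__":
    for name, cores in (("3970X", 32), ("3990X", 64)):
        print(f"{name}: {per_core_gbps(cores):.1f} GB/s per core")
```

same four channels, twice the mouths: the 3990X gets half the per-core ceiling of the 3970X.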

with the advent of 2023, however, my storage situation was becoming untenable. i've already pushed the workstation to its limits; it might be a monstrous CaseLabs Magnum T10, but it's still hardly suitable for more than 24 3.5" drives, and the lack of physical hotswap support for half those drives was really beginning to give me a rash. seagate exos drives are outstanding from a performance and price perspective, but they're loud little fuckers, and especially during a zfs scrub or resilver they were pretty annoying in chorus (watercooling has otherwise silenced my workstation). with another hot atlanta summer in the post, i didn't look forward to keeping all 24 drives below the 60℃ mark in this configuration. furthermore, my current dayjob project benefits greatly from DDIO, present on xeons of the second and later generations (essentially, DDIO allows PCIe devices to stream directly into the LLC of the socket to which they're attached, bypassing DRAM entirely). i loathe lacking local access to hardware i'm using at work almost as much as i do actually visiting the office; call me old-fashioned, but i want to be able to get my hands on the pieces slinging my bits around, and more importantly to be able to experiment with system firmware settings and topologies. i additionally needed at least three 10G+ nodes in my local network, and two 100G+ nodes.

finally, my wife had resigned her position, and i hoped to fill the hole she'd ripped from my heart with expensive, heavy computing equipment, ideally racked. it could sit in my living room where i'd once envisioned a growing family laughing and warming my soul such that i no longer desired to end all humanity. hah! fuck me, i guess!

i picked up a rack, PDU, and (kinda crappy) switch off reddit's r/homelabsales for $550, delivered directly to my high-rise by a rural fellow with a truck. a SuperMicro 8048B-TRFT complete with X10QBi quadsocket motherboard and (among other things) 4x E7-4850 v2 xeons and their crappy 2.3GHz base clocks ran $999 off ebay plus $300 freight shipping. i had it delivered to my girlfriend's house to save about $40 on sales tax because i'm a cheap bastard. with a friend's help, i got it racked, and turned it on the first time in december 2022.

christ on a pogo stick, it was LOUD. the noise came from the four PWS-1K62P-1R PSUs, the three 10Krpm 80mm fans on the back, and the four 9Krpm 92mm fans in the front. any one of these items rated 81dBA, and together they generated a skull-rattling 91.5dBA, a noise level comparable to a subway station (by the magic of decibel addition, silencing any single source barely dented the total, and any given one was still about as loud as a leafblower, so i needed to address all of them if i intended to address any of them). this was unquestionably too much to work next to, and would likely cause irreparable hearing damage over just a few hours. so my first steps addressed that problem. the PSUs were replaced with four silent PWS-1K28P-SQs, dropping output from 1620W to 1280W each, still more than sufficient for my needs. noctua chromax.black NF-A9s went in the 92mm slots, and NF-A8s in the back 80mm bays. at 25mm, these were significantly less chonky than the 35mm San Ace/Nidec fans they replaced; at 1800rpm (technically closer to 1000rpm for silence), their cooling capability was far inferior to the 10Krpm monsters (see my PC Fans article for basic fan equations: tl;dr airflow grows linearly with rpm, pressure quadratically). i had to shave down the NF-A8s to get them to fit, but eventually i had all seven fans replaced. i threw a slim (and PWMless, wtf?) cooler master 92mm on the side to cool the AOM-X10QBi-A I/O card housing my 2x Intel X540 10Gbps interfaces, which was running well over 90℃ in the stock configuration. the machine was now silent. unfortunately, upon my first -j96 kernel build, it also now overheated, engaging thermal throttling and a horrendous alarm.
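the decibel arithmetic above, plus the fan affinity relations mentioned in passing, fit in a few lines of python. this is a minimal sketch assuming eleven incoherent sources at 81dBA each (four PSUs, three rear fans, four front fans, as rated above). the affinity ratios strictly describe one fan at two speeds, so using them to compare the Noctuas against the San Ace/Nidec units is a rough guide at best.

```python
from __future__ import annotations

import math

def db_sum(levels_db: list[float]) -> float:
    """Incoherent sum of sound levels: 10 * log10(sum(10^(L/10)))."""
    return 10 * math.log10(sum(10 ** (level / 10) for level in levels_db))

def affinity(rpm_new: float, rpm_old: float) -> tuple[float, float]:
    """Fan affinity laws for the same fan at a new speed:
    airflow scales with rpm, static pressure with rpm squared."""
    ratio = rpm_new / rpm_old
    return ratio, ratio ** 2

if __name__ == "__main__":
    sources = [81.0] * 11  # 4 PSUs + 3 rear fans + 4 front fans, 81 dBA each
    print(f"all eleven:  {db_sum(sources):.1f} dBA")
    print(f"one removed: {db_sum(sources[1:]):.1f} dBA")
    flow, pressure = affinity(1000, 10000)
    print(f"at 1000rpm vs 10Krpm: airflow x{flow:.2f}, pressure x{pressure:.2f}")
```

the sum lands around 91.4dBA, and pulling any one source only drops it to 91dBA, which is why fixing half the noisemakers would have been pointless. meanwhile a tenth the speed means a tenth the airflow and a hundredth the pressure.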

i'd hoped not to require watercooling, but with that dream dashed i set to work. the idea of in-case radiators was laughable, and anyway why have a rack if you're not gonna stuff it with shit? for a moment i considered immersion cooling, but due to other projects i already had two MO-RA3 420x420mm external radiators sitting around unused, whereas i had neither knowledge of nor hardware for immersion. there aren't very many LGA2011-compatible waterblocks; i was hoping for HEATKILLER IVs, but neither PerformancePCs nor TitanRig seem to be actually stocking parts as of late, so i settled for Alphacool Eisblock XPX Pro Aurora waterblocks at $50 a throw. as it turned out, i hardly needed the capability of the HEATKILLERs (my processors are rated at a mere 105W), so this was a win cashwise, and the blocks boast lovely ARGB. i went to install the XPXs, only to discover that the squarish LGA2011 socket has a rectangular cousin, the "LGA2011-Narrow", which despite its physical incompatibility apparently didn't rate a new socket designation. i raged skyward for a few moments, and set to designing custom LGA2011-Narrow mounts in OpenSCAD. i printed them up on my SLA Elegoo Saturn, and (miracle of miracles!) they worked on the first try. that's the power of actually measuring things instead of just trying to eyeball millimeter distances, i guess! i then suffered the first of three major leaks, all of them due to shoddy manufacturing on some no-name G¼ compression fittings. no matter where in my loop i employed them, no matter how solid the connections looked to the eye, these pieces of shit lived to spray neon green coolant all over my electronics, my rugs, and myself. i was stupidly ready to give them one more try (see above regarding cheap bastardhood), when i noticed they didn't even have fucking o-rings. hell, even Challenger had goddamn o-rings...until it didn't, anyway. into the trash they went, and a week later i had a phat sack of chungal (chungusy? chungy? chungesque perhaps?) Koolance Blacks, which have served without fail since installation.

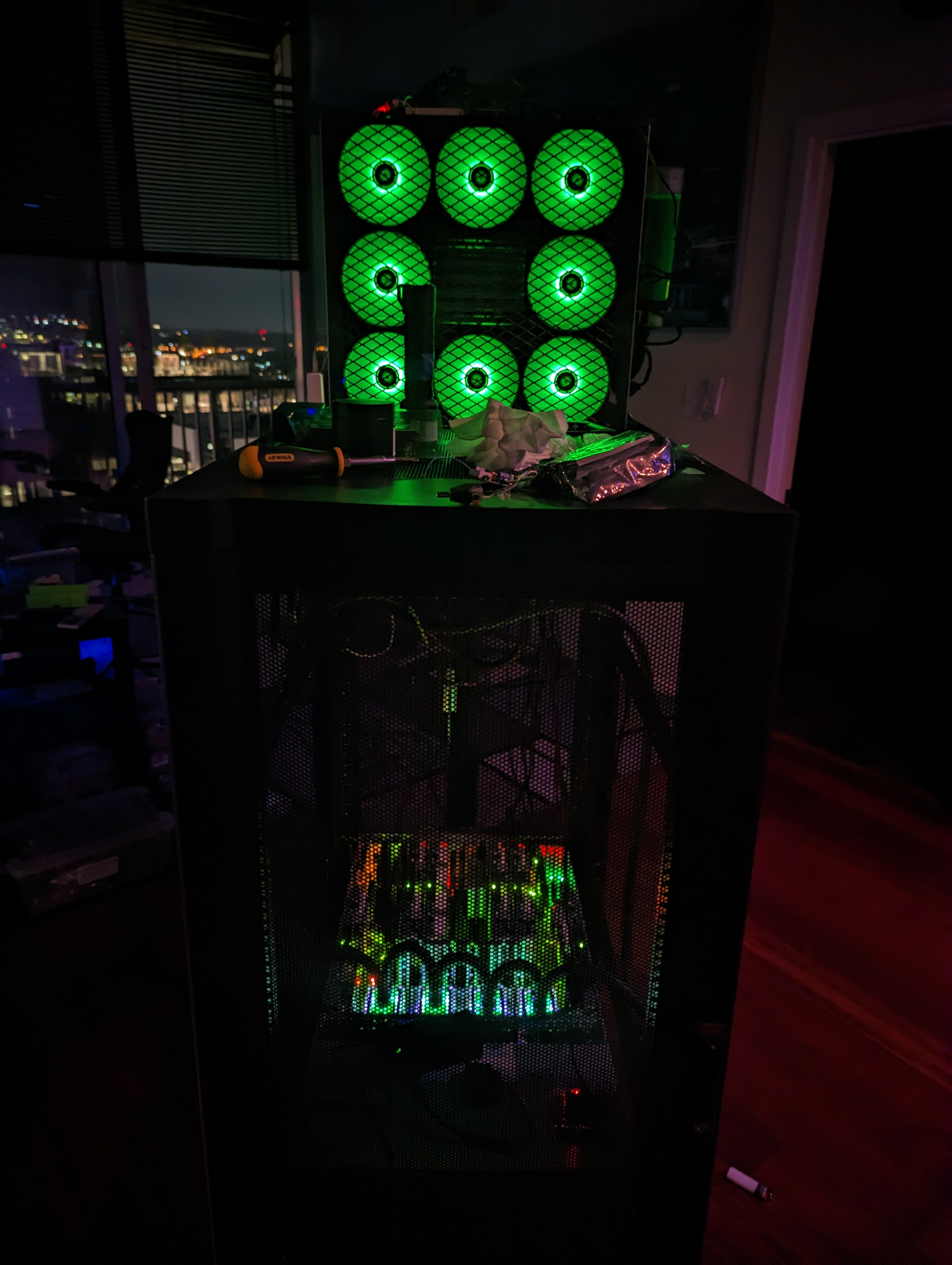

9x Arctic P14s on the MO-RA at 1200rpm plus an EKWB Dual XTOP with 2x D5s keep my ~3L of coolant barely suprambient, moving fluid at about 2.2Lpm at 4Krpm of pumping, with the four Xeons below 30℃ at idle, all of it silent. w00t! i'm using an ESP8266-based control, similar to my existing inaMORAta solution (but slightly improved). three sets of Koolance Black QD4 quick disconnects, an XCPC filter, and an EKWB Quantum Torque drainage fitting ensure loop maintainability across almost six meters of EKWB ZMT soft tubing. distilled water plus three bottles of EKWB Acid Green CryoFuel concentrate and a Singularity Computing Protium reservoir complete the successful cooling story. i've drilled two ¾" (technically 20mm) holes through the roof through which the ZMT protrudes, though i'm likely to replace that steel with glass or Lexan so that the internals are visible (i've got a CODI6 lighting up the Aurora LEDs, but it's currently disabled, which is unfortunate as they look fucking awesome).
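how far above ambient the loop sits is down to the radiators, but the coolant's temperature rise across the blocks falls straight out of heat load and flow rate: ΔT = P / (ṁ·c_p). a minimal sketch, assuming all four Xeons at their full 105W TDP and treating the coolant as plain water (density ~1kg/L, c_p ~4186 J/(kg·K)); the CryoFuel mix will differ slightly.

```python
# Steady-state coolant temperature rise across the heat sources:
#   dT = P / (m_dot * c_p)
# Assumptions: coolant behaves like water; heat load is the full CPU TDP.

WATER_CP = 4186.0      # J/(kg*K), approximate specific heat of water
WATER_DENSITY = 1.0    # kg/L, approximate density of water

def coolant_delta_t(heat_w: float, flow_lpm: float,
                    cp: float = WATER_CP, density: float = WATER_DENSITY) -> float:
    """Temperature rise (K) of coolant absorbing heat_w watts at flow_lpm L/min."""
    mass_flow = flow_lpm * density / 60.0   # L/min -> kg/s
    return heat_w / (mass_flow * cp)

if __name__ == "__main__":
    cpu_load_w = 4 * 105   # four E7-4850 v2s at rated TDP
    print(f"{coolant_delta_t(cpu_load_w, 2.2):.1f} K rise at 2.2 L/min")
```

at 2.2Lpm that's under 3K of rise across the whole CPU side of the loop, which suggests flow isn't the limiting factor; radiator area and fan speed decide the rest.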

with that worked out, 'twas time to stuff the chassis like a motherfucking Caravan (as lil' wayne did before me). the motherboard offers eight slots for X10QBi-MEM1 memory boards supporting 12 DDR3 DIMMs each, 4 PCIe 3.0 x16 slots, and 7 PCIe 3.0 x8 slots, but three of the latter slots prevent 2 memory boards from being used. two memory boards map to each NUMA zone, and any given socket requires locally-connected memory to function. the two boards contraindicated by the three x8 slots map to zones 2 and 3 (of 4), so each of those zones still retains one board. six boards of 12x 32GiB DIMMs each mean a maximum of 2304GiB RAM if all PCIe slots are to be used (though the real hit comes in terms of bandwidth, not capacity). well, 2.25TiB is still almost 10x as much memory as i've ever had in a machine, so it seemed fine for now. 72x 32GiB ECC DIMMs can be had on ebay for less than a kilodollar, and boom, after learning the difference between LRDIMMs and RDIMMs and what 4Rx4 means in a DIMM context i was live with over two terabytes of DDR3, and eleven PCIe slots supporting a total of 120 PCIe 3.0 lanes (the dual-port Intel X540 and BPN-SAS-846A SAS3 controller used dedicated slots). how to fill them?
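the memory and lane bookkeeping above can be checked mechanically. a minimal sketch, assuming the layout as described here (not cross-checked against Supermicro's manual): 8 memory-board slots, 2 per NUMA zone, one board in each of zones 2 and 3 blocked by the x8 cards, and 4 x16 plus 7 x8 PCIe 3.0 slots. the names are illustrative only.

```python
from __future__ import annotations

# X10QBi memory and PCIe arithmetic, per the layout described in the text.
ZONES = 4
BOARDS_PER_ZONE = 2
BLOCKED_BOARDS = {2: 1, 3: 1}   # zone -> memory boards lost to the x8 cards
DIMMS_PER_BOARD = 12
DIMM_GIB = 32

def boards_per_zone() -> dict[int, int]:
    """Usable memory boards in each NUMA zone after the blocked slots."""
    return {z: BOARDS_PER_ZONE - BLOCKED_BOARDS.get(z, 0) for z in range(1, ZONES + 1)}

def memory_gib(boards: int) -> int:
    """Total capacity with every board fully populated."""
    return boards * DIMMS_PER_BOARD * DIMM_GIB

def total_lanes(slots: dict[int, int]) -> int:
    """slots maps link width (lanes) to the number of slots of that width."""
    return sum(width * count for width, count in slots.items())

if __name__ == "__main__":
    zones = boards_per_zone()
    # every socket needs local memory, so no zone may drop to zero boards
    assert all(n >= 1 for n in zones.values())
    boards = sum(zones.values())
    gib = memory_gib(boards)
    print(f"{boards} boards, {boards * DIMMS_PER_BOARD} DIMMs, {gib} GiB ({gib / 1024} TiB)")
    print(f"{total_lanes({16: 4, 8: 7})} PCIe 3.0 lanes")
```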

there are disappointingly no m.2 slots on the X10QBi, so four x8 slots were paired up with chinesium PCBs to support 2x Intel 750 U.2 SSDs + 2x Samsung 980 M.2 SSDs, all of them NVMe (the 980s do not appear to be afflicted by the same firmware flaw which has proved so fatal for the 980 PROs, huzzah). a similarly no-name USB 3.2 controller and an Intel AX200 WiFi6 provided valuable HID and wireless expansion capability (though i'm likely to remove the latter, read on). in went a Mellanox ConnectX-4 single-port 100Gbps (most CX4s are 50Gbps or below, but several models boast the full QSFP28 goodness, though only in a single port), directly linked with armored 850nm OM4 fiber to another CX4 in my gateway. a Chelsio T320 added another 2x 10Gbps ports, linking SFP+ to my workstation and gateway with fiber (the existing X540 connects to same using Cat6E). yes, before you ask, this actually is all necessary for running some of my code and experiments. but it was incomplete...$160 later, i have two Tesla K80s headed my way from ebay for a total of 9984 Kepler CUDA cores, 48GiB of GDDR5, and 960GB/s of bandwidth to same. the enhanced chonk of the Teslas means i'll have to give up two x8 links; i intend to sacrifice the AX200, as i can easily throw a USB wifi adapter onto the hub i've affixed with Command strips to the inside of my rack (one other x8 is already free).

the beast at this point will still consume less than a kilowatt at full juice. i have no idea why the chassis ships with 1620W supplies, unless perhaps SAS drives consume ridiculous amounts of power? which, speaking of storage...

the original point of this hoss was to get hard drives out of my workstation, and it was time they went. sixteen 18TB exosen were pulled from the workstation and joined eight more virgin 18TBs. the result is 3x octadrive raidz2 vdevs, each boasting 144TB raw and 108TB usable, for a total of 324TB accessible at any given time. at full jam, this is about another 200W of mixed 5V+12V (see "The Drive Problem" for more details), and generates the system's only noise (though that'll change once the K80s arrive). my office is now several degrees cooler, a happy change i thoroughly welcome.
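the raidz2 capacity math is easy to sanity-check: each raidz2 vdev gives up two drives' worth of space to parity. a minimal sketch that ignores ZFS metadata, padding, and slop space (so real usable space comes in somewhat lower), and also converts to the binary TiB units zfs tools actually report.

```python
# Usable capacity of raidz vdevs, ignoring ZFS metadata, padding, and slop
# space: each vdev loses `parity` drives' worth of space (2 for raidz2).

def raidz_usable_tb(drives: int, drive_tb: float, parity: int = 2) -> float:
    """Usable decimal TB of one raidz vdev."""
    if drives <= parity:
        raise ValueError("need more drives than parity devices")
    return (drives - parity) * drive_tb

def tb_to_tib(tb: float) -> float:
    """Decimal terabytes (10^12 bytes) to binary tebibytes (2^40 bytes)."""
    return tb * 1e12 / 2 ** 40

if __name__ == "__main__":
    vdevs, width, size_tb = 3, 8, 18
    raw = vdevs * width * size_tb
    usable = vdevs * raidz_usable_tb(width, size_tb)
    print(f"raw {raw} TB, usable {usable} TB (~{tb_to_tib(usable):.1f} TiB)")
```

so the 324TB figure shows up as a bit under 295TiB before ZFS takes its own cut.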

finally, i added a Geiger counter, because i'm sexually attracted to Geiger counters.

so, that's the story of my living room supercomputer. 48 watercooled Xeon cores (96 threads), 9984 CUDA cores, 2.25TiB DDR3, 2.8TB NVMe SSD, 324TB SATA3 rust, 280Gb/s networking on a mix of fiber and copper, and 4x 80PLUS Platinum 1280W PSUs, drawing a maximum of ~1200W. the entire rack (machine, lighting, cooling, and all externals) consumes under 600W most of the time:

do you think this is some kind of game, son?

previously: "transfiguration" 2023-02-11