
CUBAR


Latest revision as of 23:53, 9 July 2010

CUDA (and General-Purpose Graphics Processing Unit programming in general) is rapidly becoming a mainstay of high-performance computing. As CUDA and OpenCL move off of the workstation, and into the server -- off of the console, and into the cluster -- these systems become critical parts of the associated trusted computing base, and their security becomes correspondingly important. Even ignoring the issue of multiuser security, the properties of isolation and (to a lesser extent) confidentiality are important for debugging, profiling and reproducibility. I've authored the CUBAR set of tools to investigate the security properties -- primarily the means and parameters of memory protection, and the division of protection between soft- and hardware -- of CUDA on NVIDIA hardware since the G80 architecture. This was essential information in the attempt to implement an open source CUDA software stack (see libcudest).

See our 2010 paper, "My Other Computer is Your GPU."

Questions

Memory details

- What address translations, if any, are performed?

- If address translation is performed, can physical memory be multiply aliased?

- How are accesses affected by use of incorrect state space affixes?

  - Compute Capability 2.0 introduces unified addressing, but still supports modal addressing

- How do physical addresses correspond to distinct memory regions?

From Lindholm et al.'s "NVIDIA Tesla: A Unified Graphics and Computing Architecture":

> The DRAM memory data bus width is 384 pins, arranged in six independent partitions of 64 pins each. Each partition owns 1/6 of the physical address space. The memory partition units directly enqueue requests. They arbitrate among hundreds of in-flight requests from the parallel stages of the graphics and computation pipelines. The arbitration seeks to maximize total DRAM transfer efficiency, which favors grouping related requests by DRAM bank and read/write direction, while minimizing latency as far as possible. The memory controllers support a wide range of DRAM clock rates, protocols, device densities, and data bus widths. A single hub unit routes requests to the appropriate partition from the nonparallel requesters (PCI-Express, host and command front end, input assembler, and display). Each memory partition has its own depth and color ROP units, so ROP memory traffic originates locally. Texture and load/store requests, however, can occur between any TPC and any memory partition, so an interconnection network routes requests and responses. All processing engines generate addresses in a virtual address space. A memory management unit performs virtual to physical translation. Hardware reads the page tables from local memory to respond to misses on behalf of a hierarchy of translation look-aside buffers spread out among the rendering engines.

Driver details

- Is a CUDA context a true security capability?

- Can a process modify details of the contexts it creates?

- Can a process transmit its contexts to another? Will they persist if the originating process exits?

- Can a process forge another process's contexts on its own?

Protection

- What mechanisms, if any, exist to protect memory? At what granularities (of address and access) do they operate?

  - What about registers? CUDA doesn't do traditional context switches due to register preallocation.

- How is memory protection split across hardware, kernelspace, and userspace?

  - Any userspace protection can, of course, be trivially subverted

- Are code and data memories separated (a Harvard architecture), or unified (Von Neumann architecture)?

  - In the case of Von Neumann or Modified Harvard, is there execution protection?

- What memories, if any, are scrubbed between kernels' execution?

- How many different regions can be tracked? How many contexts? What behavior exists at these limits?

Variation

- Have these mechanisms changed over various hardware?

  - The "Fermi" hardware (Compute Capability 2.0) adds unified addressing and caches for global memory. Effects?

- Have these mechanisms changed over the course of various driver releases?

- Open source efforts (particularly the nouveau project) are working on their own drivers.

- What needs to be addressed by this software?

- How is the situation affected by multiple devices, whether in an SLI/CrossFire setup or not?

Accountability

- What forensic data, if any, is created by typical CUDA programs? Adversarial programs? Broken programs?

- What relationship exists between CPU processes and GPU kernels?

Experiments

Memory space exploration

Probe memory via attempts to read, write and execute various addresses, including:

- those unallocated within the probing context,

- those unallocated by any running context, and

- those unallocated by any existing context.

Probe addresses using the various state space affixes, and using Compute Capability 2.0's unified addressing.

Context exploration

Determine whether CUDA contexts can be moved or shared between processes:

- fork(2) and call cudaMalloc(3) in the child without creating a new context

  - If this works, see whether the change is reflected in the parent process

  - Ensure that PPID isn't just being checked (dubious, but possible) by fork(2)ing twice

- Transmit the CUcontext body to another process via IPC or the filesystem, and repeat the tests
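The double-fork variant of this experiment can be sketched without any CUDA calls at all. What follows is a minimal sketch, not CUBAR code: `run_in_grandchild`, `attempt_fn` and `parent_is` are hypothetical names, and the "attempt" stands in for whatever is actually tried against the inherited context (a cudaMalloc() call, say). The intermediate child does nothing but fork, wait and relay the status, so the grandchild's PPID is never the process that created the context.

```c
#include <assert.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Hypothetical stand-in for the real experiment (e.g. an allocation against
 * the inherited context). Returns a small status code in 0..255. */
typedef int (*attempt_fn)(void *arg);

/* Wait for pid; return its exit code, or -1 if it did not exit normally. */
static int reap(pid_t pid)
{
    int status;
    if (waitpid(pid, &status, 0) != pid)
        return -1;
    return WIFEXITED(status) ? WEXITSTATUS(status) : -1;
}

/* Run attempt() in a grandchild of the caller and return its status code.
 * Returns -1 if a fork fails in the caller or a child dies abnormally. */
int run_in_grandchild(attempt_fn attempt, void *arg)
{
    assert(attempt);
    pid_t child = fork();
    if (child < 0)
        return -1;
    if (child == 0) {
        /* Intermediate child: fork again, then relay the result. */
        pid_t grandchild = fork();
        if (grandchild < 0)
            _exit(255);
        if (grandchild == 0)
            _exit(attempt(arg) & 0xff);
        int rc = reap(grandchild);
        _exit(rc < 0 ? 255 : rc);
    }
    return reap(child);
}

/* Example attempt: report 1 if our parent is the given process, else 0. */
int parent_is(void *arg)
{
    return getppid() == *(pid_t *)arg;
}
```

Calling `run_in_grandchild(parent_is, &self)` with `self = getpid()` shows the point of the exercise: the grandchild sees the intermediate child as its parent, so a check keyed on PPID alone would treat it as unrelated to the context's creator.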

Determine how many contexts can be created across a process, and across the system.

Code

- GitHub project: [dankamongmen:cubar](http://github.com/dankamongmen/cubar)

Building

Clone the git repository. Define (either via shell export or within a new file Makefile.local):

- NVCC to point at your nvcc binary (from the CUDA Toolkit)

- CUDA to point at your cuda-dev root

Tools

CUBAR tools

Only those tools which launch CUDA kernels use the Runtime API. They might be converted to the Driver API.

Driver API

- cudaminimal - The minimal possible CUDA program (a call to cuInit()), used for strace(1) etc.

- cudapinner - Allocates and pins (locks) all possible shared (system) memory, using the minimum necessary number of distinct allocations.

- cudaspawner - Determines the maximum number of CUcontexts supported by a device, optionally applying memory pressure proportional to n.

- cudastuffer - Allocates all possible memory on a device, using the minimum necessary number of distinct allocations.
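One plausible way to fill a memory with few allocations is a greedy descent: ask for the largest size, keep asking while it succeeds, and halve on failure. The sketch below is an assumption about strategy, not a description of how cudastuffer or cudapinner are actually written, and it only approximates the fewest allocations. The allocator is a hypothetical callback (`try_alloc_fn`) so the logic can run without a GPU; a real tool would wrap something like cuMemAlloc() behind it. `mock_device` and `mock_alloc` exist purely to exercise it.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical allocator hook: returns nonzero and keeps the allocation on
 * success, returns zero on failure. */
typedef int (*try_alloc_fn)(size_t bytes, void *state);

struct stuff_result {
    size_t total_bytes;  /* sum of all successful allocations */
    size_t allocations;  /* number of successful allocations */
};

/* Greedily allocate until nothing of at least `smallest` bytes succeeds.
 * Starts at `largest` bytes and halves the request size on each failure. */
struct stuff_result stuff(try_alloc_fn try_alloc, void *state,
                          size_t largest, size_t smallest)
{
    struct stuff_result r = { 0, 0 };
    assert(try_alloc && smallest > 0 && largest >= smallest);
    for (size_t size = largest; size >= smallest; ) {
        if (try_alloc(size, state)) {
            r.total_bytes += size;   /* same size may succeed again */
            r.allocations++;
        } else {
            size /= 2;               /* too big for what is left */
        }
    }
    return r;
}

/* Mock device with a fixed byte budget, used to exercise stuff(). */
struct mock_device {
    size_t free_bytes;
};

int mock_alloc(size_t bytes, void *state)
{
    struct mock_device *dev = state;
    if (bytes > dev->free_bytes)
        return 0;
    dev->free_bytes -= bytes;
    return 1;
}
```

Against a mock budget of 1000 bytes with `largest = 1024` and `smallest = 1`, this takes 512, 256, 128, 64, 32 and 8 bytes in turn. Real devices have allocation granularities and fragmentation that the mock ignores.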

Runtime API

- cudadump - Driver for cudaranger and sanity checker (FIXME: ought to be split up into two or more tools). For each device, it:

  - verifies that the constant memory region can be read in its entirety by a CUDA kernel

  - explores the virtual address space in its entirety via calling cudaranger with progressively smaller ranges in the case of access failures

- cudaranger - Attempts to perform selectable memory accesses across the specified virtual address range of a device. Calculates the number of non-zero words.
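The "progressively smaller ranges" search amounts to a bisection over the address space. Here is a minimal sketch of that idea, assuming a probe that either succeeds over a whole range or fails without saying where. The names (`explore`, `range_probe_fn`, `mock_window`) are hypothetical, and the probe is a plain callback standing in for a cudaranger run; the actual splitting policy in cudadump may differ.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical probe: returns nonzero if every unit in [lo, hi) could be
 * accessed, zero if any access failed. */
typedef int (*range_probe_fn)(uint64_t lo, uint64_t hi, void *state);

/* Probe [lo, hi). On failure, split the range in half and recurse, giving up
 * on a failing range once it is no larger than `grain` units. Returns the
 * number of units found to be accessible. */
uint64_t explore(range_probe_fn probe, void *state,
                 uint64_t lo, uint64_t hi, uint64_t grain)
{
    assert(probe && grain > 0 && lo <= hi);
    if (lo == hi)
        return 0;
    if (probe(lo, hi, state))
        return hi - lo;              /* whole range is accessible */
    if (hi - lo <= grain)
        return 0;                    /* smallest range, still failing */
    uint64_t mid = lo + (hi - lo) / 2;
    return explore(probe, state, lo, mid, grain)
         + explore(probe, state, mid, hi, grain);
}

/* Mock address space in which only [start, end) is accessible. */
struct mock_window {
    uint64_t start, end;
    unsigned probes;                 /* how many probes were issued */
};

int mock_probe(uint64_t lo, uint64_t hi, void *state)
{
    struct mock_window *w = state;
    w->probes++;
    return lo >= w->start && hi <= w->end;
}
```

Note the cost profile: a wholly inaccessible range is probed all the way down to `grain`, so the choice of grain (a page, presumably) dominates the number of probes.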

It is necessary that cudadump spawn cudaranger rather than launching the kernels itself; a kernel failure results in all libcuda calls returning an early error (CUDA_ERROR_DEINITIALIZED (4) or CUDA_ERROR_LAUNCH_FAILED (700)) for the remainder of a process's life (i.e., creating a new context usually also fails FIXME: provide proof).
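The isolation pattern itself is ordinary POSIX process handling. The following is a minimal sketch, assuming the probe is a function run in a forked child; the real cudadump spawns the separate cudaranger binary, and every name here (`run_isolated`, `probe_fn`, the `probe_*` examples) is hypothetical. Whatever the child does to its own state, the parent only ever sees an exit status.

```c
#include <assert.h>
#include <signal.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

enum probe_outcome { PROBE_OK, PROBE_FAILED, PROBE_CRASHED, PROBE_ERROR };

/* Hypothetical stand-in for the work done by the spawned tool.
 * Returns 0 on success, anything else on failure. */
typedef int (*probe_fn)(void *arg);

/* Run probe() in a child process so that a failure which poisons the
 * child's state cannot affect the caller. */
enum probe_outcome run_isolated(probe_fn probe, void *arg)
{
    assert(probe);
    pid_t pid = fork();
    if (pid < 0)
        return PROBE_ERROR;
    if (pid == 0)
        _exit(probe(arg) == 0 ? 0 : 1);

    int status;
    if (waitpid(pid, &status, 0) != pid)
        return PROBE_ERROR;
    if (WIFSIGNALED(status))
        return PROBE_CRASHED;        /* child was killed by a signal */
    if (WIFEXITED(status) && WEXITSTATUS(status) == 0)
        return PROBE_OK;
    return PROBE_FAILED;
}

/* Example probes used to exercise run_isolated(). */
int probe_succeeds(void *arg) { (void)arg; return 0; }
int probe_fails(void *arg)    { (void)arg; return -1; }
int probe_crashes(void *arg)  { (void)arg; raise(SIGKILL); return 0; }
```

The caller can run a succeeding probe immediately after a crashing one and still get `PROBE_OK`, which is exactly the property a single-process design loses once libcuda starts returning errors.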

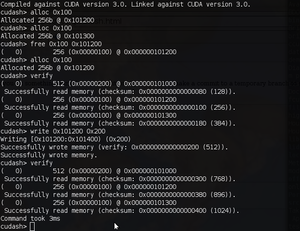

cudash

The CUDA Shell (currently making use of the Runtime API for kernel launches) facilitates stateful, invasive examination of the CUDA memory/process system. It has online help via the "help" command.

- FIXME: document commands

Other tools

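- [REnouveau](http://nouveau.freedesktop.org/wiki/REnouveau), the Reverse-Engineering-for-nouveau tool

- [MMIOtrace](http://nouveau.freedesktop.org/wiki/MmioTrace), in the Linux kernel since 2.6.27

- The x86 Page Attribute Tables and MTRRs can be examined; see those pages for more detail

- gdb and NVIDIA's cuda-gdb

- [van der Laan's](http://wiki.github.com/laanwj/decuda/) CUBIN assembler and disassembler, decuda and cudasm

- [nvtrace](http://nouveau.freedesktop.org/wiki/Nvtrace) from the nouveau project

- [nv50dis](http://0x04.net/cgit/index.cgi/nv50dis/), an NV50 disassembler

- [gpuocelot](http://code.google.com/p/gpuocelot/), a PTX binary translator targeting CUDA and multithreaded x86

- [libecuda](http://upcommons.upc.edu/pfc/bitstream/2099.1/7589/1/PFC.pdf), a CUDART replacement and PTX emulator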

See Also

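- "[Benchmarking GPUs to Tune Dense Linear Algebra](http://sites.google.com/site/cudaiap2009/materials-1/research-papers-1/benchmarkinggpustotunedenselinearalgebra)" is surprisingly rich in hardware details

  - but that's [James Demmel](http://www.eecs.berkeley.edu/~demmel/) (aka a Real American Badass) for you!

- "[Demystifying the GPU Through Benchmarking](http://www.eecg.toronto.edu/~myrto/gpuarch-ispass2010.pdf)" continues with this theme.

- LWN's State of Nouveau articles ([Part 1](http://lwn.net/Articles/269558) and [Part 2](http://lwn.net/Articles/270830/))

- The [Nouveau Development Companion](http://nouveau.freedesktop.org/wiki/IrcChatLogs)

- This Japanese [article](http://pc.watch.impress.co.jp/docs/2008/0617/kaigai446.htm) has some great diagrams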

- libcudest, my effort to reverse engineer and reimplement libcuda.so