
Io uring: Difference between revisions


__NOTOC__

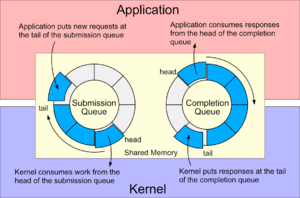

[[File:Uring 0.png|right|thumb|A nice io_uring visualization courtesy Donald Hunter]]

Introduced in 2019 (kernel 5.1) by Jens Axboe, <tt>io_uring</tt> (henceforth uring) is a system for providing the kernel with a schedule of system calls, and receiving the results as they're generated. Whereas [[epoll]] and [[kqueue]] support multiplexing, where you're told when you can usefully perform a system call using some set of filters, uring allows you to specify the system calls themselves (and dependencies between them), and execute the schedule at the kernel [https://en.wikipedia.org/wiki/Dataflow_architecture dataflow limit]. It combines asynchronous I/O, system call polybatching, and flexible buffer management, and is IMHO the most substantial development in the [[Linux_APIs|Linux I/O model]] since Berkeley sockets (yes, I'm aware Berkeley sockets preceded Linux. Let's then say that it's the most substantial development in the UNIX I/O model to originate in Linux):

* Asynchronous I/O without the large copy overheads and restrictions of POSIX AIO
* System call batching/linking across distinct system calls
* Provide a buffer pool, and they'll be used as needed
* Both polling- and interrupt-driven I/O on the kernel side

The core system calls of uring are wrapped by the C API of [https://github.com/axboe/liburing liburing]. Win32 added a very similar interface, [https://learn.microsoft.com/en-us/windows/win32/api/ioringapi/ IoRing], in 2020. In my opinion, uring ought to largely displace [[epoll]] in new Linux code. [[FreeBSD]] seems to be sticking with [[kqueue]], meaning code using uring won't run there, but neither did epoll (save through FreeBSD's somewhat dubious Linux compatibility layer). Both the system calls and liburing have fairly comprehensive man page coverage, including the [https://www.man7.org/linux/man-pages/man7/io_uring.7.html io_uring.7] top-level page.

==Rings==

Central to every uring are two ringbuffers holding CQEs (Completion Queue Entries) and SQE (Submission Queue Entry) descriptors (as best I can tell, this terminology was borrowed from the [https://nvmexpress.org/specifications/ NVMe specification]; I've also seen it with Mellanox NICs). Note that SQEs are allocated externally to the SQ descriptor ring (the same is not true for CQEs). SQEs roughly correspond to a single system call: they are tagged with an operation type, and filled in with the values that would traditionally be supplied as arguments to the appropriate function. Userspace is provided references to SQEs on the SQE ring, which it fills in and submits. Submission operates up through a specified SQE, and thus all SQEs before it in the ring must also be ready to go (this is likely the main reason why the SQ holds descriptors to an external ring of SQEs: you can acquire SQEs, and then submit them out of order, but see <tt>IORING_SETUP_NO_SQARRAY</tt>). The kernel places results in the CQE ring. These rings are shared between kernel- and userspace. The rings must be mapped separately unless the kernel advertises the <tt>IORING_FEAT_SINGLE_MMAP</tt> feature (see below).

uring does not generally make use of <tt>errno</tt>. Synchronous functions return the negative error code as their result. Completion queue entries have the negated error code placed in their <tt>res</tt> fields.
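Because results arrive as negated error codes rather than through <tt>errno</tt>, it can help to keep the sign handling in one place. The following is a minimal sketch: the helper names <tt>uring_res_errno</tt> and <tt>uring_res_str</tt> are hypothetical (they are not part of liburing), and <tt>res</tt> stands in for either a synchronous return value or a CQE's <tt>res</tt> field.

<syntaxhighlight lang="c">
#include <assert.h>
#include <errno.h>
#include <limits.h>
#include <string.h>

// Hypothetical helper: map a uring result to a positive errno value,
// or 0 if the result indicates success (zero or a positive count).
static inline int uring_res_errno(int res){
  assert(res != INT_MIN); // negating INT_MIN would overflow
  return res < 0 ? -res : 0;
}

// Hypothetical helper: human-readable description of a uring result.
static inline const char *uring_res_str(int res){
  return res < 0 ? strerror(uring_res_errno(res)) : "success";
}
</syntaxhighlight>

A completion handler might then branch on <tt>uring_res_errno(cqe->res)</tt> instead of consulting <tt>errno</tt>.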

CQEs are usually 16 bytes, and SQEs are usually 64 bytes (but see <tt>IORING_SETUP_SQE128</tt> and <tt>IORING_SETUP_CQE32</tt> below). Either way, SQEs are allocated externally to the submission queue, which is merely a ring of 32-bit descriptors.
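The indirection described above (a ring of 32-bit descriptors pointing into a separate pool of SQEs) can be illustrated with a toy model. This is only a sketch under simplifying assumptions: every name here (<tt>toy_sq</tt>, <tt>toy_sq_publish</tt>, <tt>toy_sq_consume</tt>) is hypothetical, the ring has a fixed size, and the memory barriers that the real shared-memory rings require are omitted entirely.

<syntaxhighlight lang="c">
#include <assert.h>
#include <stdint.h>

#define TOY_ENTRIES 8u // must be a power of two

// Toy model only: the real rings live in memory shared with the kernel
// and need acquire/release ordering on head and tail.
struct toy_sq {
  uint32_t head;               // consumer ("kernel") position
  uint32_t tail;               // producer (userspace) position
  uint32_t array[TOY_ENTRIES]; // 32-bit descriptors: indices into the SQE pool
};

// Publish the SQE at pool index sqe_idx. Returns 0 on success, -1 if full.
static inline int toy_sq_publish(struct toy_sq *sq, uint32_t sqe_idx){
  assert(sqe_idx < TOY_ENTRIES);
  if(sq->tail - sq->head == TOY_ENTRIES){
    return -1;
  }
  sq->array[sq->tail & (TOY_ENTRIES - 1)] = sqe_idx;
  ++sq->tail;
  return 0;
}

// Consume the next descriptor, as the kernel side would. Returns the SQE
// pool index, or -1 if the ring is empty.
static inline int64_t toy_sq_consume(struct toy_sq *sq){
  if(sq->head == sq->tail){
    return -1;
  }
  return sq->array[sq->head++ & (TOY_ENTRIES - 1)];
}
</syntaxhighlight>

Since the descriptors are published independently of the pool order, SQE 5 can be submitted before SQE 2 even though it sits later in the pool.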

===System calls===

The liburing interface will be sufficient for most users, and it is possible to operate almost wholly without system calls when the system is busy. For the sake of completeness, here are the three system calls implementing the uring core (from the kernel's <tt>io_uring/io_uring.c</tt>):

<syntaxhighlight lang="c">
int io_uring_setup(u32 entries, struct io_uring_params *p);
int io_uring_enter(unsigned fd, u32 to_submit, u32 min_complete, u32 flags, const void* argp, size_t argsz);
int io_uring_register(unsigned fd, unsigned opcode, void *arg, unsigned int nr_args);
</syntaxhighlight>

Note that <tt>io_uring_enter(2)</tt> corresponds more closely to the <tt>io_uring_enter2(3)</tt> wrapper, and indeed <tt>io_uring_enter(3)</tt> is defined in terms of the latter (from liburing's <tt>src/syscall.c</tt>):

<syntaxhighlight lang="c">
static inline int __sys_io_uring_enter2(unsigned int fd, unsigned int to_submit,
    unsigned int min_complete, unsigned int flags, sigset_t *sig, size_t sz){
  return (int) __do_syscall6(__NR_io_uring_enter, fd, to_submit, min_complete, flags, sig, sz);
}

static inline int __sys_io_uring_enter(unsigned int fd, unsigned int to_submit,
    unsigned int min_complete, unsigned int flags, sigset_t *sig){
  return __sys_io_uring_enter2(fd, to_submit, min_complete, flags, sig, _NSIG / 8);
}
</syntaxhighlight>

<tt>io_uring_enter(2)</tt> can both submit SQEs and wait until some number of CQEs are available. Its <tt>flags</tt> parameter is a bitmask over:

{| class="wikitable" border="1"
! Flag !! Description
|-
| <tt>IORING_ENTER_GETEVENTS</tt> || Wait until at least <tt>min_complete</tt> CQEs are ready before returning.
|-
| <tt>IORING_ENTER_SQ_WAKEUP</tt> || Wake up the kernel thread created when using <tt>IORING_SETUP_SQPOLL</tt>.
|-
| <tt>IORING_ENTER_SQ_WAIT</tt> || Wait until at least one entry is free in the submission ring before returning.
|-
| <tt>IORING_ENTER_EXT_ARG</tt> || (Since Linux 5.11) Interpret <tt>argp</tt> (<tt>sig</tt> in the liburing wrappers) as a pointer to an <tt>io_uring_getevents_arg</tt> rather than a pointer to <tt>sigset_t</tt>. This structure can specify both a <tt>sigset_t</tt> and a timeout.
<syntaxhighlight lang="c">
struct io_uring_getevents_arg {
  __u64 sigmask;
  __u32 sigmask_sz;
  __u32 pad;
  __u64 ts;
};
</syntaxhighlight>
Is <tt>ts</tt> nanoseconds from now? From the Epoch? Nope! <tt>ts</tt> is actually a <i>pointer</i> to a <tt>__kernel_timespec</tt>, passed to <tt>u64_to_user_ptr()</tt> in the kernel. One of the uglier aspects of uring.
|-
| <tt>IORING_ENTER_REGISTERED_RING</tt> || <tt>fd</tt> is an offset into the registered ring pool rather than a normal file descriptor.
|-
| <tt>IORING_ENTER_ABS_TIMER</tt> || (Since Linux 6.12) The timeout argument in the <tt>io_uring_getevents_arg</tt> is an absolute time, using the registered clock.
|-
|}
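Since <tt>ts</tt> and <tt>sigmask</tt> carry pointers inside 64-bit integers, filling in the extended argument takes a little casting. Here is a minimal sketch of that step. The <tt>toy_</tt> structures are local mirrors written out for illustration (real code should use the definitions from the kernel headers), and <tt>toy_fill_getevents_arg</tt> is a hypothetical helper, not a liburing function.

<syntaxhighlight lang="c">
#include <assert.h>
#include <stdint.h>
#include <string.h>

// Local mirrors of the kernel structures, for illustration only.
struct toy_kernel_timespec {
  int64_t tv_sec;
  long long tv_nsec;
};

struct toy_getevents_arg {
  uint64_t sigmask;    // pointer to a signal mask, or 0
  uint32_t sigmask_sz; // size of that mask in bytes
  uint32_t pad;
  uint64_t ts;         // pointer to a timespec, NOT a duration
};

// Hypothetical helper: store both pointers as 64-bit integers.
static inline void toy_fill_getevents_arg(struct toy_getevents_arg *arg,
                                          const void *sigmask, uint32_t sigmask_sz,
                                          const struct toy_kernel_timespec *ts){
  assert(arg != NULL);
  memset(arg, 0, sizeof(*arg)); // also zeroes the padding
  arg->sigmask = (uint64_t)(uintptr_t)sigmask;
  arg->sigmask_sz = sigmask ? sigmask_sz : 0;
  arg->ts = (uint64_t)(uintptr_t)ts;
}
</syntaxhighlight>

The timespec must remain valid for the duration of the call, since only its address is passed along.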

==Setup==

| Line 37: | Line 81: | ||

</syntaxhighlight>

<tt>resv</tt> must be zeroed out. In the absence of flags, the uring uses interrupt-driven I/O. Calling <tt>close(2)</tt> on the returned descriptor frees all resources associated with the uring.

<tt>io_uring_setup(2)</tt> is wrapped by liburing's <tt>io_uring_queue_init(3)</tt> and <tt>io_uring_queue_init_params(3)</tt>. When using these wrappers, <tt>io_uring_queue_exit(3)</tt> should be used to clean up.

<syntaxhighlight lang="c">
int io_uring_queue_init(unsigned entries, struct io_uring *ring, unsigned flags);
int io_uring_queue_init_params(unsigned entries, struct io_uring *ring, struct io_uring_params *params);
void io_uring_queue_exit(struct io_uring *ring);
</syntaxhighlight>

These wrappers operate on a <tt>struct io_uring</tt>:

<syntaxhighlight lang="c">
struct io_uring {
  struct io_uring_sq sq;
  struct io_uring_cq cq;
  unsigned flags;
  int ring_fd;
  unsigned features;
  int enter_ring_fd;
  __u8 int_flags;
  __u8 pad[3];
  unsigned pad2;
};

struct io_uring_sq {
  unsigned *khead;
  unsigned *ktail;
  unsigned *kring_mask; // Deprecated: use `ring_mask`
  unsigned *kring_entries; // Deprecated: use `ring_entries`
  unsigned *kflags;
  unsigned *kdropped;
  unsigned *array;
  struct io_uring_sqe *sqes;
  unsigned sqe_head;
  unsigned sqe_tail;
  size_t ring_sz;
  void *ring_ptr;
  unsigned ring_mask;
  unsigned ring_entries;
  unsigned pad[2];
};

struct io_uring_cq {
  unsigned *khead;
  unsigned *ktail;
  unsigned *kring_mask; // Deprecated: use `ring_mask`
  unsigned *kring_entries; // Deprecated: use `ring_entries`
  unsigned *kflags;
  unsigned *koverflow;
  struct io_uring_cqe *cqes;
  size_t ring_sz;
  void *ring_ptr;
  unsigned ring_mask;
  unsigned ring_entries;
  unsigned pad[2];
};
</syntaxhighlight>

<tt>io_uring_queue_init(3)</tt> takes an <tt>unsigned flags</tt> argument, which is passed as the <tt>flags</tt> field of <tt>io_uring_params</tt>. <tt>io_uring_queue_init_params(3)</tt> takes a <tt>struct io_uring_params*</tt> argument, which is passed through directly to <tt>io_uring_setup(2)</tt>. It's best to avoid mixing the low-level API and that provided by liburing.

===Ring structure===

The details of ring structure are typically only relevant when using the low-level API. Two offset structs are used to prepare and control the three (or two, see <tt>IORING_FEAT_SINGLE_MMAP</tt>) backing memory maps.

<syntaxhighlight lang="c">
struct io_sqring_offsets {

| Line 49: | Line 149: | ||

  __u32 ring_mask;
  __u32 ring_entries;
  __u32 flags; // IORING_SQ_*
  __u32 dropped;
  __u32 array;
  __u32 resv1;
  __u64 user_addr; // sqe mmap for IORING_SETUP_NO_MMAP
};

| Line 62: | Line 163: | ||

  __u32 overflow;
  __u32 cqes;
  __u32 flags; // IORING_CQ_*
  __u32 resv1;
  __u64 user_addr; // sq/cq mmap for IORING_SETUP_NO_MMAP
};
</syntaxhighlight>

| Line 83: | Line 185: | ||

Recall that when the kernel expresses <tt>IORING_FEAT_SINGLE_MMAP</tt>, the submission and completion queues can be allocated in one <tt>mmap(2)</tt> call.
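The sizes of these maps are derived from the offset structs. The sketch below shows the arithmetic as I understand it from <tt>io_uring_setup(2)</tt>; treat it as an assumption to verify rather than a reference. The helper names are hypothetical, <tt>array_off</tt> and <tt>cqes_off</tt> stand for <tt>sq_off.array</tt> and <tt>cq_off.cqes</tt>, the entry counts are those the kernel reports back in <tt>io_uring_params</tt>, and the default 64-byte SQE and 16-byte CQE sizes are assumed.

<syntaxhighlight lang="c">
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

// Assumed default entry sizes (IORING_SETUP_SQE128 and IORING_SETUP_CQE32
// would double them).
enum { TOY_SQE_BYTES = 64, TOY_CQE_BYTES = 16 };

// SQ ring map: ring metadata followed by the array of 32-bit descriptors.
static inline size_t toy_sq_ring_bytes(uint32_t array_off, uint32_t sq_entries){
  assert(sq_entries != 0);
  return (size_t)array_off + (size_t)sq_entries * sizeof(uint32_t);
}

// CQ ring map: ring metadata followed by the CQEs themselves.
static inline size_t toy_cq_ring_bytes(uint32_t cqes_off, uint32_t cq_entries){
  assert(cq_entries != 0);
  return (size_t)cqes_off + (size_t)cq_entries * TOY_CQE_BYTES;
}

// The SQEs live in their own map, external to the SQ ring.
static inline size_t toy_sqes_bytes(uint32_t sq_entries){
  return (size_t)sq_entries * TOY_SQE_BYTES;
}

// With IORING_FEAT_SINGLE_MMAP, one map must cover the larger of the two rings.
static inline size_t toy_single_mmap_bytes(size_t sq_bytes, size_t cq_bytes){
  return sq_bytes > cq_bytes ? sq_bytes : cq_bytes;
}
</syntaxhighlight>

The resulting lengths would be handed to <tt>mmap(2)</tt> on the ring descriptor; the SQE map stays separate either way.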

===Setup flags===

The <tt>flags</tt> field is set up by the caller, and is a bitmask over:

{|class="wikitable" border="1"

| Line 90: | Line 192: | ||

| IORING_SETUP_IOPOLL || 5.1 || Instruct the kernel to use polled (as opposed to interrupt-driven) I/O. This is intended for block devices, and requires that <tt>O_DIRECT</tt> was provided when the file descriptor was opened.
|-
| IORING_SETUP_SQPOLL || 5.1 (5.11 for full features) || Create a kernel thread to poll on the submission queue. If the submission queue is kept busy, this thread will reap SQEs without the need for a system call. If enough time goes by without new submissions, the kernel thread goes to sleep, and <tt>io_uring_enter(2)</tt> must be called to wake it. Cannot be used with <tt>IORING_SETUP_COOP_TASKRUN</tt>, <tt>IORING_SETUP_TASKRUN_FLAG</tt>, or <tt>IORING_SETUP_DEFER_TASKRUN</tt>.
|-
| IORING_SETUP_SQ_AFF || 5.1 || Only meaningful with <tt>IORING_SETUP_SQPOLL</tt>. The poll thread will be bound to the core specified in <tt>sq_thread_cpu</tt>.

| Line 104: | Line 206: | ||

| IORING_SETUP_SUBMIT_ALL || 5.18 || Continue submitting SQEs from a batch even after one results in error.
|-
| IORING_SETUP_COOP_TASKRUN || 5.19 || Don't interrupt userspace processes to indicate CQE availability. It's usually desirable to allow events to be processed at arbitrary kernelspace transitions, in which case this flag can be provided to improve performance. Cannot be used with <tt>IORING_SETUP_SQPOLL</tt>.
|-
| IORING_SETUP_TASKRUN_FLAG || 5.19 || Requires <tt>IORING_SETUP_COOP_TASKRUN</tt> or <tt>IORING_SETUP_DEFER_TASKRUN</tt>. When completions are pending awaiting processing, the <tt>IORING_SQ_TASKRUN</tt> flag will be set in the submission ring. This will be checked by <tt>io_uring_peek_cqe()</tt>, which will enter the kernel to process them. Cannot be used with <tt>IORING_SETUP_SQPOLL</tt>.
|-
| IORING_SETUP_SQE128 || 5.19 || Use 128-byte SQEs, necessary for NVMe passthroughs using <tt>IORING_OP_URING_CMD</tt>.

| Line 114: | Line 216: | ||

| IORING_SETUP_SINGLE_ISSUER || 6.0 || Hint to the kernel that only a single thread will submit requests, allowing for optimizations. This thread must either be the thread which created the ring, or (iff <tt>IORING_SETUP_R_DISABLED</tt> is used) the thread which enables the ring.
|-
| IORING_SETUP_DEFER_TASKRUN || 6.1 || Requires <tt>IORING_SETUP_SINGLE_ISSUER</tt>. Don't process completions at arbitrary kernel/scheduler transitions, but only when <tt>io_uring_enter(2)</tt> is called with <tt>IORING_ENTER_GETEVENTS</tt> by the thread that submitted the SQEs. Cannot be used with <tt>IORING_SETUP_SQPOLL</tt>.
|-
| IORING_SETUP_NO_MMAP || 6.5 || The caller will provide two memory regions (the queue rings in <tt>cq_off.user_addr</tt>, the SQEs in <tt>sq_off.user_addr</tt>) rather than having them allocated by the kernel (they may both come from a single map). This allows for the use of huge pages.
|-
| IORING_SETUP_REGISTERED_FD_ONLY || 6.6 || Registers the ring fd for use with <tt>IORING_REGISTER_USE_REGISTERED_RING</tt>. Returns a registered fd index.
|-
| IORING_SETUP_NO_SQARRAY || 6.6 || Do not use an indirection array for the submission queue, instead submitting them strictly in order.
|-
|}
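The compatibility rules scattered through the table can be collected into a single check. The following is only a sketch of the constraints as stated above: the <tt>TOY_SETUP_*</tt> bit values are placeholders chosen for the example (real code must use the <tt>IORING_SETUP_*</tt> constants from <tt>linux/io_uring.h</tt>), and <tt>toy_setup_flags_ok</tt> is a hypothetical helper. The kernel remains the final arbiter and may reject combinations not modeled here.

<syntaxhighlight lang="c">
#include <assert.h>
#include <stdbool.h>

// Placeholder bit values for illustration; NOT the kernel's values.
enum {
  TOY_SETUP_SQPOLL        = 1u << 0,
  TOY_SETUP_COOP_TASKRUN  = 1u << 1,
  TOY_SETUP_TASKRUN_FLAG  = 1u << 2,
  TOY_SETUP_SINGLE_ISSUER = 1u << 3,
  TOY_SETUP_DEFER_TASKRUN = 1u << 4,
  TOY_SETUP_ALL           = (1u << 5) - 1,
};

// Returns true if the combination respects the rules from the table above.
static inline bool toy_setup_flags_ok(unsigned flags){
  assert((flags & ~(unsigned)TOY_SETUP_ALL) == 0); // only modeled bits allowed
  const unsigned taskrun = TOY_SETUP_COOP_TASKRUN | TOY_SETUP_TASKRUN_FLAG |
                           TOY_SETUP_DEFER_TASKRUN;
  // SQPOLL cannot be combined with any of the task-run flags.
  if((flags & TOY_SETUP_SQPOLL) && (flags & taskrun)){
    return false;
  }
  // TASKRUN_FLAG requires COOP_TASKRUN or DEFER_TASKRUN.
  if((flags & TOY_SETUP_TASKRUN_FLAG) &&
     !(flags & (TOY_SETUP_COOP_TASKRUN | TOY_SETUP_DEFER_TASKRUN))){
    return false;
  }
  // DEFER_TASKRUN requires SINGLE_ISSUER.
  if((flags & TOY_SETUP_DEFER_TASKRUN) && !(flags & TOY_SETUP_SINGLE_ISSUER)){
    return false;
  }
  return true;
}
</syntaxhighlight>

Checking up front is optional, but it can turn an opaque setup failure into a more specific diagnostic.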

When <tt>IORING_SETUP_R_DISABLED</tt> is used, the ring must be enabled before submissions can take place. If using the liburing API, this is done via <tt>io_uring_enable_rings(3)</tt>:

<syntaxhighlight lang="c">
int io_uring_enable_rings(struct io_uring *ring);
</syntaxhighlight>

===Kernel features===

| Line 149: | Line 262: | ||

|-
| <tt>IORING_FEAT_LINKED_FILE</tt> || 5.17 || Defer file assignment until execution of a given request begins.
|-
| <tt>IORING_FEAT_REG_REG_RING</tt> || 6.3 || Ring descriptors can be registered with <tt>IORING_REGISTER_USE_REGISTERED_RING</tt>.
|-
| <tt>IORING_FEAT_RECVSEND_BUNDLE</tt> || 6.10 || <tt>send</tt> and <tt>recv</tt> operations can be bundled.
|-
| <tt>IORING_FEAT_MIN_TIMEOUT</tt> || 6.12 || A minimum batch wait timeout is supported.
|-
|}

===Registered resources===

Various resources can be registered with the kernel side of the uring. Registration always occurs via the <tt>io_uring_register(2)</tt> system call, multiplexed on <tt>unsigned opcode</tt>. If <tt>opcode</tt> contains the bit <tt>IORING_REGISTER_USE_REGISTERED_RING</tt>, the <tt>fd</tt> passed must be a registered ring descriptor.

====Rings====

Registering the uring's file descriptor (as returned by <tt>io_uring_setup(2)</tt>) with <tt>IORING_REGISTER_RING_FDS</tt> allows for less overhead in <tt>io_uring_enter(2)</tt> when called with <tt>IORING_ENTER_REGISTERED_RING</tt>. If more than one thread is to use the uring, each thread must register the ring fd separately.

{| class="wikitable" border="1"
! Flag !! Kernel !! Description
|-
| <tt>IORING_REGISTER_RING_FDS</tt> || 5.18 || Register this ring descriptor for use by this thread.
|-
| <tt>IORING_UNREGISTER_RING_FDS</tt> || 5.18 || Unregister the registered ring descriptor.
|}

====Buffers====

Since Linux 5.7, user-allocated memory can be provided to uring in groups of buffers (each with a group ID), in which each buffer has its own ID. This was done with the <tt>io_uring_prep_provide_buffers(3)</tt> call, operating on an SQE. Since 5.19, the "ringmapped buffers" technique (<tt>io_uring_register_buf_ring(3)</tt>) allows these buffers to be used much more effectively.

{| class="wikitable" border="1"
! Flag !! Kernel !! Description
|-
| <tt>IORING_REGISTER_BUFFERS</tt> || 5.1 ||
|-
| <tt>IORING_UNREGISTER_BUFFERS</tt> || 5.1 ||
|-
| <tt>IORING_REGISTER_BUFFERS2</tt> || 5.13 || <syntaxhighlight lang="c">
struct io_uring_rsrc_register {
  __u32 nr;
  __u32 resv;
  __u64 resv2;
  __aligned_u64 data;
  __aligned_u64 tags;
};
</syntaxhighlight>
|-
| <tt>IORING_REGISTER_BUFFERS_UPDATE</tt> || 5.13 || <syntaxhighlight lang="c">
struct io_uring_rsrc_update2 {
  __u32 offset;
  __u32 resv;
  __aligned_u64 data;
  __aligned_u64 tags;
  __u32 nr;
  __u32 resv2;
};
</syntaxhighlight>
|-
| <tt>IORING_REGISTER_PBUF_RING</tt> || 5.19 || <syntaxhighlight lang="c">
struct io_uring_buf_reg {
  __u64 ring_addr;
  __u32 ring_entries;
  __u16 bgid;
  __u16 pad;
  __u64 resv[3];
};
</syntaxhighlight>
|-
| <tt>IORING_UNREGISTER_PBUF_RING</tt> || 5.19 ||
|-
|}

====Registered file descriptors====

Registered (sometimes "direct") descriptors are integers corresponding to private file handle structures internal to the uring, and can be used anywhere uring wants a file descriptor through the <tt>IOSQE_FIXED_FILE</tt> flag. They have less overhead than true file descriptors, which use structures shared among threads. Note that registered files are required for submission queue polling unless the <tt>IORING_FEAT_SQPOLL_NONFIXED</tt> feature flag was returned. Registered files can be passed between rings using <tt>io_uring_prep_msg_ring_fd(3)</tt>.

<syntaxhighlight lang="cpp">
int io_uring_register_files(struct io_uring *ring, const int *files, unsigned nr_files);
int io_uring_register_files_tags(struct io_uring *ring, const int *files, const __u64 *tags, unsigned nr);
int io_uring_register_files_sparse(struct io_uring *ring, unsigned nr_files);
int io_uring_register_files_update(struct io_uring *ring, unsigned off, const int *files, unsigned nr_files);
int io_uring_register_files_update_tag(struct io_uring *ring, unsigned off, const int *files, const __u64 *tags, unsigned nr_files);
int io_uring_unregister_files(struct io_uring *ring);
</syntaxhighlight>

{| class="wikitable" border="1"
! Flag !! Kernel !! Description
|-
| <tt>IORING_REGISTER_FILES</tt> || 5.1 ||
|-
| <tt>IORING_UNREGISTER_FILES</tt> || 5.1 ||
|-
| <tt>IORING_REGISTER_FILES2</tt> || 5.13 ||
|-
| <tt>IORING_REGISTER_FILES_UPDATE</tt> || 5.5 (5.12 for all features) ||
|-
| <tt>IORING_REGISTER_FILES_UPDATE2</tt> || 5.13 ||
|-
| <tt>IORING_REGISTER_FILE_ALLOC_RANGE</tt> || 6.0 || <syntaxhighlight lang="c">
struct io_uring_file_index_range {
  __u32 off;
  __u32 len;
  __u64 resv;
};
</syntaxhighlight>
|-
|}

====Personalities====

{| class="wikitable" border="1"
! Flag !! Kernel !! Description
|-
| <tt>IORING_REGISTER_PERSONALITY</tt> || 5.6 ||
|-
| <tt>IORING_UNREGISTER_PERSONALITY</tt> || 5.6 ||
|-
|}

====NAPI settings====

{| class="wikitable" border="1"
! Flag !! Kernel !! Description
|-
| <tt>IORING_REGISTER_NAPI</tt> || ||
|-
| <tt>IORING_UNREGISTER_NAPI</tt> || ||
|-
|}

==Submitting work==

| Line 186: | Line 396: | ||

};
</syntaxhighlight>

Flags can be set on a per-SQE basis using <tt>io_uring_sqe_set_flags(3)</tt>, or writing to the <tt>flags</tt> field directly:

<syntaxhighlight lang="c">
static inline
void io_uring_sqe_set_flags(struct io_uring_sqe *sqe, unsigned flags){
  sqe->flags = (__u8) flags;
}
</syntaxhighlight>

The flags are a bitfield over:

{| class="wikitable" border="1"
! SQE flag !! Kernel !! Description
|-
| <tt>IOSQE_FIXED_FILE</tt> || 5.1 || References a registered descriptor.
|-
| <tt>IOSQE_IO_DRAIN</tt> || 5.2 || Issue after in-flight I/O, and do not issue new SQEs before this completes. Incompatible with <tt>IOSQE_CQE_SKIP_SUCCESS</tt> anywhere in the same linked set.
|-
| <tt>IOSQE_IO_LINK</tt> || 5.3 || Links next SQE. Linked SQEs will not be started until this is done, and any unexpected result (errors, short reads, etc.) will fail all linked SQEs with <tt>-ECANCELED</tt>.
|-
| <tt>IOSQE_IO_HARDLINK</tt> || 5.5 || Same as <tt>IOSQE_IO_LINK</tt>, but a completion failure does not sever the chain (a submission failure still does).
|-
| <tt>IOSQE_ASYNC</tt> || 5.6 || Always operate asynchronously (operations which would not block are typically executed inline).
|-
| <tt>IOSQE_BUFFER_SELECT</tt> || 5.7 || Have the kernel select a buffer from a provided buffer group (see Buffers above) rather than passing one explicitly.
|-
| <tt>IOSQE_CQE_SKIP_SUCCESS</tt> || 5.17 || Don't post a CQE on success. Incompatible with <tt>IOSQE_IO_DRAIN</tt> anywhere in the same linked set.
|-
|}

<tt>ioprio</tt> includes the following flags:

{| class="wikitable" border="1"
! SQE ioprio flag !! Description
|-
| <tt>IORING_RECV_MULTISHOT</tt> ||
|-
| <tt>IORING_SEND_MULTISHOT</tt> || Introduced in 6.8.
|-
| <tt>IORING_ACCEPT_MULTISHOT</tt> ||
|-
| <tt>IORING_RECVSEND_FIXED_BUF</tt> || Use a registered buffer.
|-
| <tt>IORING_RECVSEND_POLL_FIRST</tt> || Assume the socket in a <tt>recv(2)</tt>-like command does not have data ready, so an internal poll is set up immediately.
|-
|}

===Acquiring SQEs===

If using the higher-level API, <tt>io_uring_get_sqe(3)</tt> is the primary means to acquire an SQE. If none are available, it will return <tt>NULL</tt>, and you should submit outstanding SQEs to free one up.

<syntaxhighlight lang="c">
struct io_uring_sqe *io_uring_get_sqe(struct io_uring *ring);
</syntaxhighlight>

If <tt>IORING_SETUP_SQPOLL</tt> was used to set up kernel-side SQ polling, <tt>IORING_ENTER_SQ_WAIT</tt> can be used together with <tt>io_uring_enter(2)</tt> to block on an SQE becoming available.

===Prepping SQEs===

Each SQE must be seeded with the object upon which it acts (usually a file descriptor) and any necessary arguments. You'll usually also use the user data area.

====User data====

Each SQE provides 64 bits of user-controlled data which will be copied through to any generated CQEs. Since CQEs don't include the relevant file descriptor, you'll almost always be encoding some kind of lookup information into this area.

<syntaxhighlight lang="c">
void io_uring_sqe_set_data(struct io_uring_sqe *sqe, void *user_data);
void io_uring_sqe_set_data64(struct io_uring_sqe *sqe, __u64 data);
void *io_uring_cqe_get_data(struct io_uring_cqe *cqe);
__u64 io_uring_cqe_get_data64(struct io_uring_cqe *cqe);
</syntaxhighlight>

Here's an example [[C++]] data type that encodes eight bits as an operation type, eight bits as an index, and forty-eight bits as other data. I typically use something like this to reflect the operation which was used, the index into some relevant data structure, and other information about the operation (perhaps an offset or a length). Salt to taste, of course.

<syntaxhighlight lang="cpp">
#include <cstdint>
#include <stdexcept>

union URingCtx {
  struct rep {
    // Validates each component before it is squeezed into its bitfield.
    rep(uint8_t op, unsigned ix, uint64_t d):
      type(static_cast<URingCtx::rep::optype>(op)), idx(ix), data(d)
    {
      if(type >= MAXOP){
        throw std::invalid_argument("bad uringctx op");
      }
      if(ix > MAXIDX){
        throw std::invalid_argument("bad uringctx index");
      }
      if(d > 0xffffffffffffull){
        throw std::invalid_argument("bad uringctx data");
      }
    }
    enum optype: uint8_t {
      // ...app-specific types...
      MAXOP // shouldn't be used
    } type: 8;
    uint8_t idx: 8;
    uint64_t data: 48;
  } r;
  uint64_t val; // the raw 64 bits handed to/from the SQE/CQE

  static constexpr auto MAXIDX = 255u;

  URingCtx(uint8_t op, unsigned idx, uint64_t d):
    r(op, idx, d)
  {}

  // Decode a raw 64-bit user data value (e.g. as read back from a CQE).
  URingCtx(uint64_t v):
    URingCtx(v & 0xffu, (v >> 8u) & 0xffu, v >> 16u)
  {}
};
</syntaxhighlight>
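For plain C callers, the same 8/8/48 layout can be had with shifts and masks, which also sidesteps any dependence on how the compiler lays out bitfields. This is a minimal sketch; the <tt>ctx_</tt> names are hypothetical, and the bit positions simply follow the decoding constructor above (operation in bits 0 to 7, index in bits 8 to 15, data in bits 16 to 63).

<syntaxhighlight lang="c">
#include <assert.h>
#include <stdint.h>

#define CTX_DATA_MAX 0xffffffffffffull // largest value that fits in 48 bits

// Pack op (bits 0-7), index (bits 8-15), and data (bits 16-63).
static inline uint64_t ctx_pack(uint8_t op, uint8_t idx, uint64_t data){
  assert(data <= CTX_DATA_MAX);
  return (uint64_t)op | ((uint64_t)idx << 8) | (data << 16);
}

// Accessors for a packed value, e.g. one read back from a CQE.
static inline uint8_t ctx_op(uint64_t v){ return (uint8_t)(v & 0xffu); }
static inline uint8_t ctx_idx(uint64_t v){ return (uint8_t)((v >> 8) & 0xffu); }
static inline uint64_t ctx_data(uint64_t v){ return v >> 16; }
</syntaxhighlight>

The packed value would be stored with <tt>io_uring_sqe_set_data64(3)</tt> and recovered with <tt>io_uring_cqe_get_data64(3)</tt>.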

The majority of I/O-related system calls have by now a uring equivalent (the one major exception of which I'm aware is directory listing; there seems to be no <tt>readdir(3)</tt>/<tt>getdents(2)</tt>). What follows is an incomplete list.

====Just chillin'====

<syntaxhighlight lang="c">
// IORING_OP_NOP
void io_uring_prep_nop(struct io_uring_sqe *sqe);
</syntaxhighlight>

====Opening and closing file descriptors====

<syntaxhighlight lang="c">
// IORING_OP_OPENAT (5.6, direct support since 5.15)
void io_uring_prep_openat(struct io_uring_sqe *sqe, int dfd, const char *path,
        int flags, mode_t mode);
void io_uring_prep_openat_direct(struct io_uring_sqe *sqe, int dfd, const char *path,
        int flags, mode_t mode, unsigned file_index);
// IORING_OP_OPENAT2 (5.6, direct support since 5.15)
void io_uring_prep_openat2(struct io_uring_sqe *sqe, int dfd, const char *path,
        struct open_how *how);
void io_uring_prep_openat2_direct(struct io_uring_sqe *sqe, int dfd, const char *path,
        struct open_how *how, unsigned file_index);
// IORING_OP_ACCEPT (5.5)
void io_uring_prep_accept(struct io_uring_sqe *sqe, int sockfd, struct sockaddr *addr,
        socklen_t *addrlen, int flags);

| Line 225: | Line 520: | ||

void io_uring_prep_multishot_accept_direct(struct io_uring_sqe *sqe, int sockfd, struct sockaddr *addr, | void io_uring_prep_multishot_accept_direct(struct io_uring_sqe *sqe, int sockfd, struct sockaddr *addr, | ||

socklen_t *addrlen, int flags); | socklen_t *addrlen, int flags); | ||

// IORING_OP_CLOSE (5.6, direct support since 5.15) | |||

void io_uring_prep_close(struct io_uring_sqe *sqe, int fd); | void io_uring_prep_close(struct io_uring_sqe *sqe, int fd); | ||

void io_uring_prep_close_direct(struct io_uring_sqe *sqe, unsigned file_index); | void io_uring_prep_close_direct(struct io_uring_sqe *sqe, unsigned file_index); | ||

// IORING_OP_SOCKET (5.19) | |||

void io_uring_prep_socket(struct io_uring_sqe *sqe, int domain, int type, | void io_uring_prep_socket(struct io_uring_sqe *sqe, int domain, int type, | ||

int protocol, unsigned int flags); | int protocol, unsigned int flags); | ||

| Line 234: | Line 531: | ||

int protocol, unsigned int flags); | int protocol, unsigned int flags); | ||

</syntaxhighlight> | </syntaxhighlight> | ||

====Reading and writing file descriptors====

The multishot receives (<tt>io_uring_prep_recv_multishot(3)</tt> and <tt>io_uring_prep_recvmsg_multishot(3)</tt>, introduced in Linux 6.0) require that provided buffers are used via <tt>IOSQE_BUFFER_SELECT</tt>, and preclude use of <tt>MSG_WAITALL</tt>. Meanwhile, use of <tt>IOSQE_IO_LINK</tt> with <tt>io_uring_prep_send(3)</tt> or <tt>io_uring_prep_sendmsg(3)</tt> <i>requires</i> <tt>MSG_WAITALL</tt> (despite <tt>MSG_WAITALL</tt> not typically being an argument to <tt>send(2)</tt>/<tt>sendmsg(2)</tt>).

<syntaxhighlight lang="c">
// IORING_OP_SEND (5.6)
void io_uring_prep_send(struct io_uring_sqe *sqe, int sockfd, const void *buf, size_t len, int flags);
// IORING_OP_SEND_ZC (6.0)
void io_uring_prep_send_zc(struct io_uring_sqe *sqe, int sockfd, const void *buf, size_t len, int flags, int zc_flags);
// IORING_OP_SENDMSG (5.3)
void io_uring_prep_sendmsg(struct io_uring_sqe *sqe, int fd, const struct msghdr *msg, unsigned flags);
void io_uring_prep_sendmsg_zc(struct io_uring_sqe *sqe, int fd, const struct msghdr *msg, unsigned flags);
// IORING_OP_RECV (5.6)
void io_uring_prep_recv(struct io_uring_sqe *sqe, int sockfd, void *buf, size_t len, int flags);
void io_uring_prep_recv_multishot(struct io_uring_sqe *sqe, int sockfd, void *buf, size_t len, int flags);
// IORING_OP_RECVMSG (5.3)
void io_uring_prep_recvmsg(struct io_uring_sqe *sqe, int fd, struct msghdr *msg, unsigned flags);
void io_uring_prep_recvmsg_multishot(struct io_uring_sqe *sqe, int fd, struct msghdr *msg, unsigned flags);
// IORING_OP_READ (5.6)
void io_uring_prep_read(struct io_uring_sqe *sqe, int fd, void *buf, unsigned nbytes, __u64 offset);
// IORING_OP_READV
void io_uring_prep_readv(struct io_uring_sqe *sqe, int fd, const struct iovec *iovecs, unsigned nr_vecs, __u64 offset);
void io_uring_prep_readv2(struct io_uring_sqe *sqe, int fd, const struct iovec *iovecs,
        unsigned nr_vecs, __u64 offset, int flags);
// IORING_OP_READ_FIXED
void io_uring_prep_read_fixed(struct io_uring_sqe *sqe, int fd, void *buf, unsigned nbytes, __u64 offset, int buf_index);
// IORING_OP_SHUTDOWN
void io_uring_prep_shutdown(struct io_uring_sqe *sqe, int sockfd, int how);
// IORING_OP_SPLICE
void io_uring_prep_splice(struct io_uring_sqe *sqe, int fd_in, int64_t off_in, int fd_out,
        int64_t off_out, unsigned int nbytes, unsigned int splice_flags);
// IORING_OP_SYNC_FILE_RANGE (5.2)
void io_uring_prep_sync_file_range(struct io_uring_sqe *sqe, int fd, unsigned len, __u64 offset, int flags);
// IORING_OP_TEE (5.8)
void io_uring_prep_tee(struct io_uring_sqe *sqe, int fd_in, int fd_out, unsigned int nbytes, unsigned int splice_flags);
// IORING_OP_WRITE (5.6)
void io_uring_prep_write(struct io_uring_sqe *sqe, int fd, const void *buf, unsigned nbytes, __u64 offset);
// IORING_OP_WRITEV
void io_uring_prep_writev(struct io_uring_sqe *sqe, int fd, const struct iovec *iovecs,
        unsigned nr_vecs, __u64 offset);
void io_uring_prep_writev2(struct io_uring_sqe *sqe, int fd, const struct iovec *iovecs,
        unsigned nr_vecs, __u64 offset, int flags);
// IORING_OP_WRITE_FIXED
void io_uring_prep_write_fixed(struct io_uring_sqe *sqe, int fd, const void *buf,
        unsigned nbytes, __u64 offset, int buf_index);
// IORING_OP_CONNECT (5.5)
void io_uring_prep_connect(struct io_uring_sqe *sqe, int sockfd, const struct sockaddr *addr, socklen_t addrlen);
</syntaxhighlight>

====Operations on file descriptors====

<syntaxhighlight lang="c">
// IORING_OP_URING_CMD
void io_uring_prep_cmd_sock(struct io_uring_sqe *sqe, int cmd_op, int fd, int level,
        int optname, void *optval, int optlen);
// IORING_OP_GETXATTR
void io_uring_prep_getxattr(struct io_uring_sqe *sqe, const char *name, char *value,
        const char *path, unsigned int len);
// IORING_OP_SETXATTR
void io_uring_prep_setxattr(struct io_uring_sqe *sqe, const char *name, const char *value,
        const char *path, int flags, unsigned int len);
// IORING_OP_FGETXATTR
void io_uring_prep_fgetxattr(struct io_uring_sqe *sqe, int fd, const char *name,
        char *value, unsigned int len);
// IORING_OP_FSETXATTR
void io_uring_prep_fsetxattr(struct io_uring_sqe *sqe, int fd, const char *name,
        const char *value, int flags, unsigned int len);
</syntaxhighlight>

====Manipulating directories====

<syntaxhighlight lang="c">
// IORING_OP_FSYNC
void io_uring_prep_fsync(struct io_uring_sqe *sqe, int fd, unsigned flags);
void io_uring_prep_linkat(struct io_uring_sqe *sqe, int olddirfd, const char *oldpath,
// ...
void io_uring_prep_unlink(struct io_uring_sqe *sqe, const char *path, int flags);
</syntaxhighlight>

====Timeouts and polling====

<syntaxhighlight lang="c">
// IORING_OP_POLL_ADD
void io_uring_prep_poll_add(struct io_uring_sqe *sqe, int fd, unsigned poll_mask);
void io_uring_prep_poll_multishot(struct io_uring_sqe *sqe, int fd, unsigned poll_mask);
// IORING_OP_POLL_REMOVE
void io_uring_prep_poll_remove(struct io_uring_sqe *sqe, __u64 user_data);
void io_uring_prep_poll_update(struct io_uring_sqe *sqe, __u64 old_user_data, __u64 new_user_data, unsigned poll_mask, unsigned flags);
// IORING_OP_TIMEOUT
void io_uring_prep_timeout(struct io_uring_sqe *sqe, struct __kernel_timespec *ts, unsigned count, unsigned flags);
void io_uring_prep_timeout_update(struct io_uring_sqe *sqe, struct __kernel_timespec *ts, __u64 user_data, unsigned flags);
// IORING_OP_TIMEOUT_REMOVE
void io_uring_prep_timeout_remove(struct io_uring_sqe *sqe, __u64 user_data, unsigned flags);
</syntaxhighlight>

====Transring communication====

All transring messages are prepared using the target uring's file descriptor, and thus aren't particularly compatible with the higher-level liburing API (of course, you can just yank <tt>ring_fd</tt> out of <tt>struct io_uring</tt>). That's unfortunate, because this functionality is very useful in a multithreaded environment. Note that the ring to which the SQE is submitted can itself be the target of the message!

<syntaxhighlight lang="c">
// IORING_OP_MSG_RING
void io_uring_prep_msg_ring(struct io_uring_sqe *sqe, int fd, unsigned int len, __u64 data, unsigned int flags);
void io_uring_prep_msg_ring_cqe_flags(struct io_uring_sqe *sqe, int fd, unsigned int len, __u64 data, unsigned int flags, unsigned int cqe_flags);
void io_uring_prep_msg_ring_fd(struct io_uring_sqe *sqe, int fd, int source_fd, int target_fd, __u64 data, unsigned int flags);
void io_uring_prep_msg_ring_fd_alloc(struct io_uring_sqe *sqe, int fd, int source_fd, __u64 data, unsigned int flags);
</syntaxhighlight>
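As a rough illustration of the simplest of these, the sketch below posts a message from one ring to another by pulling <tt>ring_fd</tt> out of the target's <tt>struct io_uring</tt>. It assumes two rings owned by the same process and a kernel new enough to support <tt>IORING_OP_MSG_RING</tt>; the name <tt>send_ring_msg</tt> is hypothetical.

<syntaxhighlight lang="c">
#include <errno.h>
#include <liburing.h>

// Post a message from `src` to `dst`. The target ring receives a CQE whose
// res is `len` and whose user_data is `data`; the source ring separately
// receives its own CQE reporting whether delivery succeeded.
static int send_ring_msg(struct io_uring *src, struct io_uring *dst,
                         unsigned len, __u64 data){
  struct io_uring_sqe *sqe = io_uring_get_sqe(src);
  if(sqe == NULL){
    return -EBUSY; // source submission queue is full
  }
  io_uring_prep_msg_ring(sqe, dst->ring_fd, len, data, 0);
  int ret = io_uring_submit(src);
  return ret < 0 ? ret : 0;
}
</syntaxhighlight>

A thread blocked in <tt>io_uring_wait_cqe(3)</tt> on the target ring will wake up with the message's CQE, which makes this a convenient cross-thread doorbell.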

====Futexes====

<syntaxhighlight lang="c">
// IORING_OP_FUTEX_WAKE
void io_uring_prep_futex_wake(struct io_uring_sqe *sqe, uint32_t *futex, uint64_t val,
        uint64_t mask, uint32_t futex_flags, unsigned int flags);
// IORING_OP_FUTEX_WAIT
void io_uring_prep_futex_wait(struct io_uring_sqe *sqe, uint32_t *futex, uint64_t val,
        uint64_t mask, uint32_t futex_flags, unsigned int flags);
// IORING_OP_FUTEX_WAITV
void io_uring_prep_futex_waitv(struct io_uring_sqe *sqe, struct futex_waitv *futex,
        uint32_t nr_futex, unsigned int flags);
</syntaxhighlight>

====Cancellation====

<syntaxhighlight lang="c">
// IORING_OP_ASYNC_CANCEL
void io_uring_prep_cancel64(struct io_uring_sqe *sqe, __u64 user_data, int flags);
void io_uring_prep_cancel(struct io_uring_sqe *sqe, void *user_data, int flags);
void io_uring_prep_cancel_fd(struct io_uring_sqe *sqe, int fd, int flags);
</syntaxhighlight>

===Sending it to the kernel===

If <tt>IORING_SETUP_SQPOLL</tt> was provided when creating the uring, a thread was spawned to poll the submission queue. If the thread is awake, there is no need to make a system call; the kernel will ingest the SQE as soon as it is written (<tt>io_uring_submit(3)</tt> still must be used, but no system call will be made). This thread goes to sleep after <tt>sq_thread_idle</tt> milliseconds idle, in which case <tt>IORING_SQ_NEED_WAKEUP</tt> will be written to the <tt>flags</tt> field of the submission ring.

<syntaxhighlight lang="c">
int io_uring_submit(struct io_uring *ring);
// ...
int io_uring_submit_and_wait_timeout(struct io_uring *ring, struct io_uring_cqe **cqe_ptr, unsigned wait_nr,
        struct __kernel_timespec *ts, sigset_t *sigmask);
int io_uring_submit_and_get_events(struct io_uring *ring);
</syntaxhighlight>

<tt>io_uring_submit()</tt> and <tt>io_uring_submit_and_wait()</tt> are trivial wrappers around the internal <tt>__io_uring_submit_and_wait()</tt>:

<syntaxhighlight lang="c">
int io_uring_submit(struct io_uring *ring){
  return __io_uring_submit_and_wait(ring, 0);
}

int io_uring_submit_and_wait(struct io_uring *ring, unsigned wait_nr){
  return __io_uring_submit_and_wait(ring, wait_nr);
}
</syntaxhighlight>

which is in turn, along with <tt>io_uring_submit_and_get_events()</tt>, a trivial wrapper around the internal <tt>__io_uring_submit()</tt> (calling <tt>__io_uring_flush_sq()</tt> along the way):

<syntaxhighlight lang="c">
static int __io_uring_submit_and_wait(struct io_uring *ring, unsigned wait_nr){
  return __io_uring_submit(ring, __io_uring_flush_sq(ring), wait_nr, false);
}

int io_uring_submit_and_get_events(struct io_uring *ring){
  return __io_uring_submit(ring, __io_uring_flush_sq(ring), 0, true);
}
</syntaxhighlight>

<tt>__io_uring_flush_sq()</tt> updates the ring tail with a release-semantics write, and <tt>__io_uring_submit()</tt> calls <tt>io_uring_enter()</tt> if necessary. When is it necessary? If <tt>wait_nr</tt> is non-zero, if <tt>getevents</tt> is <tt>true</tt>, if the ring was created with <tt>IORING_SETUP_IOPOLL</tt>, or if either of the <tt>IORING_SQ_CQ_OVERFLOW</tt> or <tt>IORING_SQ_TASKRUN</tt> SQ ring flags is set (these last three conditions are encapsulated by the internal function <tt>cq_ring_needs_enter()</tt>). Note that timeouts are implemented internally using an SQE, and thus will kick off work if the submission ring is full pursuant to acquiring the entry.
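The completion-side conditions just listed can be restated as a small predicate. This is a hypothetical paraphrase for illustration, not liburing's actual code: the function name and its flattened parameters are assumptions, though the flag constants are the real ones from <tt>liburing.h</tt>.

<syntaxhighlight lang="c">
#include <stdbool.h>
#include <liburing.h>

// `setup_flags` are the IORING_SETUP_* flags the ring was created with;
// `sq_flags` is the current value of the SQ ring's flags field.
static bool cq_side_needs_enter(unsigned setup_flags, unsigned sq_flags,
                                unsigned wait_nr, bool getevents){
  if(wait_nr || getevents){
    return true; // caller explicitly wants to reap or wait
  }
  if(setup_flags & IORING_SETUP_IOPOLL){
    return true; // completions are only found by polling in the kernel
  }
  // overflowed CQEs or pending task work must be flushed by the kernel
  return (sq_flags & (IORING_SQ_CQ_OVERFLOW | IORING_SQ_TASKRUN)) != 0;
}
</syntaxhighlight>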

When an action requires a <tt>struct</tt> as a parameter (examples would include <tt>IORING_OP_SENDMSG</tt> and its <tt>msghdr</tt>, and <tt>IORING_OP_TIMEOUT</tt> and its <tt>__kernel_timespec</tt>), that <tt>struct</tt> must remain valid until the SQE is submitted (though not until it is completed), i.e. functions like <tt>io_uring_prep_sendmsg(3)</tt> do not perform deep copies of their arguments.
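The lifetime rule is easy to get wrong with stack-allocated arguments. The sketch below shows one safe pattern for a timeout, assuming a ring without <tt>IORING_SETUP_SQPOLL</tt> on a kernel that consumes SQE arguments at submission time; <tt>arm_timeout</tt> is a hypothetical helper name.

<syntaxhighlight lang="c">
#include <errno.h>
#include <liburing.h>

// Arm a relative timeout of `ms` milliseconds. `ts` lives on this function's
// stack, so it must be consumed by the kernel before we return: hence the
// io_uring_submit() here rather than in the caller.
static int arm_timeout(struct io_uring *ring, unsigned ms, __u64 tag){
  struct __kernel_timespec ts = {
    .tv_sec = ms / 1000,
    .tv_nsec = (long long)(ms % 1000) * 1000000,
  };
  struct io_uring_sqe *sqe = io_uring_get_sqe(ring);
  if(sqe == NULL){
    return -EBUSY; // submission queue is full
  }
  io_uring_prep_timeout(sqe, &ts, 0, 0); // stores the pointer, not a copy
  io_uring_sqe_set_data64(sqe, tag);
  int ret = io_uring_submit(ring); // ts is read during submission
  return ret < 0 ? ret : 0;
}
</syntaxhighlight>

Had the function returned after <tt>io_uring_prep_timeout(3)</tt> and left submission to its caller, the SQE would have pointed at a dead stack frame. An expired timeout completes with a <tt>res</tt> of <tt>-ETIME</tt>.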

====Submission queue polling details====

Using <tt>IORING_SETUP_SQPOLL</tt> will, by default, create two threads in your process, one named <tt>iou-sqp-TID</tt>, and the other named <tt>iou-wrk-TID</tt>. The former is created the first time work is submitted. The latter is created whenever the uring is enabled (i.e. at creation time, unless <tt>IORING_SETUP_R_DISABLED</tt> is used). Submission queue poll threads can be shared between urings via <tt>IORING_SETUP_ATTACH_WQ</tt> together with the <tt>wq_fd</tt> field of <tt>io_uring_params</tt>.

These threads will be started with the same cgroup, CPU affinities, etc. as the calling thread, so watch out if you've already bound your thread to some CPU! You will now be competing with the poller threads for those CPUs' cycles, and if you're all on a single core, things will not go well.
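One way to keep the poller off your own core is to pin it elsewhere at creation time. The sketch below fills an <tt>io_uring_params</tt> accordingly, using the <tt>IORING_SETUP_SQ_AFF</tt> flag and the <tt>sq_thread_cpu</tt> field; the helper name is hypothetical, and choosing a sensible CPU is left to the caller.

<syntaxhighlight lang="c">
#include <string.h>
#include <liburing.h>

// Fill `p` so that the SQ poll thread is pinned to `cpu` and goes to sleep
// after `idle_ms` milliseconds without submissions.
static void sqpoll_params_pinned(struct io_uring_params *p, unsigned cpu,
                                 unsigned idle_ms){
  memset(p, 0, sizeof(*p));
  p->flags = IORING_SETUP_SQPOLL | IORING_SETUP_SQ_AFF;
  p->sq_thread_cpu = cpu;
  p->sq_thread_idle = idle_ms;
}
</syntaxhighlight>

The filled structure would then be passed to <tt>io_uring_queue_init_params(3)</tt> in place of a plain <tt>io_uring_queue_init(3)</tt>.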

====Submission queue flags====

{| class="wikitable" border="1"
! Flag !! Description
|-
| IORING_SQ_NEED_WAKEUP || The submission queue poll thread has gone to sleep, and must be woken before it will ingest further SQEs.
|-
| IORING_SQ_CQ_OVERFLOW || The completion queue overflowed. See below for details on CQ overflows.
|-
| IORING_SQ_TASKRUN ||
|-
|}

==Reaping completions==

|-
| <tt>IORING_CQE_F_NOTIF</tt> || Notification CQE for zero-copy sends
|-
| <tt>IORING_CQE_F_BUF_MORE</tt> || There will be more CQEs to the specified registered buffer
|-
|}

Completions can be detected by at least five different means:

* Checking the completion queue speculatively. This either means a periodic check, which will suffer latency up to the period, or a busy check, which will churn CPU, but is probably the lowest-latency portable solution. This is best accomplished with <tt>io_uring_peek_cqe(3)</tt>, perhaps in conjunction with <tt>io_uring_cq_ready(3)</tt> (neither involves a system call).
<syntaxhighlight lang="c">
int io_uring_peek_cqe(struct io_uring *ring, struct io_uring_cqe **cqe_ptr);
// ...
</syntaxhighlight>
* Using an [[eventfd]] together with <tt>io_uring_register_eventfd(3)</tt>. See below for the full API. This eventfd can be combined with e.g. regular [[epoll]].
* Polling the uring's file descriptor directly.
* Using processor-dependent memory watch instructions. On x86, there's [https://www.felixcloutier.com/x86/monitor MONITOR]+[https://www.felixcloutier.com/x86/mwait MWAIT], but they require you to be in ring 0, so you'd probably want [https://www.felixcloutier.com/x86/umonitor UMONITOR]+[https://www.felixcloutier.com/x86/umwait UMWAIT]. This ought allow a very low-latency wake that consumes very little power.

It's sometimes necessary to explicitly flush overflow tasks (or simply outstanding tasks in the presence of <tt>IORING_SETUP_DEFER_TASKRUN</tt>). This can be kicked off with <tt>io_uring_get_events(3)</tt>:

<syntaxhighlight lang="cpp">
int io_uring_get_events(struct io_uring *ring);
</syntaxhighlight>

Once the CQE can be returned to the system, do so with <tt>io_uring_cqe_seen(3)</tt>, or batch it with <tt>io_uring_cq_advance(3)</tt> (the former simply advances the completion ring by one entry, so CQEs are returned in ring order either way).

</syntaxhighlight>
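Batch reaping can be sketched with liburing's <tt>io_uring_for_each_cqe</tt> macro followed by a single <tt>io_uring_cq_advance(3)</tt>. The function name <tt>drain_cq</tt> and its callback parameter are hypothetical; neither call below enters the kernel.

<syntaxhighlight lang="c">
#include <liburing.h>

// Consume every CQE currently visible in the completion ring, invoking
// `handle` (if non-NULL) on each, then return them all to the kernel with
// one io_uring_cq_advance(). Returns the number of CQEs consumed.
static unsigned drain_cq(struct io_uring *ring,
                         void (*handle)(const struct io_uring_cqe *)){
  struct io_uring_cqe *cqe;
  unsigned head;
  unsigned seen = 0;
  io_uring_for_each_cqe(ring, head, cqe){
    if(handle){
      handle(cqe); // cqe is only valid until the advance below
    }
    ++seen;
  }
  io_uring_cq_advance(ring, seen);
  return seen;
}
</syntaxhighlight>

Anything the handler needs beyond the advance (the <tt>res</tt> and <tt>user_data</tt> fields, typically) has to be copied out, since the kernel is free to overwrite the slots once they've been returned.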

===Multishot===

It is possible for a single submission to result in multiple completions (e.g. <tt>io_uring_prep_multishot_accept(3)</tt>); this is known as ''multishot''. Errors on a multishot SQE will typically terminate the work request; a multishot SQE will set <tt>IORING_CQE_F_MORE</tt> high in generated CQEs so long as it remains active. A CQE without this flag indicates that the multishot is no longer operational, and must be reposted if further events are desired. Overflow of the completion queue will usually result in a drop of any firing multishot.
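The repost logic might look like the following sketch, which uses a multishot poll as the example operation. Both function names are hypothetical, and reusing the CQE's <tt>user_data</tt> as the tag for the reposted request is just one possible convention.

<syntaxhighlight lang="c">
#include <errno.h>
#include <poll.h>
#include <liburing.h>

// Arm a multishot poll for readability on `fd`; its CQEs will carry `tag`.
static int arm_multishot_poll(struct io_uring *ring, int fd, __u64 tag){
  struct io_uring_sqe *sqe = io_uring_get_sqe(ring);
  if(sqe == NULL){
    return -EBUSY; // submission queue is full
  }
  io_uring_prep_poll_multishot(sqe, fd, POLLIN);
  io_uring_sqe_set_data64(sqe, tag);
  int ret = io_uring_submit(ring);
  return ret < 0 ? ret : 0;
}

// Inspect a CQE generated by that poll. Returns 0 if the multishot is still
// armed, 1 if it had terminated and was reposted, or a negative errno.
static int rearm_if_needed(struct io_uring *ring,
                           const struct io_uring_cqe *cqe, int fd){
  if(cqe->flags & IORING_CQE_F_MORE){
    return 0; // more CQEs will follow from the same SQE
  }
  int ret = arm_multishot_poll(ring, fd, cqe->user_data);
  return ret < 0 ? ret : 1;
}
</syntaxhighlight>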

===Zerocopy===

Since kernel 6.0, <tt>IORING_OP_SEND_ZC</tt> may be driven via <tt>io_uring_prep_send_zc(3)</tt> or <tt>io_uring_prep_send_zc_fixed(3)</tt> (the latter requires registered buffers). This will attempt to eliminate an intermediate copy, at the expense of requiring supplied data to remain unchanged longer. Elimination of the copy is not guaranteed. Protocols which do not support zerocopy will generally immediately fail with a <tt>res</tt> field of <tt>-EOPNOTSUPP</tt>. A successful zerocopy operation will result in two CQEs:
* a first CQE with standard <tt>res</tt> semantics and the <tt>IORING_CQE_F_MORE</tt> flag set, and
* a second CQE with <tt>res</tt> set to 0 and the <tt>IORING_CQE_F_NOTIF</tt> flag set.

Both CQEs will have the same user data field.
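Since both CQEs share a user data value, a completion handler has to tell them apart by flags. A minimal sketch of that classification follows; the enum and function names are hypothetical, and it assumes that a first CQE lacking <tt>IORING_CQE_F_MORE</tt> (e.g. an immediate failure) will not be followed by a notification.

<syntaxhighlight lang="c">
#include <liburing.h>

enum zc_event {
  ZC_RESULT_NOTIF_PENDING, // send result; the buffer is still in use
  ZC_RESULT_FINAL,         // send result; no notification will follow
  ZC_NOTIF,                // notification; the buffer may now be reused
};

// Classify a CQE belonging to a zerocopy send.
static enum zc_event zc_classify(const struct io_uring_cqe *cqe){
  if(cqe->flags & IORING_CQE_F_NOTIF){
    return ZC_NOTIF;
  }
  if(cqe->flags & IORING_CQE_F_MORE){
    return ZC_RESULT_NOTIF_PENDING;
  }
  return ZC_RESULT_FINAL;
}
</syntaxhighlight>

Only on <tt>ZC_NOTIF</tt> or <tt>ZC_RESULT_FINAL</tt> ought the send buffer be modified or freed.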

==Corner cases==

===Single fd in multiple rings===

If logically equivalent SQEs are submitted to different rings, only one operation seems to take place when logical. For instance, if two rings have the same socket added for an <tt>accept(2)</tt>, a successful three-way [[TCP]] handshake will generate only one CQE, on one of the two rings. Which ring sees the event might be different from connection to connection.

===Multithreaded use===

urings (and especially the <tt>struct io_uring</tt> object of liburing) are [https://github.com/axboe/liburing/issues/850#issuecomment-1526163360 not intended for multithreaded use] (quoth Axboe, "don't share a ring between threads"), though they can be used in several threaded paradigms. A single thread submitting and some different single thread reaping is definitely supported. Descriptors can be sent among rings with <tt>IORING_OP_MSG_RING</tt>. Multiple submitters definitely must be serialized in userspace.

If an op will be completed via a kernel task, the thread that submitted that SQE must remain alive until the op's completion. It will otherwise error out with <tt>-ECANCELED</tt>. If you must submit the SQE from a thread which will die, consider creating it disabled (see <tt>IORING_SETUP_R_DISABLED</tt>), and enabling it from the thread which will reap the completion event using <tt>IORING_REGISTER_ENABLE_RINGS</tt> with <tt>io_uring_register(2)</tt>.

If you can restrict all submissions (<i>and</i> creation/enabling of the uring) to a single thread, use <tt>IORING_SETUP_SINGLE_ISSUER</tt> to enable kernel optimizations. Otherwise, consider using <tt>io_uring_register_ring_fd(3)</tt> (or <tt>io_uring_register(2)</tt> directly) to register the ring descriptor with the ring itself, and thus reduce the overhead of <tt>io_uring_enter(2)</tt>.

When <tt>IORING_SETUP_SQPOLL</tt> is used, the kernel poller thread is considered to have performed the submission, providing another possible way around this problem.

I am aware of no unannoying way to share some elements between threads in a single uring, while also monitoring distinct urings for each thread. I suppose you could poll on both.

===Coexistence with [[epoll]]/[[XDP]]===

If you want to monitor both an epoll and a uring in a single thread without busy waiting, you will run into problems. If you set a zero timeout, you're busy waiting; if you set a non-zero timeout, one is dependent on the other's readiness. There are three solutions:
* Add the epoll fd to your uring with <tt>IORING_OP_POLL_ADD</tt> (ideally multishot), and wait only for uring readiness. When you get a CQE for this submitted event, check the epoll.
* Poll on the uring's file descriptor directly for completion queue events.
* Register an [[eventfd]] with your uring with <tt>io_uring_register_eventfd(3)</tt>, add that to your epoll, and when you get <tt>POLLIN</tt> for this fd, check the completion ring.

The full API here is:

<tt>io_uring_register_eventfd_async(3)</tt> only posts to the eventfd for events that completed out-of-line. There is not necessarily a bijection between completion events and posts even with the regular form; multiple CQEs can post only a single event, and spurious posts can occur.

Similarly, [[XDP]]'s native means of notification is via <tt>poll(2)</tt>; XDP can be unified with uring using any of these methods.
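The eventfd approach can be sketched as below, assuming an existing ring and epoll descriptor. The function name <tt>uring_into_epoll</tt> is hypothetical; the liburing, eventfd, and epoll calls are the standard ones.

<syntaxhighlight lang="c">
#include <errno.h>
#include <sys/epoll.h>
#include <sys/eventfd.h>
#include <unistd.h>
#include <liburing.h>

// Create an eventfd, register it with `ring`, and add it to `epfd`.
// Returns the eventfd on success, or a negative errno.
static int uring_into_epoll(struct io_uring *ring, int epfd){
  int efd = eventfd(0, EFD_CLOEXEC | EFD_NONBLOCK);
  if(efd < 0){
    return -errno;
  }
  int ret = io_uring_register_eventfd(ring, efd);
  if(ret < 0){
    close(efd);
    return ret;
  }
  struct epoll_event ev = { .events = EPOLLIN, .data = { .fd = efd } };
  if(epoll_ctl(epfd, EPOLL_CTL_ADD, efd, &ev) < 0){
    ret = -errno;
    io_uring_unregister_eventfd(ring); // undo the registration
    close(efd);
    return ret;
  }
  return efd;
}
</syntaxhighlight>

When <tt>epoll_wait(2)</tt> reports the eventfd readable, read its eight-byte counter to reset it, and then drain the completion ring until empty rather than trusting the counter's value, since posts and CQEs do not correspond one-to-one.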

===Queue overflows===

The <tt>io_uring_prep_msg_ring(3)</tt> family of functions will generate a local CQE with the result code <tt>-EOVERFLOW</tt> if it is unable to fill a CQE on the target ring (when the submission and target ring are the same, I assume you get no CQE, but I'm not sure of this).

By default, the completion queue is twice the size of the submission queue, but it's still not difficult to overflow the completion queue. The size of the submission queue does not limit the number of iops in flight, but only the number that can be submitted at one time (the existence of multishot operations renders this moot).

CQ overflow can be detected by checking the submission queue's flags for <tt>IORING_SQ_CQ_OVERFLOW</tt>. The flag is eventually unset, though I'm not sure whether it's at the time of next submission or what '''FIXME'''. The <tt>io_uring_cq_has_overflow(3)</tt> function returns true if CQ overflow is waiting to be flushed to the queue:

<syntaxhighlight lang="cpp">
bool io_uring_cq_has_overflow(const struct io_uring *ring);
</syntaxhighlight>

==What's missing==

I'd like to see [[signalfd|signalfds]] and [[Pidfd|pidfds]] integrated for purposes of linked operations (you can read from them with the existing infrastructure, but you can't create them, and thus can't link their creation to other events).

Why is there no <tt>vmsplice(2)</tt> action? How about <tt>fork(2)</tt>/<tt>pthread_create(3)</tt> (or more generally <tt>clone(2)</tt>)? We could have coroutines with I/O.

It's kind of annoying that chains can't extend over submissions. If I've got a lot of data I need delivered in order, it seems I'm limited to a single chain in-flight at a time, or else I risk out-of-order delivery due to one chain aborting, followed by items from a subsequent chain succeeding. <tt>recv_multishot</tt> can help with this.

It seems that the obvious next step would be providing small snippets of arbitrary computation to be run in kernelspace, linked with SQEs. Perhaps [[eBPF]] would be a good starting place.

It would be awesome if I could indicate in each SQE some other uring to which the CQE ought be delivered, basically combining inter-ring messages and SQE submission. This would be the <i>ne plus ultra</i> IMHO of multithreaded uring (and be very much like my [[Libtorque|master's thesis]], not that I claim any inspiration/precedence whatsoever for uring).

==See also==
* [[Libtorque]]
* [[Fast UNIX Servers]]
* [[XDP]]

==External links==
* [https://kernel.dk/io_uring.pdf Efficient IO with io_uring], Axboe's original paper, and [https://github.com/axboe/liburing/wiki/io_uring-and-networking-in-2023 io_uring and networking in 2023]
* [https://unixism.net/loti/index.html Lord of the io_uring] by Shuveb Hussain
* Yarden Shafir's [https://windows-internals.com/ioring-vs-io_uring-a-comparison-of-windows-and-linux-implementations/ IoRing vs io_uring: A comparison of Windows and Linux implementations] and [https://windows-internals.com/i-o-rings-when-one-i-o-operation-is-not-enough/ I/O Rings—When One I/O is Not Enough]
* [https://developers.redhat.com/articles/2023/04/12/why-you-should-use-iouring-network-io why you should use io_uring for network io], Donald Hunter for Red Hat 2023-04-12
* [https://learn.microsoft.com/en-us/windows/win32/api/ioringapi/ ioringapi] at Microsoft
* Jakub Sitnicki of Cloudflare threw "[https://blog.cloudflare.com/missing-manuals-io_uring-worker-pool/ Missing Manuals: io_uring worker pool]" into the ring 2022-02-04
* "[https://github.com/axboe/liburing/issues/324 IORING_SETUP_SQPOLL_PERCPU status?]" liburing issue 324
* "[https://github.com/axboe/liburing/issues/345 IORING_SETUP_IOPOLL, IORING_SETUP_SQPOLL impact on TCP read event latency]" liburing issue 345
* and of course, mandatory LWN coverage:
** "[https://lwn.net/Articles/810414/ The Rapid Growth of io_uring]" 2020-01-24
** "[https://lwn.net/Articles/863071/ Descriptorless files for io_uring]" 2021-07-19
** "[https://lwn.net/Articles/879724/ Zero-copy network transmission with io_uring]" 2021-12-30
** "[https://lwn.net/Articles/1002371/ Process creation in io_uring]" 2024-12-20

[[CATEGORY: Networking]]

Latest revision as of 14:23, 26 December 2024

Introduced in 2019 (kernel 5.1) by Jens Axboe, io_uring (henceforth uring) is a system for providing the kernel with a schedule of system calls, and receiving the results as they're generated. Whereas epoll and kqueue support multiplexing, where you're told when you can usefully perform a system call using some set of filters, uring allows you to specify the system calls themselves (and dependencies between them), and execute the schedule at the kernel dataflow limit. It combines asynchronous I/O, system call polybatching, and flexible buffer management, and is IMHO the most substantial development in the Linux I/O model since Berkeley sockets (yes, I'm aware Berkeley sockets preceded Linux. Let's then say that it's the most substantial development in the UNIX I/O model to originate in Linux):

- Asynchronous I/O without the large copy overheads and restrictions of POSIX AIO

- System call batching/linking across distinct system calls

- Provide a buffer pool, and they'll be used as needed

- Both polling- and interrupt-driven I/O on the kernel side

The core system calls of uring are wrapped by the C API of liburing. Win32 added a very similar interface, IoRing, in 2020. In my opinion, uring ought largely displace epoll in new Linux code. FreeBSD seems to be sticking with kqueue, meaning code using uring won't run there, but neither did epoll (save through FreeBSD's somewhat dubious Linux compatibility layer). Both the system calls and liburing have fairly comprehensive man page coverage, including the io_uring.7 top-level page.

Rings

Central to every uring are two ringbuffers holding CQEs (Completion Queue Entries) and SQE (Submission Queue Entries) descriptors (as best I can tell, this terminology was borrowed from the NVMe specification; I've also seen it with Mellanox NICs). Note that SQEs are allocated externally to the SQ descriptor ring (the same is not true for CQEs). SQEs roughly correspond to a single system call: they are tagged with an operation type, and filled in with the values that would traditionally be supplied as arguments to the appropriate function. Userspace is provided references to SQEs on the SQE ring, which it fills in and submits. Submission operates up through a specified SQE, and thus all SQEs before it in the ring must also be ready to go (this is likely the main reason why the SQ holds descriptors to an external ring of SQEs: you can acquire SQEs, and then submit them out of order, but see IORING_SETUP_NO_SQARRAY). The kernel places results in the CQE ring. These rings are shared between kernel- and userspace. The rings must be distinct unless the kernel specifies the IORING_FEAT_SINGLE_MMAP feature (see below).
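
The indirection between the SQ descriptor ring and the SQE storage can be illustrated with a toy model in plain C. This is only a sketch: it makes no system calls, it is not the kernel's actual memory layout, and every name in it (toy_sqe, toy_sq, toy_acquire, toy_publish, toy_slot_at) is hypothetical. It shows SQE slots being handed out in one order and published to the index ring in another.

```c
#include <assert.h>
#include <stdint.h>

#define TOY_ENTRIES 4u /* hypothetical ring size */
static_assert((TOY_ENTRIES & (TOY_ENTRIES - 1)) == 0,
              "ring size must be a power of two so that masking works");

/* Stand-in for an SQE; the real one is 64 bytes with many more fields. */
struct toy_sqe { uint8_t opcode; uint64_t user_data; };

struct toy_sq {
    struct toy_sqe sqes[TOY_ENTRIES]; /* SQE storage, separate from the ring */
    uint32_t array[TOY_ENTRIES];      /* the ring itself: 32-bit indices into sqes[] */
    uint32_t tail;                    /* next ring position to publish into */
    uint32_t next_free;               /* next SQE slot not yet handed out */
};

/* Hand out the next SQE slot; returns its index, or UINT32_MAX if none remain. */
uint32_t toy_acquire(struct toy_sq *sq)
{
    if (sq->next_free >= TOY_ENTRIES)
        return UINT32_MAX;
    return sq->next_free++;
}

/* Publish an acquired slot: store its index at the tail, then advance the tail. */
void toy_publish(struct toy_sq *sq, uint32_t slot)
{
    sq->array[sq->tail & (TOY_ENTRIES - 1)] = slot;
    sq->tail++;
}

/* Consumer's view: which SQE slot sits at ring position pos. */
uint32_t toy_slot_at(const struct toy_sq *sq, uint32_t pos)
{
    return sq->array[pos & (TOY_ENTRIES - 1)];
}
```

Acquiring slots 0 and 1 and then publishing 1 before 0 leaves the ring reading 1, 0: the consumer sees submission order, not acquisition order. A real ring also needs memory barriers around the tail update, which this model omits.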

uring does not generally make use of errno. Synchronous functions return the negative error code as their result. Completion queue entries have the negated error code placed in their res fields.
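
A minimal sketch of handling this convention, assuming nothing beyond the C library: the helper names uring_res_failed and uring_res_strerror are hypothetical, not part of liburing. The same decoding applies whether the value came from a synchronous return or from a CQE's res field.

```c
#include <assert.h>
#include <errno.h>
#include <string.h>

static_assert(EAGAIN > 0, "errno constants are positive; uring negates them");

/* A result (return value or cqe->res) signals failure when it is negative. */
int uring_res_failed(int res)
{
    return res < 0;
}

/* Describe a result: negate it back to an errno value before strerror(3). */
const char *uring_res_strerror(int res)
{
    return res < 0 ? strerror(-res) : "success";
}
```

Note that a non-negative res is operation-specific (a byte count for reads and writes, a descriptor for accept, and so on), so "success" here means only "not an error".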

CQEs are usually 16 bytes, and SQEs are usually 64 bytes (but see IORING_SETUP_SQE128 and IORING_SETUP_CQE32 below). Either way, SQEs are allocated externally to the submission queue, which is merely a ring of 32-bit descriptors.

System calls

The liburing interface will be sufficient for most users, and it is possible to operate almost wholly without system calls when the system is busy. For the sake of completeness, here are the three system calls implementing the uring core (from the kernel's io_uring/io_uring.c):

int io_uring_setup(u32 entries, struct io_uring_params *p);

int io_uring_enter(unsigned fd, u32 to_submit, u32 min_complete, u32 flags, const void* argp, size_t argsz);

int io_uring_register(unsigned fd, unsigned opcode, void *arg, unsigned int nr_args);

Note that io_uring_enter(2) corresponds more closely to the io_uring_enter2(3) wrapper, and indeed io_uring_enter(3) is defined in terms of the latter (from liburing's src/syscall.c):

static inline int __sys_io_uring_enter2(unsigned int fd, unsigned int to_submit,

unsigned int min_complete, unsigned int flags, sigset_t *sig, size_t sz){

return (int) __do_syscall6(__NR_io_uring_enter, fd, to_submit, min_complete, flags, sig, sz);

}

static inline int __sys_io_uring_enter(unsigned int fd, unsigned int to_submit,

unsigned int min_complete, unsigned int flags, sigset_t *sig){

return __sys_io_uring_enter2(fd, to_submit, min_complete, flags, sig, _NSIG / 8);

}

io_uring_enter(2) can both submit SQEs and wait until some number of CQEs are available. Its flags parameter is a bitmask over:

| Flag | Description |

|---|---|

| IORING_ENTER_GETEVENTS | Wait until at least min_complete CQEs are ready before returning. |

| IORING_ENTER_SQ_WAKEUP | Wake up the kernel thread created when using IORING_SETUP_SQPOLL. |

| IORING_ENTER_SQ_WAIT | Wait until at least one entry is free in the submission ring before returning. |

| IORING_ENTER_EXT_ARG | (Since Linux 5.11) Interpret sig (argp in the prototype above) as a pointer to an io_uring_getevents_arg rather than a pointer to a sigset_t. This structure can specify both a sigset_t and a timeout: struct io_uring_getevents_arg { __u64 sigmask; __u32 sigmask_sz; __u32 pad; __u64 ts; }; Is ts nanoseconds from now? From the Epoch? Nope! ts is actually a pointer to a __kernel_timespec, passed to u64_to_user_ptr() in the kernel. One of the uglier aspects of uring. |

| IORING_ENTER_REGISTERED_RING | ring_fd is an offset into the registered ring pool rather than a normal file descriptor. |

| IORING_ENTER_ABS_TIMER | (Since Linux 6.12) The timeout argument in the io_uring_getevents_arg is an absolute time, using the registered clock. |
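
Since the ts field of io_uring_getevents_arg carries a pointer rather than a time value, filling it in takes a cast. The following is a sketch only, assuming kernel headers from 5.11 or later; fill_getevents_arg is a hypothetical helper name. It zeroes the whole structure rather than naming the padding field, and sets no signal mask.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>
#include <linux/io_uring.h>   /* struct io_uring_getevents_arg */
#include <linux/time_types.h> /* struct __kernel_timespec */

/* Prepare the extended argument used with IORING_ENTER_EXT_ARG. */
void fill_getevents_arg(struct io_uring_getevents_arg *arg,
                        const struct __kernel_timespec *timeout)
{
    assert(arg != NULL);
    memset(arg, 0, sizeof(*arg));           /* no sigmask, padding zeroed */
    arg->ts = (uint64_t)(uintptr_t)timeout; /* a user pointer, not a duration */
}
```

The filled structure would then be passed as argp to io_uring_enter(2), with argsz set to sizeof(struct io_uring_getevents_arg) and both IORING_ENTER_GETEVENTS and IORING_ENTER_EXT_ARG set in flags. The timespec must remain valid for the duration of the call.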

Setup

The io_uring_setup(2) system call returns a file descriptor, and accepts two parameters, u32 entries and struct io_uring_params *p:

int io_uring_setup(u32 entries, struct io_uring_params *p);

struct io_uring_params {

__u32 sq_entries; // number of SQEs, filled by kernel

__u32 cq_entries; // see IORING_SETUP_CQSIZE and IORING_SETUP_CLAMP

__u32 flags; // see "Flags" below

__u32 sq_thread_cpu; // see IORING_SETUP_SQ_AFF

__u32 sq_thread_idle; // see IORING_SETUP_SQPOLL

__u32 features; // see "Kernel features" below, filled by kernel

__u32 wq_fd; // see IORING_SETUP_ATTACH_WQ

__u32 resv[3]; // must be zero

struct io_sqring_offsets sq_off; // see "Ring structure" below, filled by kernel

struct io_cqring_offsets cq_off; // see "Ring structure" below, filled by kernel

};

resv must be zeroed out. In the absence of flags, the uring uses interrupt-driven I/O. Calling close(2) on the returned descriptor frees all resources associated with the uring.
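
For illustration, here is a sketch of calling io_uring_setup(2) directly through syscall(2), without liburing. It assumes kernel headers that provide linux/io_uring.h and __NR_io_uring_setup; uring_setup_raw is a hypothetical name. It zeroes the whole parameter block (so flags is 0 and resv is clear) and converts failure to the negated-errno convention uring uses elsewhere.

```c
#define _GNU_SOURCE
#include <assert.h>
#include <errno.h>
#include <string.h>
#include <unistd.h>
#include <sys/syscall.h>
#include <linux/io_uring.h>

/* Create a ring with default (interrupt-driven) behavior.
 * Returns the ring's file descriptor, or a negated errno value. */
int uring_setup_raw(unsigned entries, struct io_uring_params *p)
{
    assert(p != NULL);
    memset(p, 0, sizeof(*p)); /* no flags; resv must be zero */
    long fd = syscall(__NR_io_uring_setup, entries, p);
    return fd < 0 ? -errno : (int)fd;
}
```

On success the kernel has filled in sq_entries, cq_entries, features, and the two offset structures; close(2) on the returned descriptor releases the ring. Expect a negative result on kernels older than 5.1, or where io_uring is disabled by sysctl or a seccomp policy (common in containers).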

io_uring_setup(2) is wrapped by liburing's io_uring_queue_init(3) and io_uring_queue_init_params(3). When using these wrappers, io_uring_queue_exit(3) should be used to clean up.

int io_uring_queue_init(unsigned entries, struct io_uring *ring, unsigned flags);

int io_uring_queue_init_params(unsigned entries, struct io_uring *ring, struct io_uring_params *params);

void io_uring_queue_exit(struct io_uring *ring);

These wrappers operate on a struct io_uring:

struct io_uring {

struct io_uring_sq sq;

struct io_uring_cq cq;

unsigned flags;

int ring_fd;

unsigned features;

int enter_ring_fd;

__u8 int_flags;

__u8 pad[3];

unsigned pad2;

};

struct io_uring_sq {

unsigned *khead;

unsigned *ktail;

unsigned *kring_mask; // Deprecated: use `ring_mask`

unsigned *kring_entries; // Deprecated: use `ring_entries`

unsigned *kflags;

unsigned *kdropped;

unsigned *array;

struct io_uring_sqe *sqes;

unsigned sqe_head;

unsigned sqe_tail;

size_t ring_sz;

void *ring_ptr;

unsigned ring_mask;

unsigned ring_entries;

unsigned pad[2];

};

struct io_uring_cq {

unsigned *khead;

unsigned *ktail;

unsigned *kring_mask; // Deprecated: use `ring_mask`

unsigned *kring_entries; // Deprecated: use `ring_entries`

unsigned *kflags;

unsigned *koverflow;

struct io_uring_cqe *cqes;

size_t ring_sz;

void *ring_ptr;

unsigned ring_mask;

unsigned ring_entries;

unsigned pad[2];

};

io_uring_queue_init(3) takes an unsigned flags argument, which is passed as the flags field of io_uring_params. io_uring_queue_init_params(3) takes a struct io_uring_params* argument, which is passed through directly to io_uring_setup(2). It's best to avoid mixing the low-level API and that provided by liburing.

Ring structure

The details of ring structure are typically only relevant when using the low-level API. Two offset structs are used to prepare and control the three (or two, see IORING_FEAT_SINGLE_MMAP) backing memory maps.

struct io_sqring_offsets {

__u32 head;

__u32 tail;

__u32 ring_mask;

__u32 ring_entries;

__u32 flags; // IORING_SQ_*

__u32 dropped;

__u32 array;

__u32 resv1;

__u64 user_addr; // sqe mmap for IORING_SETUP_NO_MMAP

};

struct io_cqring_offsets {

__u32 head;

__u32 tail;

__u32 ring_mask;

__u32 ring_entries;

__u32 overflow;

__u32 cqes;

__u32 flags; // IORING_CQ_*

__u32 resv1;

__u64 user_addr; // sq/cq mmap for IORING_SETUP_NO_MMAP

};

As explained in the io_uring_setup(2) man page, the submission queue can be mapped thusly:

mmap(0, sq_off.array + sq_entries * sizeof(__u32), PROT_READ|PROT_WRITE,

MAP_SHARED|MAP_POPULATE, ring_fd, IORING_OFF_SQ_RING);

The submission queue contains the internal data structure followed by an array of SQE descriptors. These descriptors are 32 bits each no matter the architecture, implying that they are indices into the SQE map, not pointers. The SQEs are allocated:

mmap(0, sq_entries * sizeof(struct io_uring_sqe), PROT_READ|PROT_WRITE,

MAP_SHARED|MAP_POPULATE, ring_fd, IORING_OFF_SQES);

and finally the completion queue:

mmap(0, cq_off.cqes + cq_entries * sizeof(struct io_uring_cqe), PROT_READ|PROT_WRITE,

MAP_SHARED|MAP_POPULATE, ring_fd, IORING_OFF_CQ_RING);

Recall that when the kernel expresses IORING_FEAT_SINGLE_MMAP, the submission and completion queues can be allocated in one mmap(2) call.
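
The three mapping lengths above can be computed from the parameters the kernel fills in. This is a sketch under stated assumptions: ring_map_sizes and compute_ring_map_sizes are hypothetical names, and the default 64-byte SQE and 16-byte CQE sizes are assumed (IORING_SETUP_SQE128 and IORING_SETUP_CQE32 are not handled). With IORING_FEAT_SINGLE_MMAP, one mapping backs both rings, so its length must cover the larger of the two.

```c
#include <assert.h>
#include <stddef.h>
#include <linux/io_uring.h>

static_assert(sizeof(struct io_uring_sqe) == 64, "default SQE size assumed");
static_assert(sizeof(struct io_uring_cqe) == 16, "default CQE size assumed");

struct ring_map_sizes {
    size_t sq_ring;  /* length for the IORING_OFF_SQ_RING mapping */
    size_t cq_ring;  /* length for the IORING_OFF_CQ_RING mapping */
    size_t sqes;     /* length for the IORING_OFF_SQES mapping */
    int single_mmap; /* nonzero if SQ and CQ rings share one mapping */
};

/* Derive mmap(2) lengths from a params block filled in by io_uring_setup(2). */
struct ring_map_sizes compute_ring_map_sizes(const struct io_uring_params *p)
{
    struct ring_map_sizes s;
    s.sq_ring = p->sq_off.array + p->sq_entries * sizeof(__u32);
    s.cq_ring = p->cq_off.cqes + p->cq_entries * sizeof(struct io_uring_cqe);
    s.sqes = p->sq_entries * sizeof(struct io_uring_sqe);
    s.single_mmap = (p->features & IORING_FEAT_SINGLE_MMAP) != 0;
    if (s.single_mmap) {
        /* One shared mapping: size it for whichever ring ends later. */
        size_t both = s.sq_ring > s.cq_ring ? s.sq_ring : s.cq_ring;
        s.sq_ring = s.cq_ring = both;
    }
    return s;
}
```

In the single-mmap case, the caller would map once at IORING_OFF_SQ_RING with the shared length and use the same base pointer for the completion ring's offsets. The SQE array still needs its own mapping either way.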

Setup flags

The flags field is set up by the caller, and is a bitmask over:

| Flag | Kernel version | Description |

|---|---|---|

| IORING_SETUP_IOPOLL | 5.1 | Instruct the kernel to use polled (as opposed to interrupt-driven) I/O. This is intended for block devices, and requires that O_DIRECT was provided when the file descriptor was opened. |