Io uring

Revision as of 04:13, 8 May 2023

io_uring, introduced in 2019 (kernel 5.1) by Jens Axboe, is a system for providing the kernel with a schedule of system calls, and receiving the results as they're generated. It combines asynchronous I/O, system call polybatching, and flexible buffer management, and is IMHO the most substantial development in the Linux I/O model since Berkeley sockets (yes, I'm aware Berkeley sockets preceded Linux. Let's then say that it's the most substantial development in the UNIX I/O model to originate in Linux):

- Asynchronous I/O without the large copy overheads and restrictions of POSIX AIO (no more O_DIRECT, etc.)

- System call batching across distinct system calls (not just readv() and recvmmsg())

- Whole sequences of distinct system calls can be strung together

- Provide a buffer pool, and its buffers will be used as needed

The core system calls of io_uring (henceforth uring) are wrapped by the C API of liburing. Windows added a very similar interface, IoRing, in 2021. In my opinion, uring ought to largely displace epoll in new Linux code. FreeBSD seems to be sticking with kqueue, meaning code using uring won't run there, but neither did epoll (save through FreeBSD's somewhat dubious Linux compatibility layer).

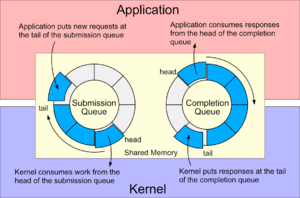

Rings

Central to every uring are two ringbuffers, one holding CQEs (Completion Queue Entries) and one holding descriptors for SQEs (Submission Queue Entries); as best I can tell, this terminology was used in the NVMe specification, and before that on the IBM AS/400. An SQE roughly corresponds to a single system call: it is tagged with an operation type, and filled in with the values that would traditionally be supplied as arguments to the appropriate function. Userspace acquires references to free SQEs, fills them in, and submits them. Submission operates up through a specified SQE, and thus all SQEs before it in the ring must also be ready to go. The kernel places results in the CQE ring. These rings are shared between kernel- and userspace. The rings must be mapped separately unless the kernel advertises the IORING_FEAT_SINGLE_MMAP feature (see below). Note that SQEs are allocated externally to the SQ descriptor ring.

It is possible for a single submission to result in multiple completions (e.g. io_uring_prep_multishot_accept(3)); this is known as multishot.

uring does not generally make use of errno. Synchronous functions return the negative error code as their result. Completion queue entries have the negated error code placed in their res fields.
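Because both conventions put a negated error code in an ordinary int, one helper can describe either a synchronous return value or a CQE's res. A minimal sketch; the name uring_result_str is made up for illustration and is not part of liburing:

```c
#include <errno.h>
#include <stdio.h>
#include <string.h>

/* Hypothetical helper: describe a uring result code, whether it came from a
 * synchronous call's return value or from a CQE's res field. Negative values
 * are negated errno codes; non-negative values are operation results. */
const char *uring_result_str(int res, char *buf, size_t len)
{
    if (res < 0)
        snprintf(buf, len, "error: %s", strerror(-res));
    else
        snprintf(buf, len, "ok: %d", res);
    return buf;
}
```

Note that errno itself is never consulted: the code is taken from the value handed back.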

CQEs are usually 16 bytes, and SQEs are usually 64 bytes (but see IORING_SETUP_SQE128 and IORING_SETUP_CQE32 below). Either way, SQEs are allocated externally to the submission queue, which is merely a ring of descriptors.
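The "ring of descriptors" arrangement can be modelled in plain userspace code. The following is a toy sketch, not the kernel's layout: the toy_ names, the two-field SQE, and the fixed size of 8 are all invented for illustration, and a real ring shared with the kernel needs acquire/release ordering on head and tail.

```c
#include <stddef.h>
#include <stdint.h>

#define RING_ENTRIES 8u                 /* must be a power of two */
#define RING_MASK (RING_ENTRIES - 1u)

/* Toy stand-in for an SQE; a real one is 64 (or 128) bytes. */
struct toy_sqe {
    uint8_t opcode;
    uint64_t user_data;
};

/* Toy submission side: the ring holds indices into sqes[], not SQEs. */
struct toy_sq {
    uint32_t head;                      /* advanced by the consumer (kernel) */
    uint32_t tail;                      /* advanced by the producer (userspace) */
    uint32_t array[RING_ENTRIES];       /* the descriptor ring */
    struct toy_sqe sqes[RING_ENTRIES];  /* entries live outside the ring */
};

/* Producer: returns 0 on success, -1 if the ring is full. */
int toy_sq_push(struct toy_sq *sq, uint8_t opcode, uint64_t user_data)
{
    if (sq->tail - sq->head == RING_ENTRIES)
        return -1;
    uint32_t idx = sq->tail & RING_MASK;
    sq->sqes[idx].opcode = opcode;
    sq->sqes[idx].user_data = user_data;
    sq->array[idx] = idx;
    sq->tail++;                         /* free-running counter; wrap is harmless */
    return 0;
}

/* Consumer: returns the oldest submitted entry, or NULL when empty. */
const struct toy_sqe *toy_sq_pop(struct toy_sq *sq)
{
    if (sq->head == sq->tail)
        return NULL;
    uint32_t idx = sq->array[sq->head & RING_MASK];
    sq->head++;
    return &sq->sqes[idx];
}
```

Head and tail are never reduced modulo the ring size; only the index derived from them is masked, which is why the entry count must be a power of two.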

Setup

The io_uring_setup(2) system call returns a file descriptor, and accepts two parameters, u32 entries and struct io_uring_params *p:

```c
int io_uring_setup(u32 entries, struct io_uring_params *p);

struct io_uring_params {
    __u32 sq_entries;
    __u32 cq_entries;
    __u32 flags;
    __u32 sq_thread_cpu;
    __u32 sq_thread_idle;
    __u32 features;
    __u32 wq_fd;
    __u32 resv[3];
    struct io_sqring_offsets sq_off;
    struct io_cqring_offsets cq_off;
};
```

It is wrapped by liburing's io_uring_queue_init(3) and io_uring_queue_init_params(3), which operate on a struct io_uring. When using these wrappers, io_uring_queue_exit(3) should be used to clean up. io_uring_queue_init(3) takes an unsigned flags argument, which is passed as the flags field of io_uring_params. io_uring_queue_init_params(3) takes a struct io_uring_params* argument, which is passed through directly to io_uring_setup(2).
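For reference, here is a sketch of calling the system call directly, without liburing. It assumes kernel headers recent enough to provide linux/io_uring.h and __NR_io_uring_setup; the wrapper name setup_ring is made up. On kernels or sandboxes where uring is unavailable it simply reports the error.

```c
#define _GNU_SOURCE
#include <errno.h>
#include <linux/io_uring.h>
#include <string.h>
#include <sys/syscall.h>
#include <unistd.h>

/* Hypothetical wrapper: create a ring with the raw system call. Returns the
 * ring's file descriptor, or a negated errno (following uring's own
 * convention) on failure. On success the kernel has filled in *p with the
 * actual sq_entries, cq_entries, features, and ring offsets. */
int setup_ring(unsigned entries, unsigned flags, struct io_uring_params *p)
{
    memset(p, 0, sizeof(*p));   /* fields we do not use must be zero */
    p->flags = flags;
    int fd = (int)syscall(__NR_io_uring_setup, entries, p);
    return fd < 0 ? -errno : fd;
}
```

A caller would then inspect p->features and mmap(2) the rings using p->sq_off and p->cq_off; liburing's wrappers do both on your behalf.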

Flags

| Flag | Kernel version | Description |

|---|---|---|

| IORING_SETUP_IOPOLL | 5.1 | Instruct the kernel to use polled (as opposed to interrupt-driven) I/O. This is intended for block devices, and requires that O_DIRECT was provided when the file descriptor was opened. |

| IORING_SETUP_SQPOLL | 5.1 (5.11 for full features) | Create a kernel thread to poll on the submission queue. If the submission queue is kept busy, this thread will reap SQEs without the need for a system call. If enough time goes by without new submissions, the kernel thread goes to sleep, and io_uring_enter(2) must be called to wake it. |

| IORING_SETUP_SQ_AFF | 5.1 | Only meaningful with IORING_SETUP_SQPOLL. The poll thread will be bound to the core specified in sq_thread_cpu. |

| IORING_SETUP_CQSIZE | 5.5 | Create the completion queue with cq_entries entries. This value must be greater than entries, and might be rounded up to the next power of 2. |

| IORING_SETUP_CLAMP | 5.6 | |

| IORING_SETUP_ATTACH_WQ | 5.6 | |

| IORING_SETUP_R_DISABLED | 5.10 | Start the uring disabled, requiring that it be enabled with io_uring_register(2). |

| IORING_SETUP_SUBMIT_ALL | 5.18 | Continue submitting SQEs from a batch even after one results in error. |

| IORING_SETUP_COOP_TASKRUN | 5.19 | |

| IORING_SETUP_TASKRUN_FLAG | 5.19 | |

| IORING_SETUP_SQE128 | 5.19 | Use 128-byte SQEs, necessary for NVMe passthroughs using IORING_OP_URING_CMD. |

| IORING_SETUP_CQE32 | 5.19 | Use 32-byte CQEs, necessary for NVMe passthroughs using IORING_OP_URING_CMD. |

| IORING_SETUP_SINGLE_ISSUER | 6.0 | Hint to the kernel that only a single thread will submit requests, allowing for optimizations. This thread must either be the thread which created the ring, or (iff IORING_SETUP_R_DISABLED is used) the thread which enables the ring. |

| IORING_SETUP_DEFER_TASKRUN | 6.1 | |

Kernel features

Various functionality was added to the kernel following the initial release of uring, and is thus not necessarily available on all kernels supporting the basic system calls. The __u32 features field of the io_uring_params parameter to io_uring_setup(2) is filled in with feature flags by the kernel.

| Feature | Kernel version | Description |

|---|---|---|

| IORING_FEAT_SINGLE_MMAP | 5.4 | A single mmap(2) can be used for both the submission and completion rings. |

| IORING_FEAT_NODROP | 5.5 (5.19 for full features) | |

| IORING_FEAT_SUBMIT_STABLE | 5.5 | |

| IORING_FEAT_RW_CUR_POS | 5.6 | |

| IORING_FEAT_CUR_PERSONALITY | 5.6 | |

| IORING_FEAT_FAST_POLL | 5.7 | |

| IORING_FEAT_POLL_32BITS | 5.9 | |

| IORING_FEAT_SQPOLL_NONFIXED | 5.11 | |

| IORING_FEAT_ENTER_EXT_ARG | 5.11 | |

| IORING_FEAT_NATIVE_WORKERS | 5.12 | |

| IORING_FEAT_RSRC_TAGS | 5.13 | |

| IORING_FEAT_CQE_SKIP | 5.17 | |

| IORING_FEAT_LINKED_FILE | 5.17 | |
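A program that depends on some of these features can check the features word once after setup. The sketch below is illustrative only: first_missing_feature is a made-up name, and the bit values are repeated from linux/io_uring.h as I understand them so that the example compiles without the header (real code should include the header instead).

```c
#include <stddef.h>
#include <stdint.h>

/* Assumed to match <linux/io_uring.h>; defined only if the header is absent. */
#ifndef IORING_FEAT_SINGLE_MMAP
#define IORING_FEAT_SINGLE_MMAP (1U << 0)
#endif
#ifndef IORING_FEAT_NODROP
#define IORING_FEAT_NODROP (1U << 1)
#endif
#ifndef IORING_FEAT_FAST_POLL
#define IORING_FEAT_FAST_POLL (1U << 5)
#endif
#ifndef IORING_FEAT_EXT_ARG
#define IORING_FEAT_EXT_ARG (1U << 8)
#endif

struct feat_name {
    uint32_t bit;
    const char *name;
};

static const struct feat_name feat_names[] = {
    { IORING_FEAT_SINGLE_MMAP, "IORING_FEAT_SINGLE_MMAP" },
    { IORING_FEAT_NODROP,      "IORING_FEAT_NODROP" },
    { IORING_FEAT_FAST_POLL,   "IORING_FEAT_FAST_POLL" },
    { IORING_FEAT_EXT_ARG,     "IORING_FEAT_EXT_ARG" },
};

/* Hypothetical helper: given the features word the kernel filled in and the
 * set this program needs, return the name of the first missing feature, or
 * NULL if everything required is present. */
const char *first_missing_feature(uint32_t features, uint32_t required)
{
    uint32_t missing = required & ~features;
    for (size_t i = 0; i < sizeof(feat_names) / sizeof(feat_names[0]); i++)
        if (missing & feat_names[i].bit)
            return feat_names[i].name;
    return NULL;
}
```

The features argument would come from the features field of the io_uring_params passed to io_uring_setup(2) or io_uring_queue_init_params(3).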

Registered resources

Buffers

Since Linux 5.7, user-allocated memory can be provided to uring in groups of buffers (each with a group ID), in which each buffer has its own ID. This is done with the io_uring_prep_provide_buffers(3) call, operating on an SQE. Since 5.19, the "ringmapped buffers" technique (io_uring_register_buf_ring(3)) allows these buffers to be used much more effectively.

Registered files

Direct descriptors

Direct descriptors are integers corresponding to private file handle structures internal to the uring, and can be used anywhere uring wants a file descriptor through the IOSQE_FIXED_FILE flag. They have less overhead than true file descriptors, which use structures shared among threads.

Submitting work

Submitting work consists of four steps:

- Acquiring free SQEs

- Filling in those SQEs

- Placing those SQEs at the tail of the submission queue

- Submitting the work, possibly using a system call
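The four steps can be sketched against the raw interface, without liburing, by pushing a single IORING_OP_NOP through a fresh ring and reading back its completion. This is a sketch under assumptions rather than a reference implementation: it assumes linux/io_uring.h is installed, uses GCC/Clang __atomic builtins, and the names map_ring and nop_roundtrip are made up. With liburing, the same flow would use io_uring_get_sqe(3), io_uring_prep_nop(3), and io_uring_submit(3).

```c
#define _GNU_SOURCE
#include <errno.h>
#include <linux/io_uring.h>
#include <string.h>
#include <sys/mman.h>
#include <sys/syscall.h>
#include <unistd.h>

/* Map one region of the ring fd; returns NULL on failure. */
static void *map_ring(int fd, size_t len, off_t offset)
{
    void *m = mmap(NULL, len, PROT_READ | PROT_WRITE, MAP_SHARED, fd, offset);
    return m == MAP_FAILED ? NULL : m;
}

/* Submit one NOP and reap its completion. Returns the CQE's res on success
 * (storing its user_data in *tag_out), or a negated errno on failure. */
int nop_roundtrip(unsigned long long tag, unsigned long long *tag_out)
{
    struct io_uring_params p;
    memset(&p, 0, sizeof(p));
    int fd = (int)syscall(__NR_io_uring_setup, 4, &p);
    if (fd < 0)
        return -errno;

    size_t sq_len = p.sq_off.array + p.sq_entries * sizeof(__u32);
    size_t cq_len = p.cq_off.cqes + p.cq_entries * sizeof(struct io_uring_cqe);
    size_t sqes_len = p.sq_entries * sizeof(struct io_uring_sqe);
    unsigned char *sq = map_ring(fd, sq_len, IORING_OFF_SQ_RING);
    unsigned char *cq = map_ring(fd, cq_len, IORING_OFF_CQ_RING);
    struct io_uring_sqe *sqes = map_ring(fd, sqes_len, IORING_OFF_SQES);
    int ret = -ENOMEM;

    if (sq && cq && sqes) {
        unsigned *sq_tail = (unsigned *)(sq + p.sq_off.tail);
        unsigned sq_mask = *(unsigned *)(sq + p.sq_off.ring_mask);
        unsigned *sq_array = (unsigned *)(sq + p.sq_off.array);
        unsigned *cq_head = (unsigned *)(cq + p.cq_off.head);
        unsigned *cq_tail = (unsigned *)(cq + p.cq_off.tail);
        unsigned cq_mask = *(unsigned *)(cq + p.cq_off.ring_mask);
        struct io_uring_cqe *cqes = (struct io_uring_cqe *)(cq + p.cq_off.cqes);

        /* Steps 1 and 2: acquire the SQE at the tail and fill it in. */
        unsigned tail = *sq_tail;           /* we are the only producer */
        unsigned idx = tail & sq_mask;
        memset(&sqes[idx], 0, sizeof(sqes[idx]));
        sqes[idx].opcode = IORING_OP_NOP;
        sqes[idx].user_data = tag;

        /* Step 3: put its index in the descriptor ring, publish the tail. */
        sq_array[idx] = idx;
        __atomic_store_n(sq_tail, tail + 1, __ATOMIC_RELEASE);

        /* Step 4: submit one SQE and sleep until at least one CQE exists. */
        if (syscall(__NR_io_uring_enter, fd, 1, 1, IORING_ENTER_GETEVENTS,
                    NULL, (size_t)0) < 0) {
            ret = -errno;
        } else {
            unsigned head = *cq_head;
            if (head == __atomic_load_n(cq_tail, __ATOMIC_ACQUIRE)) {
                ret = -EIO;                 /* no CQE despite waiting */
            } else {
                ret = cqes[head & cq_mask].res;
                *tag_out = cqes[head & cq_mask].user_data;
                /* Hand the CQE slot back to the kernel. */
                __atomic_store_n(cq_head, head + 1, __ATOMIC_RELEASE);
            }
        }
    }

    if (sq)
        munmap(sq, sq_len);
    if (cq)
        munmap(cq, cq_len);
    if (sqes)
        munmap(sqes, sqes_len);
    close(fd);
    return ret;
}
```

A NOP completes with res equal to 0, and the user_data written into the SQE comes back unchanged in the CQE, which is how completions are matched to submissions.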

Reaping completions

Submitted actions result in completion events:

```c
struct io_uring_cqe {
    __u64 user_data; /* sqe->data submission passed back */
    __s32 res;       /* result code for this event */
    __u32 flags;

    /*
     * If the ring is initialized with IORING_SETUP_CQE32, then this field
     * contains 16-bytes of padding, doubling the size of the CQE.
     */
    __u64 big_cqe[];
};
```

Recall that rather than using errno, errors are returned as their negative value in res.

| Flags | Description |

|---|---|

| IORING_CQE_F_BUFFER | |

| IORING_CQE_F_MORE | |

| IORING_CQE_F_SOCK_NONEMPTY | |

| IORING_CQE_F_NOTIF | |
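Two of these flags are commonly inspected when reaping: IORING_CQE_F_BUFFER signals that the upper 16 bits of flags carry the ID of the provided buffer that was used, and IORING_CQE_F_MORE signals that the originating request (e.g. a multishot one) will post further CQEs. A small sketch of decoding them follows. The helper names and CQE_BUFFER_SHIFT are invented here; the values are repeated from linux/io_uring.h as I understand them, with CQE_BUFFER_SHIFT standing in for the header's IORING_CQE_BUFFER_SHIFT.

```c
#include <stdint.h>

/* Assumed to match <linux/io_uring.h>; defined only if the header is absent. */
#ifndef IORING_CQE_F_BUFFER
#define IORING_CQE_F_BUFFER (1U << 0)
#endif
#ifndef IORING_CQE_F_MORE
#define IORING_CQE_F_MORE (1U << 1)
#endif
/* Local stand-in for IORING_CQE_BUFFER_SHIFT. */
#define CQE_BUFFER_SHIFT 16

/* Returns the provided-buffer ID carried by a CQE's flags, or -1 if the
 * operation did not consume a provided buffer. */
int cqe_buffer_id(uint32_t flags)
{
    if (!(flags & IORING_CQE_F_BUFFER))
        return -1;
    return (int)(flags >> CQE_BUFFER_SHIFT);
}

/* Nonzero if the request that produced this CQE will post more CQEs. */
int cqe_more_coming(uint32_t flags)
{
    return (flags & IORING_CQE_F_MORE) != 0;
}
```

When cqe_more_coming() returns zero for a multishot request, the request has terminated and must be resubmitted if further events are wanted.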

Completions can be detected by four different means:

- Checking the completion queue speculatively. This either means a periodic check, which will suffer latency up to the period, or a busy check, which will churn CPU, but is probably the lowest-latency solution. This is best accomplished with io_uring_peek_cqe(3), perhaps in conjunction with io_uring_cq_ready(3) (neither involves a system call).

```c
int io_uring_peek_cqe(struct io_uring *ring, struct io_uring_cqe **cqe_ptr);
unsigned io_uring_cq_ready(const struct io_uring *ring);
```

- Waiting on the ring via kernel sleep. Use io_uring_wait_cqe(3) (unbounded sleep), io_uring_wait_cqe_timeout(3) (bounded sleep), or io_uring_wait_cqes(3) (bounded sleep with atomic signal blocking and batch receive). These do not require a system call if they can be immediately satisfied.

```c
int io_uring_wait_cqe(struct io_uring *ring, struct io_uring_cqe **cqe_ptr);
int io_uring_wait_cqe_nr(struct io_uring *ring, struct io_uring_cqe **cqe_ptr, unsigned wait_nr);
int io_uring_wait_cqe_timeout(struct io_uring *ring, struct io_uring_cqe **cqe_ptr,
                              struct __kernel_timespec *ts);
int io_uring_wait_cqes(struct io_uring *ring, struct io_uring_cqe **cqe_ptr, unsigned wait_nr,
                       struct __kernel_timespec *ts, sigset_t *sigmask);
int io_uring_peek_cqe(struct io_uring *ring, struct io_uring_cqe **cqe_ptr);
```

- Using an eventfd together with io_uring_register_eventfd(3). See below for the full API. This eventfd can be combined with e.g. regular epoll.

- Using processor-dependent memory watch instructions. On x86, there's MONITOR+MWAIT, but they require you to be in ring 0, so you'd probably want UMONITOR/UMWAIT. This ought to allow a very low-latency wake that consumes very little power.

Once the CQE can be returned to the system, do so with io_uring_cqe_seen(3).

Corner cases

Single fd in multiple rings

If logically equivalent SQEs are submitted to different rings, events seem to be returned on only one ring. For instance, if two rings have the same socket added for an `accept()`, a successful three-way TCP handshake will generate only one CQE, on one of the two rings.

Multithreaded use

urings (and especially the struct io_uring object of liburing) are not intended for multithreaded use (quoth Axboe, "don't share a ring between threads"), though they can be used in several threaded paradigms. A single thread submitting and a single thread reaping is definitely supported. Descriptors can be sent among rings with IORING_OP_MSG_RING. Multiple submitters definitely must be serialized in userspace.

Coexistence with epoll/XDP

If you want to monitor both an epoll and a uring in a single thread without busy waiting, you will run into problems. You can't directly poll() a uring for CQE readiness, so it can't be added to your epoll watchset. If you set a zero timeout, you're busy waiting; if you set a non-zero timeout, one is dependent on the other's readiness. There are two solutions:

- Add the epoll fd to your uring with IORING_OP_POLL_ADD, and wait only for uring readiness. When you get a CQE for this submitted event, check the epoll.

- Register an eventfd with your uring with io_uring_register_eventfd(3), add that to your epoll, and when you get POLLIN for this fd, check the completion ring.

The full API here is:

```c
int io_uring_register_eventfd(struct io_uring *ring, int fd);
int io_uring_register_eventfd_async(struct io_uring *ring, int fd);
int io_uring_unregister_eventfd(struct io_uring *ring);
```

io_uring_register_eventfd_async(3) only posts to the eventfd for events that completed out-of-line. There is not necessarily a bijection between completion events and posts even with the regular form; multiple CQEs can post only a single event, and spurious posts can occur.
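The epoll side of the eventfd approach needs nothing from liburing, so it can be sketched on its own. In the code below, make_watch and wait_for_posts are made-up names; in a real program the eventfd would additionally be handed to io_uring_register_eventfd(3), and the kernel rather than the caller would do the posting.

```c
#include <stdint.h>
#include <sys/epoll.h>
#include <sys/eventfd.h>
#include <unistd.h>

/* Create an eventfd and an epoll instance watching it for readability.
 * Returns 0 on success, -1 on failure. */
int make_watch(int *efd, int *epfd)
{
    *efd = eventfd(0, EFD_CLOEXEC | EFD_NONBLOCK);
    if (*efd < 0)
        return -1;
    *epfd = epoll_create1(EPOLL_CLOEXEC);
    if (*epfd < 0) {
        close(*efd);
        return -1;
    }
    struct epoll_event ev = { .events = EPOLLIN, .data.fd = *efd };
    if (epoll_ctl(*epfd, EPOLL_CTL_ADD, *efd, &ev) < 0) {
        close(*epfd);
        close(*efd);
        return -1;
    }
    return 0;
}

/* Wait up to timeout_ms for the eventfd to become readable. Returns the
 * accumulated eventfd counter, 0 on timeout, or -1 on error. Reading the
 * eventfd resets its counter to zero. */
int64_t wait_for_posts(int epfd, int efd, int timeout_ms)
{
    struct epoll_event ev;
    int n = epoll_wait(epfd, &ev, 1, timeout_ms);
    if (n <= 0)
        return n;
    uint64_t count;
    if (read(efd, &count, sizeof(count)) != (ssize_t)sizeof(count))
        return -1;
    return (int64_t)count;
}
```

Since posts and CQEs are not in bijection, the counter should be treated only as a wakeup: after each wake, drain the completion queue with io_uring_peek_cqe(3) until it reports no more entries.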

Similarly, XDP's native means of notification is via poll(2); XDP can be unified with uring using either of these two methods.

External links

- Efficient IO with io_uring, Axboe's original paper

- Lord of the io_uring

- Yarden Shafir's IoRing vs io_uring: A comparison of Windows and Linux implementations and I/O Rings—When One I/O is Not Enough

- io_uring and networking in 2023, more Axboe

- Why you should use io_uring for network I/O, Donald Hunter, 2023-04-12

- ioringapi at Microsoft

- Jakub Sitnicki of Cloudflare threw "Missing Manuals: io_uring worker pool" into the ring 2022-02-04