
XDP: Difference between revisions


| (60 intermediate revisions by the same user not shown) | |||

| Line 1: | Line 1: | ||

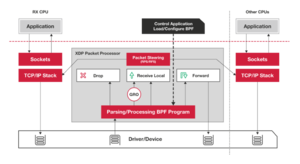

[[File:Xdp-packet-processing-1024x560.png|thumb|frame|XDP summary diagram]] | [[File:Xdp-packet-processing-1024x560.png|thumb|frame|XDP summary diagram]] | ||

In the beginning, there were applications slinging streams through the packetizing [https://en.wikipedia.org/wiki/Interface_Message_Processor Honeywell DDP-516] IMPs, and it was good. Then multiple applications needed the network, and needed it in different ways. Then the networks needed walls of fire, and traffic which was shaped. Some called for the Labeling of Multiple Protocols, and others called for timestamps, and still others wanted to SNAT and DNAT and also to masquerade. And yea, IP was fwadm'd, and then chained, and then tabled, and soon arps and bridges too were tabled. And behold now tables of "nf" and "x". And [[Van Jacobson Channels|Van Jacobson]] looked [[TCP|once more]] upon the land, and frowned, and shed a single tear which became a Channel. And then every ten years or so, we celebrate this by rediscovering Van Jacobson channels under a new name, these days complete with logos and corporate sponsorship. | In the beginning, there were applications slinging streams through the packetizing [https://en.wikipedia.org/wiki/Interface_Message_Processor Honeywell DDP-516] IMPs, and it was good. Then multiple applications needed the network, and needed it in different ways. Then the networks needed walls of fire, and traffic which was shaped. Some called for the [https://en.wikipedia.org/wiki/Multiprotocol_Label_Switching Labeling of Multiple Protocols], and others called for timestamps, and still others wanted to SNAT and DNAT and also to masquerade. And yea, IP was fwadm'd, and then chained, and then tabled, and soon arps and bridges too were tabled. And behold now tables of "nf" and "x". And [[Van Jacobson Channels|Van Jacobson]] looked [[TCP|once more]] upon the land, and frowned, and shed a single tear which became a Channel. And then every ten years or so, we celebrate this by rediscovering Van Jacobson channels under a new name, these days complete with logos and corporate sponsorship. | ||

Most recently, they were rediscovered under the name [[DPDK]], but the masters of the Linux kernel eschewed | Most recently, they were rediscovered under the name [[DPDK]], but the masters of the Linux kernel eschewed this, and instead rediscovered them under the name XDP, the eXpress Data Path, and eXpressed it using [[eBPF]] programs, sometimes even [https://www.youtube.com/watch?v=rog8ou-ZepE unto the eXtreme]. XDP was added to Linux 4.8 and has been heavily developed since then, and is often seen together with [[Io_uring|iouring]], especially with the new <tt>AF_XDP</tt> family of sockets. | ||

XDP is used to bypass large chunks of the Linux networking stack (including all allocations, particularly <tt>alloc_skb()</tt>), especially when dropping packets or shuffling them between NICs. | XDP is used to bypass large chunks of the Linux networking stack (including all allocations, particularly <tt>alloc_skb()</tt>), especially when dropping packets or shuffling them between NICs. | ||

==Differences from [[DPDK]]== | |||

DPDK is a mechanism for full kernel bypass on Linux and FreeBSD. DPDK programs run in userspace atop its [https://doc.dpdk.org/guides-19.11/prog_guide/env_abstraction_layer.html#environment-abstraction-layer Environment Abstraction Layer], and reserve entire NICs. XDP programs live in the kernel, and bind to interfaces created by standard kernel drivers. XDP runs only on the receive path, and is driven by the standard [[NAPI]] softirq, whereas DPDK is always poll-driven; polling keeps the CPU constantly engaged, but can yield lower latency than the interrupt-initiated NAPI. XDP selectively forwards traffic to AF_XDP userspace sockets, leaving the remainder unmolested; this untouched traffic will be delivered via the standard mechanisms, and traverse e.g. [[nftables]]. DPDK uses multiple producer/multiple consumer rings, whereas XDP is built around single producer/single consumer rings. | |||

DPDK has been around longer, is more mature, and (as of early 2023) has much more complete documentation. | |||

==Using XDP== | ==Using XDP== | ||

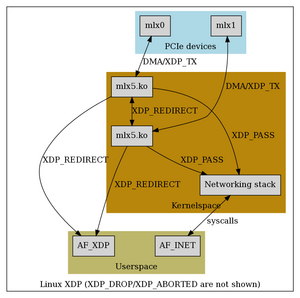

[[File:Xdp.png|right|thumb|XDP dataflow]] | [[File:Xdp.png|right|thumb|XDP dataflow]] | ||

An XDP program is an eBPF program invoked for each received packet which runs either: | An XDP program is an eBPF program invoked for each received packet which runs either: | ||

* early in the kernel receive path, with no driver support (Generic Mode) | * early in the kernel receive path, with no driver support (Generic Mode, <tt>XDP_MODE_SKB</tt>) | ||

* from the driver context following DMA sync, without any kernel networking stack touches (requires driver support) (Native mode) | * from the driver context following DMA sync, without any kernel networking stack touches (requires driver support) (Native mode, <tt>XDP_MODE_NATIVE</tt>) | ||

* on the NIC itself, without touching the CPU (requires hardware+driver support) (Offloaded mode) | * on the NIC itself, without touching the CPU (requires hardware+driver support) (Offloaded mode, <tt>XDP_MODE_HW</tt>) | ||

Offloaded mode can theoretically beat native mode, which will usually beat generic mode. | Offloaded mode can theoretically beat native mode, which will usually beat generic mode. | ||

XDP programs are specific to a NIC, and most easily attached using <tt>xdp-loader</tt>. Lower-level functionality is available from libxdp, and below that is pure eBPF machinery. Multiple XDP programs can be stacked on a single interface as of Linux 5.6 (multiprog requires BTF type information, created by using LLVM 10+ and enabling debug information). XDP is only applied to the receive path. | XDP programs are specific to a NIC, and most easily attached using <tt>xdp-loader</tt>. Lower-level functionality is available from libxdp, and below that is pure eBPF machinery. Multiple XDP programs can be stacked on a single interface as of Linux 5.6 (multiprog requires BTF type information, created by using LLVM 10+ and enabling debug information). XDP is only applied to the receive path. | ||

Note that <tt>XDP_MODE_NATIVE</tt> etc. are libxdp terminology; the kernel works in terms of <tt>XDP_FLAGS_SKB_MODE</tt>, <tt>XDP_FLAGS_DRV_MODE</tt>, and <tt>XDP_FLAGS_HW_MODE</tt>. | |||
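The correspondence between the two vocabularies can be written down as a small mapping. This is only a sketch: the names below carry a <tt>SKETCH_</tt> prefix because they are local stand-ins for libxdp's <tt>enum xdp_attach_mode</tt> and the kernel's <tt>XDP_FLAGS_*</tt> bits (from <tt>linux/if_link.h</tt>), redefined so the example builds without either header, and the helper function is hypothetical.

```c
#include <stdint.h>

/* Stand-ins for libxdp's attach modes (XDP_MODE_UNSPEC and friends). */
enum sketch_attach_mode {
    SKETCH_MODE_UNSPEC = 0,
    SKETCH_MODE_NATIVE,
    SKETCH_MODE_SKB,
    SKETCH_MODE_HW,
};

/* Stand-ins for the kernel's XDP_FLAGS_*_MODE bits. */
#define SKETCH_FLAGS_SKB_MODE (1U << 1)
#define SKETCH_FLAGS_DRV_MODE (1U << 2)
#define SKETCH_FLAGS_HW_MODE  (1U << 3)

/* Hypothetical helper: translate a libxdp-style mode into the kernel flag. */
static uint32_t sketch_mode_to_flags(enum sketch_attach_mode mode)
{
    switch (mode) {
    case SKETCH_MODE_SKB:    return SKETCH_FLAGS_SKB_MODE;  /* generic */
    case SKETCH_MODE_NATIVE: return SKETCH_FLAGS_DRV_MODE;  /* native  */
    case SKETCH_MODE_HW:     return SKETCH_FLAGS_HW_MODE;   /* offload */
    default:                 return 0;  /* no mode bit requested */
    }
}
```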

An XDP program can modify the packet data and perform limited resizes of the packet. It receives an [https://elixir.bootlin.com/linux/latest/C/ident/xdp_md <tt>xdp_md*</tt>] and returns an action code as <tt>int</tt>: | An XDP program can modify the packet data and perform limited resizes of the packet. It receives an [https://elixir.bootlin.com/linux/latest/C/ident/xdp_md <tt>xdp_md*</tt>] and returns an action code as <tt>int</tt>: | ||

* <tt>XDP_PASS</tt>: the packet continues through the network stack | * <tt>XDP_PASS</tt>: the packet continues through the network stack | ||

* <tt>XDP_DROP</tt>: the packet is dropped | * <tt>XDP_DROP</tt>: the packet is dropped | ||

* <tt>XDP_ABORTED</tt>: indicate an error (the packet is dropped) | * <tt>XDP_ABORTED</tt>: indicate an error (the packet is dropped), trigger <tt>xdp:xdp_exception</tt> tracepoint | ||

* <tt>XDP_TX</tt>: emit the packet back out the interface on which it was received | * <tt>XDP_TX</tt>: emit the packet back out the interface on which it was received | ||

* <tt>XDP_REDIRECT</tt>: send the packet to another NIC or an <tt>AF_XDP</tt> socket | * <tt>XDP_REDIRECT</tt>: send the packet to another NIC or an <tt>AF_XDP</tt> socket | ||

Think of <tt>XDP_ABORTED</tt> as returning -1 from the eBPF program; it's meant to indicate an internal error. Theoretically, the packet could continue through the stack (as it would have if no XDP program had been running), but XDP uses "fail-close" semantics. Edits to the packet are carried through all XDP paths. | Think of <tt>XDP_ABORTED</tt> as returning -1 from the eBPF program; it's meant to indicate an internal error. Theoretically, the packet could continue through the stack (as it would have if no XDP program had been running, or <tt>XDP_PASS</tt> was returned), but XDP uses "fail-close" semantics. Edits to the packet are carried through all XDP paths. Note that everything up through and including <tt>XDP_TX</tt> can be represented in two bits; this will be important later when passing flags to bpf helpers. | ||

<tt>xdp-loader status</tt> will list NICs and show details regarding any attached XDP programs. <tt>ethtool -S</tt> shows XDP statistics. <tt>ip link</tt> will also show a summary of devices' XDP chains. <tt>xdp_dispatcher</tt> is a metaprogram loaded by <tt>xdp-loader</tt> to support chaining multiple XDP programs; it will be present whenever programs were loaded using <tt>xdp-loader</tt>. | <tt>xdp-loader status</tt> will list NICs and show details regarding any attached XDP programs. <tt>ethtool -S</tt> shows XDP statistics. <tt>ip link</tt> will also show a summary of devices' XDP chains. <tt>xdp_dispatcher</tt> is a metaprogram loaded by <tt>xdp-loader</tt> to support chaining multiple XDP programs; it will be present whenever programs were loaded using <tt>xdp-loader</tt>. | ||

Note that XDP runs prior to the packet socket copies which drive <tt>tcpdump</tt>, and thus packets dropped or redirected in XDP will not show up there. It's better to use <tt>xdpdump</tt> when interacting with XDP programs; this also shows the XDP decision made for each packet. | Note that XDP runs prior to the packet socket copies which drive <tt>tcpdump</tt>, and thus packets dropped or redirected in XDP will not show up there. It's better to use <tt>xdpdump</tt> when interacting with XDP programs; this also shows the XDP decision made for each packet. Similarly, packets hit XDP before being evaluated by [[nftables]], so XDP can act as a means of firewall bypass. Note also that locally-generated self-directed packets do traverse XDP, but always via the loopback device. | ||

===Writing XDP programs=== | |||

For XDP programs written in [[C]], use <tt>clang</tt> with <tt>-target bpf -g</tt> (as noted above, use <tt>-g</tt> to get BTF information, necessary for <tt>xdp-loader</tt>'s multiprogram support). If your object is rejected by the eBPF verifier, you've most likely left out packet length checks. Proper checks before accessing data are mandated by the verifier. | |||
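The shape of the length check the verifier wants can be shown outside the kernel. The following is a sketch under assumptions, not a loadable XDP object: <tt>sketch_classify()</tt>, <tt>struct sketch_ethhdr</tt>, and the <tt>SK_*</tt> action values are hypothetical stand-ins (the real program would take an <tt>xdp_md*</tt>, derive <tt>data</tt> and <tt>data_end</tt> from it, and return the real <tt>XDP_*</tt> codes), and the pass-IPv4-drop-the-rest policy is purely illustrative.

```c
#include <stddef.h>
#include <stdint.h>

/* Stand-ins for the XDP action codes, in the kernel's order. */
enum sketch_action { SK_ABORTED = 0, SK_DROP, SK_PASS, SK_TX, SK_REDIRECT };

struct sketch_ethhdr {
    uint8_t dst[6];
    uint8_t src[6];
    uint8_t proto[2];   /* EtherType, network byte order */
};

/* data and data_end delimit the frame, as ctx->data and ctx->data_end do. */
static int sketch_classify(const void *data, const void *data_end)
{
    const struct sketch_ethhdr *eth = data;

    /* Prove the whole header lies inside the frame before reading it;
     * this is the check whose absence gets objects rejected. */
    if ((const uint8_t *)(eth + 1) > (const uint8_t *)data_end)
        return SK_DROP;

    /* 0x0800 is IPv4; comparing bytewise sidesteps host endianness. */
    if (eth->proto[0] == 0x08 && eth->proto[1] == 0x00)
        return SK_PASS;
    return SK_DROP;
}
```

Every further header (IP, TCP, and so on) needs its own check of the same form before it is touched.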

The C standard library is not available to XDP programs (though you might be able to use <tt>__builtin_memcmp()</tt>, <tt>__builtin_memcpy()</tt>, and friends). Looping is restricted: the verifier must be able to prove that every loop terminates (bounded loops are accepted as of Linux 5.3), and the <tt>bpf_loop()</tt> helper covers the remaining cases. | |||

Configuration of an XDP program is non-trivial. Consider an XDP program which wants to forward to an <tt>AF_XDP</tt> socket only for certain destination IP addresses, passing the remainder into the network stack. There seem to be three ways to do this: | |||

* compile the XDP program on the fly, providing the desired addresses as constants in the source, or | |||

* suitably patch the XDP object at runtime, or | |||

* load eBPF maps with the desired addresses, and check them in every iteration of the XDP program. | |||

===Modifying packet data=== | I believe the latter to be JITted down to just a few instructions, despite looking like a system call (you're in the kernel, where there are no system calls). eBPF maps are atomic, though, so there must presumably be some manner of synchronization there. Recompiling on the fly pretty much requires dragging along bpfcc. Patching the binary seems rather fragile for peacetime. | ||

Modifications should generally take place through the functions exposed in <tt>bpf-helpers(7)</tt>. Updating data in a TCP payload, for instance, requires recomputing a TCP checksum, which can be done most effectively through these methods. <tt>bpf_redirect()</tt>, <tt>bpf_redirect_peer()</tt>, and <tt>bpf_redirect_map()</tt> are used to set the destination of an <tt>XDP_REDIRECT</tt> operation. <tt>bpf_clone_redirect()</tt> allows a <i>clone</i> of a packet to be redirected, while retaining the original. | |||

====Modifying packet data==== | |||

Modifications should generally take place through the functions exposed in <tt>bpf-helpers(7)</tt>. Updating data in a TCP payload, for instance, requires recomputing a TCP checksum, which can be done most effectively through these methods. <tt>bpf_redirect()</tt>, <tt>bpf_redirect_peer()</tt>, and <tt>bpf_redirect_map()</tt> are used to set the destination of an <tt>XDP_REDIRECT</tt> operation. <tt>bpf_clone_redirect()</tt> allows a <i>clone</i> of a packet to be redirected, while retaining the original. | |||

====eBPF helpers==== | |||

* <tt>long bpf_redirect_map(struct bpf_map *map, u32 key, u64 flags)</tt> | |||

Redirect the packet to the endpoint referenced by <tt>map</tt> at <tt>key</tt>. Behavior depends on the map type: | |||

** <tt>BPF_MAP_TYPE_XSKMAP</tt>: deliver to the referenced <tt>AF_XDP</tt> socket (XSK) | |||

** <tt>BPF_MAP_TYPE_CPUMAP</tt>: deliver to the referenced CPU for netstack injection (software RSS). A kernel thread is bound to each CPU in the map to handle directed packets. | |||

** <tt>BPF_MAP_TYPE_DEVMAP</tt>: an array of network devices | |||

** <tt>BPF_MAP_TYPE_DEVMAP_HASH</tt>: a hash table of network devices (key needn't be ifindex) | |||

<tt>XDP_REDIRECT</tt> is returned on success. The lower two bits of <tt>flags</tt> are returned on an error. Other bits of <tt>flags</tt> are handled in a map type-dependent manner. As of 6.1.2, the only flags defined are <tt>BPF_F_BROADCAST</tt> and <tt>BPF_F_EXCLUDE_INGRESS</tt>, intended for use with device maps. <tt>BPF_F_BROADCAST</tt> will send the packet to all interfaces in the map (<tt>key</tt> is ignored in this case); <tt>BPF_F_EXCLUDE_INGRESS</tt> will exclude the ingress interface from broadcast. | |||
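The error path is where the "two bits" observation pays off: whatever action sits in the low bits of <tt>flags</tt> becomes the verdict when the lookup fails. Below is a userspace model of just that masking step, as I understand it; <tt>sketch_redirect_fallback()</tt> and the <tt>SK_*</tt> names are hypothetical stand-ins, not kernel code.

```c
#include <stdint.h>

/* Stand-ins for the XDP action codes, in the kernel's order. */
enum sketch_action { SK_ABORTED = 0, SK_DROP, SK_PASS, SK_TX, SK_REDIRECT };

/* Model of the failure path only: keep the low two bits of flags,
 * which can encode any action up through SK_TX (value 3). */
static long sketch_redirect_fallback(uint64_t flags)
{
    const uint64_t action_mask = SK_ABORTED | SK_DROP | SK_PASS | SK_TX;

    return (long)(flags & action_mask);
}
```

In an XDP program this is what makes <tt>return bpf_redirect_map(&xsks_map, ctx->rx_queue_index, XDP_PASS);</tt> fall back to the normal stack when no socket is bound to the queue (<tt>xsks_map</tt> being whatever name you gave your XSKMAP).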

* <tt>long bpf_loop(u32 nr_loops, long(*callback_fn)(u32 iter, void* ctx), void *callback_ctx, u64 flags)</tt> | |||

Call <tt>callback_fn</tt> up to <tt>nr_loops</tt> times, passing it the iteration index (starting with 0 and increasing by 1) and <tt>callback_ctx</tt>. A nonzero return from the callback ends the loop early. | |||
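The calling convention is easier to see in a userspace model. This sketch mimics the helper's control flow as I understand it (the callback returns 0 to continue and nonzero to stop, and the helper returns the number of iterations performed); it ignores <tt>flags</tt> and the kernel's cap on <tt>nr_loops</tt>. All <tt>sketch_</tt> names are hypothetical.

```c
#include <stdint.h>

typedef long (*sketch_loop_cb)(uint32_t iter, void *ctx);

/* Userspace model of the loop helper: invoke cb(i, ctx) for
 * i = 0, 1, ... until nr_loops is reached or cb returns nonzero.
 * Returns how many times the callback ran. */
static long sketch_bpf_loop(uint32_t nr_loops, sketch_loop_cb cb, void *ctx)
{
    uint32_t i;

    for (i = 0; i < nr_loops; i++) {
        if (cb(i, ctx) != 0)
            return (long)i + 1;
    }
    return (long)i;
}

/* Example callback state: accumulate indices until a limit is hit. */
struct sketch_sum {
    uint32_t total;
    uint32_t limit;
};

static long sketch_add_index(uint32_t iter, void *ctx)
{
    struct sketch_sum *s = ctx;

    s->total += iter;
    return s->total >= s->limit ? 1 : 0;   /* 1 stops the loop */
}
```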

===Caveats=== | ===Caveats=== | ||

There is significant (on the order of hundreds of bytes) overhead on each packet. Furthermore, until recently (5.18), or without driver support, XDP cannot use packets larger than an architectural page. On amd64 with 4KB pages, without the new "multibuf" support, only about 3520 bytes are available within a frame. This is smaller than many jumbo MTUs, and furthermore precludes techniques like LRO. With multibuf, packets of arbitrary size can pass through XDP. | There is significant (on the order of hundreds of bytes) overhead on each packet. Furthermore, until recently (5.18), or without driver support, XDP cannot use packets larger than an architectural page. On amd64 with 4KB pages, without the new "multibuf" support, only about 3520 bytes are available within a frame. This is smaller than many jumbo MTUs, and furthermore precludes techniques like LRO. With multibuf, packets of arbitrary size can pass through XDP. | ||

A very serious problem is that of IP fragmentation if one is doing anything with Layer 4. If you're for instance only forwarding some traffic to an <tt>AF_XDP</tt> socket, based on TCP or UDP port, you'll have to reassemble fragments in your XDP program in order to deliver any secondary fragments (as they will be lacking the necessary Layer 4 headers). | |||
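Whatever you decide to do about fragments, the first step is recognizing them. A sketch, assuming the IPv4 flags/fragment-offset field has already been bounds-checked and converted to host byte order; the two helper functions and the <tt>SKETCH_</tt> constants are hypothetical names, though the bit layout is that of the IPv4 header.

```c
#include <stdbool.h>
#include <stdint.h>

#define SKETCH_IP_MF     0x2000u  /* "more fragments" flag */
#define SKETCH_IP_OFFSET 0x1FFFu  /* fragment offset, in 8-byte units */

/* True for any piece of a fragmented datagram, first or later. */
static bool sketch_is_fragment(uint16_t frag_off)
{
    return (frag_off & (SKETCH_IP_MF | SKETCH_IP_OFFSET)) != 0;
}

/* Only a packet at offset zero carries the Layer 4 header. */
static bool sketch_has_l4_header(uint16_t frag_off)
{
    return (frag_off & SKETCH_IP_OFFSET) == 0;
}
```

A program matching on ports can only trust them when <tt>sketch_has_l4_header()</tt> holds; everything else needs reassembly state or some other policy.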

====Debugging XDP==== | |||

* <tt>bpftrace -e 'tracepoint:xdp:* { @cnt[probe] = count(); }'</tt> | |||

* <tt>bpftool map list</tt> (with optional <tt>--json</tt> or <tt>--pretty</tt>) | |||

* <tt>xdp-filter load NIC</tt> (for some NIC with an existing XDP program) | |||

==AF_XDP== | ==AF_XDP== | ||

When <tt>CONFIG_XDP_SOCKETS</tt> is set, <tt>bpf_redirect_map()</tt> can be used in conjunction with the eBPF map <tt>BPF_MAP_TYPE_XSKMAP</tt> to send traffic to userspace AF_XDP sockets (XSKs). With driver support, this is a zero-copy operation. Each XSK, when created, should be inserted into XSKMAP. | [[File:Netfilter-packet-flow.svg.png|right|thumb|The Diagram]] | ||

When <tt>CONFIG_XDP_SOCKETS</tt> is set, <tt>bpf_redirect_map()</tt> can be used in conjunction with the eBPF map type <tt>BPF_MAP_TYPE_XSKMAP</tt> to send traffic to userspace AF_XDP sockets (XSKs). With driver support, this is a zero-copy operation, and bypasses skbuff allocation. Each XSK, when created, should be inserted into XSKMAP. | |||

XSKs come in two varieties: <tt>XDP_SKB</tt> and <tt>XDP_DRV</tt>. <tt>XDP_DRV</tt> corresponds to Native Mode/Offload Mode XDP, and is faster than the generic <tt>XDP_SKB</tt>, but requires driver support. | XSKs come in two varieties: <tt>XDP_SKB</tt> and <tt>XDP_DRV</tt>. <tt>XDP_DRV</tt> corresponds to Native Mode/Offload Mode XDP, and is faster than the generic <tt>XDP_SKB</tt>, but requires driver support. True zero-copy requires the use of <tt>XDP_DRV</tt> XSKs together with <tt>XDP_MODE_HW</tt> or <tt>XDP_MODE_NATIVE</tt> XDP programs. | ||

Each XSK has either one or both of two RX and TX descriptor ringbuffers, along with a data ringbuffer (the UMEM) into which all descriptor rings point. The descriptor rings are registered with the kernel using <tt>XDP_RX_RING</tt>/<tt>XDP_UMEM_FILL_RING</tt> and <tt>XDP_TX_RING</tt>/<tt>XDP_UMEM_COMPLETION_RING</tt> with <tt>setsockopt(2)</tt>. UMEM is a virtually contiguous area divided into equally-sized chunks (chunk size is up to the user; it should usually be the MTU plus XDP overhead), and registered with the <tt>XDP_UMEM_REG</tt> sockopt. Packets will be written to distinct chunks. <tt>bind(2)</tt> then associates the XSK with a particular device and queueid, at which point traffic begins to flow. It is expected that chunks will be a multiple of the page size, but this does not appear to be | How is <tt>AF_XDP</tt> different from [[Packet sockets|<tt>AF_PACKET</tt>]] (especially when the latter is backed with kernel-shared ringbuffers via <tt>PACKET_RX_RING</tt>)? Without mapped ringbuffers, packet sockets will always take an skbuff allocation (see diagram to the right) into which they will clone the packet, whereas XDP can avoid this outside of Generic Mode. Even with mapped ringbuffers, there is a copy: the packet socket fundamentally <i>clones</i> frames. XDP furthermore allows more direct control over parallelism, and can be tied into the NIC's hardware Receive-Side Scaling. XDP allows RX and TX descriptors to point into the same memory pool, potentially avoiding copies. Note that the [https://lwn.net/Articles/737947/ AF_PACKETv4] proposal also used distinct descriptor rings. | ||

Each XSK has either one or both of two RX and TX descriptor ringbuffers, along with a data ringbuffer (the UMEM) into which all descriptor rings point. The descriptor rings are registered with the kernel using <tt>XDP_RX_RING</tt>/<tt>XDP_UMEM_FILL_RING</tt> and <tt>XDP_TX_RING</tt>/<tt>XDP_UMEM_COMPLETION_RING</tt> with <tt>setsockopt(2)</tt>. UMEM is a virtually contiguous area divided into equally-sized chunks (chunk size is up to the user; it should usually be the MTU plus XDP overhead), and registered with the <tt>XDP_UMEM_REG</tt> sockopt. Packets will be written to distinct chunks. <tt>bind(2)</tt> then associates the XSK with a particular device and queueid, at which point traffic begins to flow. It is expected that chunks will be a multiple of the page size, but this does not appear to be necessary—in particular, success has been had doubling up two 2KB chunks on a 1500B MTU, despite amd64's minimum 4KB page size. | |||

The Fill and Completion rings are associated with the UMEM, which might be shared among processes (and multiple XSKs). Each XSK will need its own RX and TX rings, but the Fill and Completion rings are shared along with the UMEM. | The Fill and Completion rings are associated with the UMEM, which might be shared among processes (and multiple XSKs). Each XSK will need its own RX and TX rings, but the Fill and Completion rings are shared along with the UMEM. | ||

| Line 44: | Line 84: | ||

The application indicates using the Fill ring which chunks packets ought be placed in. The <tt>poll()</tt>able RX ring signals packets written by the kernel. The application writes packets for transmit into the TX ring, and the kernel moves descriptors from TX to the Completion ring once transmitted. | The application indicates using the Fill ring which chunks packets ought be placed in. The <tt>poll()</tt>able RX ring signals packets written by the kernel. The application writes packets for transmit into the TX ring, and the kernel moves descriptors from TX to the Completion ring once transmitted. | ||

==Driver | ===The UMEM=== | ||

<syntaxhighlight lang="c"> | |||

struct xdp_umem_reg { | |||

__u64 addr; /* Start of packet data area */ | |||

__u64 len; /* Length of packet data area */ | |||

__u32 chunk_size; | |||

__u32 headroom; | |||

__u32 flags; | |||

}; | |||

</syntaxhighlight> | |||

<tt>XDP_UMEM_REG</tt> wants a <tt>struct xdp_umem_reg</tt>, containing <tt>addr</tt> (a pointer to the map as <tt>uintptr_t</tt>), <tt>len</tt> (length of the map in bytes), <tt>chunk_size</tt>, and <tt>headroom</tt>. Regarding <tt>chunk_size</tt>, if you're getting <tt>EINVAL</tt>, consult the kernel's <tt>xdp_umem_reg()</tt> (from 6.1.7): | |||

<syntaxhighlight lang="c"> | |||

if (chunk_size < XDP_UMEM_MIN_CHUNK_SIZE || chunk_size > PAGE_SIZE) { | |||

/* Strictly speaking we could support this, if: | |||

* - huge pages, or | |||

* - using an IOMMU, or | |||

* - making sure the memory area is consecutive | |||

* but for now, we simply say "computer says no". | |||

*/ | |||

return -EINVAL; | |||

} | |||

if (mr->flags & ~XDP_UMEM_UNALIGNED_CHUNK_FLAG) | |||

return -EINVAL; | |||

if (!unaligned_chunks && !is_power_of_2(chunk_size)) | |||

return -EINVAL; | |||

if (!PAGE_ALIGNED(addr)) { | |||

/* Memory area has to be page size aligned. For | |||

* simplicity, this might change. | |||

*/ | |||

return -EINVAL; | |||

} | |||

if ((addr + size) < addr) | |||

return -EINVAL; | |||

npgs = div_u64_rem(size, PAGE_SIZE, &npgs_rem); | |||

if (npgs_rem) | |||

npgs++; | |||

if (npgs > U32_MAX) | |||

return -EINVAL; | |||

chunks = (unsigned int)div_u64_rem(size, chunk_size, &chunks_rem); | |||

if (chunks == 0) | |||

return -EINVAL; | |||

if (!unaligned_chunks && chunks_rem) | |||

return -EINVAL; | |||

if (headroom >= chunk_size - XDP_PACKET_HEADROOM) | |||

return -EINVAL; | |||

</syntaxhighlight> | |||
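It can save a debugging round trip to run the same checks in userspace before calling <tt>setsockopt(2)</tt>. The following is a sketch of the aligned-chunk case only (no <tt>XDP_UMEM_UNALIGNED_CHUNK_FLAG</tt>), mirroring the kernel excerpt above; <tt>sketch_umem_ok()</tt> is a hypothetical helper, and the two constants are my local copies of <tt>XDP_UMEM_MIN_CHUNK_SIZE</tt> (2048) and <tt>XDP_PACKET_HEADROOM</tt> (256) as of the 6.1 series.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define SKETCH_MIN_CHUNK 2048u  /* local copy of XDP_UMEM_MIN_CHUNK_SIZE */
#define SKETCH_HEADROOM  256u   /* local copy of XDP_PACKET_HEADROOM */

static bool sketch_is_pow2(uint64_t v)
{
    return v != 0 && (v & (v - 1)) == 0;
}

/* Would these parameters survive the kernel's checks (aligned mode)? */
static bool sketch_umem_ok(uint64_t addr, uint64_t len, uint32_t chunk_size,
                           uint32_t headroom, uint64_t page_size)
{
    assert(sketch_is_pow2(page_size));

    if (chunk_size < SKETCH_MIN_CHUNK || chunk_size > page_size)
        return false;                  /* chunk must fit within a page */
    if (!sketch_is_pow2(chunk_size))
        return false;
    if (addr % page_size != 0)
        return false;                  /* area must be page-aligned */
    if (addr + len < addr)
        return false;                  /* address range wraps around */
    if (len / page_size + (len % page_size != 0) > UINT32_MAX)
        return false;                  /* too many pages */
    if (len / chunk_size == 0 || len % chunk_size != 0)
        return false;                  /* whole chunks only */
    if (headroom >= chunk_size - SKETCH_HEADROOM)
        return false;
    return true;
}
```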

===Rings and ring descriptors=== | |||

The RX and TX descriptor rings are arrays of <tt>struct xdp_desc</tt>, a 16-byte structure: | |||

<syntaxhighlight lang="c"> | |||

struct xdp_desc { | |||

__u64 addr; | |||

__u32 len; | |||

__u32 options; | |||

}; | |||

</syntaxhighlight> | |||

<tt>addr</tt> here is a byte index into the UMEM map. The fill and completion rings are arrays of <tt>__u64</tt>; they need only an index into the UMEM. Rings themselves are managed with <tt>struct xdp_ring_offset</tt>: | |||

<syntaxhighlight lang="c"> | |||

struct xdp_ring_offset { | |||

__u64 producer; | |||

__u64 consumer; | |||

__u64 desc; | |||

__u64 flags; | |||

}; | |||

</syntaxhighlight> | |||

Only the UMEM is freely allocated by the user process. The rings are mapped into user space from a shared kernel object, via calling <tt>mmap(2)</tt> on the XSK using the results of the <tt>XDP_MAP_OFFSETS</tt> sockopt. | |||

The producer and consumer variables tend to be placed 64 bytes apart, presumably so that their cachelines do not interact. See the kernel's [https://www.kernel.org/doc/html/latest/core-api/circular-buffers.html circular buffer documentation]. XDP works in producer/consumer mode, not "overwrite" mode, and thus loses newer events when the ring is full. The producer writes to the index specified by <tt>producer</tt>, then increments it. The consumer reads from the index specified by <tt>consumer</tt>, then increments it. The consumer may not read when <tt>producer == consumer</tt>, as the buffer is empty. The producer may not write when <tt>succ(producer) == consumer</tt>, as the buffer is full. Both wrap to 0 when incremented beyond the end of the ring. Userspace is a producer for the FILL and TX rings, and a consumer for the COMPLETION and RX rings. | |||

The population of the buffer is <tt>CIRC_CNT(prod, cons, size)</tt> aka <tt>((prod) - (cons)) & ((size)-1)</tt>. The space in the buffer is <tt>CIRC_SPACE(prod, cons, size)</tt> aka <tt>CIRC_CNT(cons, prod + 1, size)</tt>. | |||
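A few concrete values make the macros less abstract. The definitions below are the ones from the kernel's circular buffer header, copied so the sketch stands alone; the two wrapper functions are hypothetical conveniences. Note that one slot always stays empty, so a ring of <tt>size</tt> entries holds at most <tt>size - 1</tt>.

```c
#include <assert.h>

/* As in the kernel's circ_buf.h; size must be a power of two. */
#define CIRC_CNT(head, tail, size)   (((head) - (tail)) & ((size) - 1))
#define CIRC_SPACE(head, tail, size) CIRC_CNT((tail), ((head) + 1), (size))

/* Entries currently waiting for the consumer. */
static unsigned sketch_ring_count(unsigned prod, unsigned cons, unsigned size)
{
    assert(size != 0 && (size & (size - 1)) == 0);
    return CIRC_CNT(prod, cons, size);
}

/* Entries the producer may still write. */
static unsigned sketch_ring_space(unsigned prod, unsigned cons, unsigned size)
{
    assert(size != 0 && (size & (size - 1)) == 0);
    return CIRC_SPACE(prod, cons, size);
}
```

With an 8-entry ring, <tt>prod = 5</tt> and <tt>cons = 2</tt> gives a population of 3 and space for 4; after the producer wraps (<tt>prod = 1</tt>, <tt>cons = 6</tt>) the answers are the same.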

===Sizing the maps=== | |||

What size ought these maps be? Larger maps provide the ability to absorb bigger bursts, but they also give rise to higher maximum latencies, whereas drops can often provide earlier feedback to the sender (depending on the protocol). Fundamentally, if packets arrive more quickly than you clear them, no buffer is sufficient to deal with arbitrarily long time periods. Meanwhile, if there is only one writeable area, you must handle packets in less time than the transmission time of a single packet to avoid drops (67.2ns for minimum frames at 10Gbps, counting preamble and inter-frame gap). | |||
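The per-packet time budget follows from the link rate. A sketch, assuming the budget counts the Ethernet frame plus 20 bytes of per-frame wire overhead (preamble, start-of-frame delimiter, and inter-frame gap); the function name is hypothetical. For 64-byte frames at 10Gbps this comes out to roughly 67ns, or about 14.88 million packets per second.

```c
#include <assert.h>
#include <stdint.h>

/* Time on the wire for one frame, in nanoseconds.
 * frame_bytes is the Ethernet frame including FCS (64 at minimum);
 * link_bps is the link rate in bits per second. */
static double sketch_frame_time_ns(uint32_t frame_bytes, double link_bps)
{
    const uint32_t overhead = 7 + 1 + 12;  /* preamble + SFD + gap */

    assert(link_bps > 0.0);
    return (double)(frame_bytes + overhead) * 8.0 / link_bps * 1e9;
}
```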

XDP requires that all the map entry counts be powers of two. The UMEM may not be more than 2**32 times the smallest page size; as this would be 16TiB on a 4KiB page, you probably needn't worry about that requirement. | |||

The constants <tt>XSK_RING_CONS__DEFAULT_NUM_DESCS</tt> and <tt>XSK_RING_PROD__DEFAULT_NUM_DESCS</tt> (henceforth <tt>CONSDEF</tt> and <tt>PRODDEF</tt> respectively) are provided by libxdp; let's assume our consumer and producer rings respectively to have these as minimum sizes (if you'd like to hold more than CONSDEF, just shift it however many positions to the left). If we have ''n'' threads, each with their own RX ring of at least <tt>CONSDEF</tt> entries, that implies backing by at least ''n * CONSDEF'' UMEM entries (assuming equal distribution among threads). AFAIK there is no way to fill a packet and dispatch it to an RX ring later, so if our RX rings are full, we can't use any more than at most one extra fill descriptor. So our fill ring ought hold at least ''n * CONSDEF'' descriptors, but the UMEM needn't support more than ''n * CONSDEF + 1'', and can reasonably get away with ''n * CONSDEF''. | |||

Only one of these maps (the UMEM) is allocated by userspace. The descriptor rings are all allocated by the kernel, based on the sizes we provide. We probably want to use [[Pages|huge pages]] to back the UMEM (they're not currently available for the descriptor rings), and if so, it must be a multiple of some huge page size. Assuming amd64, 1GB is probably a bit much (256Ki 4KiB chunks), so that means a multiple of 2MiB (''is 1GB really too much? With an admittedly sky-high 800ms round trip time, 1GB is exactly one bandwidth-delay-product for 10Gbps...''). To minimize total allocation, then minimize waste, while satisfying our other constraints: | |||

* ''UMEM_min'' is ''n * CONSDEF * chunksize'' bytes | |||

* ''UMEM_max'' is the minimum multiple of the desired huge page size such that ''UMEM_max >= UMEM_min'' | |||

* Set ''descs = CONSDEF'' | |||

* While ''n * (descs << 1) * chunksize <= UMEM_max'', ''descs <<= 1'' | |||

''UMEM_max'' is the size of your UMEM in bytes, with support for ''n * descs'' chunks of ''chunksize'' bytes each. ''UMEM_max - n * descs * chunksize'' is the number of bytes wasted in this allocation. Each of the ''n'' RX rings supports ''descs'' descriptors (the actual size of the descriptor rings is somewhat larger than this would imply, and is set by the kernel). The fill ring supports ''n * descs'' descriptors. | |||
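The procedure above is short enough to transcribe directly. This is a sketch: <tt>sketch_size_umem()</tt> and <tt>struct sketch_sizing</tt> are hypothetical names, and <tt>consdef</tt> is passed in rather than taken from libxdp so the example builds without it. It does not guard against overflow for absurd inputs.

```c
#include <assert.h>
#include <stdint.h>

struct sketch_sizing {
    uint64_t umem_bytes;  /* UMEM_max: total UMEM size */
    uint64_t descs;       /* descriptors per RX ring */
    uint64_t wasted;      /* UMEM bytes not backing any chunk */
};

/* n threads, each with an RX ring of at least consdef descriptors,
 * chunks of chunksize bytes, UMEM backed by pages of hugepage bytes. */
static struct sketch_sizing sketch_size_umem(uint64_t n, uint64_t consdef,
                                             uint64_t chunksize,
                                             uint64_t hugepage)
{
    struct sketch_sizing s;
    uint64_t umem_min;

    assert(n && consdef && chunksize && hugepage);

    umem_min = n * consdef * chunksize;
    /* Smallest multiple of the huge page size that covers umem_min. */
    s.umem_bytes = (umem_min + hugepage - 1) / hugepage * hugepage;

    /* Double the per-ring descriptor count while it still fits. */
    s.descs = consdef;
    while (n * (s.descs << 1) * chunksize <= s.umem_bytes)
        s.descs <<= 1;

    s.wasted = s.umem_bytes - n * s.descs * chunksize;
    return s;
}
```

Three threads with 2048 descriptors and 2KiB chunks on 2MiB pages need exactly 12MiB with nothing wasted; forcing the same configuration onto a single 1GiB page grows each ring to 131072 descriptors and still leaves 256MiB unused, since ring sizes must stay powers of two.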

Fill ring descriptors are currently eight bytes, while RX ring descriptors are sixteen. On a 64-byte cacheline, it thus might make sense to fetch packets four at a time per thread, and perhaps prefetch subsequent packet data. Of course, this wouldn't be useful if your packet data is already resident in cache thanks to e.g. [[DDIO]]. | |||

==Driver details== | |||

"Bounce on bind" is bad: these devices go down for a few seconds when attaching an XDP program. This is all based on personal experimentation and reading mailing lists. | |||

{|class="wikitable sortable" | {|class="wikitable sortable" | ||

! Driver !! Zero-copy !! Multibuf || | ! Driver !! Zero-copy !! Multibuf || Native/Offloaded || XDP_REDIRECT || Bounce on bind | ||

|- | |- | ||

| | | atlantic (Aquantia) || N || N || N || N || N | ||

|- | |- | ||

| | | i40e (Intel) || Y || Y || ? || Y || ? | ||

|- | |- | ||

| | | ice (Intel) || ? || ? || ? || ? || ? | ||

|- | |- | ||

| | | ixgbe (Intel) || Y || Y || Native || Y || Y | ||

|- | |- | ||

| | | cxgb3 (Chelsio) || N || N || N || Y || N | ||

|- | |- | ||

| nfp || ? || ? || Y | | mvneta (Marvell) || ? || Y || ? || Y || ? | ||

|- | |||

| mvpp2 (Marvell) || ? || ? || ? || Y || ? | |||

|- | |||

| mlx4 (Mellanox) || Y || ? || Native || ? || ? | |||

|- | |||

| mlx5 (Mellanox) || ? || ? || Native || Y || ? | |||

|- | |||

| netsec (Socionext) || ? || ? || ? || Y || ? | |||

|- | |||

| nfp (Netronome) || ? || ? || Offloaded || ? || ? | |||

|- | |||

| tuntap || ? || ? || ? || Y || ? | |||

|- | |||

| veth || ? || ? || ? || Y || ? | |||

|- | |||

| virtio_net || ? || ? || ? || Y || ? | |||

|- | |- | ||

|} | |} | ||

Aquantia is now Marvell. Mellanox is now NVIDIA. | |||

==See Also== | ==See Also== | ||

| Line 67: | Line 223: | ||

* [[Fast UNIX Servers]] | * [[Fast UNIX Servers]] | ||

* [[Io_uring|iouring]] | * [[Io_uring|iouring]] | ||

* [[100GbE]] | |||

* "[[Extended_disquisitions_pertaining_to_eXpress_data_paths_(XDP)|eXtended Disquisitions Pertaining to eXpress Data Paths]]", a [[Dankblog|DANKBLOG]] post from 2023-04-20 | |||

==External links== | ==External links== | ||

| Line 72: | Line 230: | ||

* [https://legacy.netdevconf.info/0x14/pub/slides/37/Adding%20AF_XDP%20zero-copy%20support%20to%20drivers.pdf Adding AF_XDP Zero-Copy Support to Drivers], Netdevconf 2020 | * [https://legacy.netdevconf.info/0x14/pub/slides/37/Adding%20AF_XDP%20zero-copy%20support%20to%20drivers.pdf Adding AF_XDP Zero-Copy Support to Drivers], Netdevconf 2020 | ||

* [https://lwn.net/Articles/750845/ Accelerating networking with AF_XDP], LWN 2018-04-09 | * [https://lwn.net/Articles/750845/ Accelerating networking with AF_XDP], LWN 2018-04-09 | ||

* [https://blog.packagecloud.io/monitoring-tuning-linux-networking-stack-receiving-data/ Monitoring and tuning the Linux Networking Stack: Receiving Data], PackageCloud 2016-06-21 | |||

[[CATEGORY: Networking]] | |||

Latest revision as of 02:43, 29 June 2023

In the beginning, there were applications slinging streams through the packetizing Honeywell DDP-516 IMPs, and it was good. Then multiple applications needed the network, and needed it in different ways. Then the networks needed walls of fire, and traffic which was shaped. Some called for the Labeling of Multiple Protocols, and others called for timestamps, and still others wanted to SNAT and DNAT and also to masquerade. And yea, IP was fwadm'd, and then chained, and then tabled, and soon arps and bridges too were tabled. And behold now tables of "nf" and "x". And Van Jacobson looked once more upon the land, and frowned, and shed a single tear which became a Channel. And then every ten years or so, we celebrate this by rediscovering Van Jacobson channels under a new name, these days complete with logos and corporate sponsorship.

Most recently, they were rediscovered under the name DPDK, but the masters of the Linux kernel eschewed this, and instead rediscovered them under the name XDP, the eXpress Data Path, and eXpressed it using eBPF programs, sometimes even unto the eXtreme. XDP was added to Linux 4.8 and has been heavily developed since then, and is often seen together with iouring, especially with the new AF_XDP family of sockets.

XDP is used to bypass large chunks of the Linux networking stack (including all allocations, particularly alloc_skb()), especially when dropping packets or shuffling them between NICs.

Differences from DPDK

DPDK is a mechanism for full kernel bypass on Linux and FreeBSD. DPDK programs run in userspace atop its Environment Abstraction Layer, and reserve entire NICs. XDP programs live in the kernel, and bind to interfaces created by standard kernel drivers. XDP runs only on the receive path, and is driven by the standard NAPI softirq, whereas DPDK is always poll-driven; polling keeps a CPU constantly engaged, but can yield lower latency than the interrupt-initiated NAPI. XDP selectively forwards traffic to AF_XDP userspace sockets, leaving the remainder unmolested; this untouched traffic will be delivered via the standard mechanisms, and traverse e.g. nftables. DPDK uses multiple producer/multiple consumer rings, whereas XDP is built around single producer/single consumer rings.

DPDK has been around longer, is more mature, and (as of early 2023) has much more complete documentation.

Using XDP

An XDP program is an eBPF program invoked for each received packet which runs either:

- early in the kernel receive path, with no driver support (Generic Mode, XDP_MODE_SKB)

- from the driver context following DMA sync, without any kernel networking stack touches (requires driver support) (Native mode, XDP_MODE_NATIVE)

- on the NIC itself, without touching the CPU (requires hardware+driver support) (Offloaded mode, XDP_MODE_HW)

Offloaded mode can theoretically beat native mode, which will usually beat generic mode. XDP programs are specific to a NIC, and most easily attached using xdp-loader. Lower-level functionality is available from libxdp, and below that is pure eBPF machinery. Multiple XDP programs can be stacked on a single interface as of Linux 5.6 (multiprog requires BTF type information, created by using LLVM 10+ and enabling debug information). XDP is only applied to the receive path.

Note that XDP_MODE_NATIVE etc. are libxdp terminology; the kernel works in terms of XDP_FLAGS_SKB_MODE, XDP_FLAGS_DRV_MODE, and XDP_FLAGS_HW_MODE.

An XDP program can modify the packet data and perform limited resizes of the packet. It receives an xdp_md* and returns an action code as int:

- XDP_PASS: the packet continues through the network stack

- XDP_DROP: the packet is dropped

- XDP_ABORTED: indicates an error (the packet is dropped) and triggers the xdp:xdp_exception tracepoint

- XDP_TX: emit the packet back out the interface on which it was received

- XDP_REDIRECT: send the packet to another NIC or an AF_XDP socket

Think of XDP_ABORTED as returning -1 from the eBPF program; it's meant to indicate an internal error. Theoretically, the packet could continue through the stack (as it would have if no XDP program had been running, or XDP_PASS was returned), but XDP uses "fail-close" semantics. Edits to the packet are carried through all XDP paths. Note that everything up through and including XDP_TX can be represented in two bits; this will be important later when passing flags to bpf helpers.

xdp-loader status will list NICs and show details regarding any attached XDP programs. ethtool -S shows XDP statistics. ip link will also show a summary of devices' XDP chains. xdp_dispatcher is a metaprogram loaded by xdp-loader to support chaining multiple XDP programs; it will be present whenever programs were loaded using xdp-loader.

Note that XDP runs prior to the packet socket copies which drive tcpdump, and thus packets dropped or redirected in XDP will not show up there. It's better to use xdpdump when interacting with XDP programs; this also shows the XDP decision made for each packet. Similarly, packets hit XDP before being evaluated by nftables, and thus represent a means of firewall bypass. Note also that locally-generated self-directed packets do traverse XDP, but always on the loopback device rather than on the NIC owning the destination address.

Writing XDP programs

For XDP programs written in C, use clang with -target bpf -g (as noted above, use -g to get BTF information, necessary for xdp-loader's multiprogram support). If your object is rejected by the eBPF verifier, you've most likely left out packet length checks. Proper checks before accessing data are mandated by the verifier.
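The length checks can be modeled outside the kernel. What follows is a minimal userspace sketch, not an XDP program: parse_ipv4_dst() and its constants are names made up for illustration, and it assumes an untagged Ethernet frame carrying IPv4. In a real XDP program the same test is usually spelled as a pointer comparison against data_end (for instance data + sizeof(*eth) > data_end) before each access.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Illustrative constants (not kernel names): untagged Ethernet + IPv4. */
#define MODEL_ETH_HDR_LEN   14u
#define MODEL_ETH_TYPE_IPV4 0x0800u
#define MODEL_IPV4_MIN_HDR  20u

/*
 * Userspace model of verifier-style access discipline: no byte is read
 * until the code has proven that it lies before data_end.
 * Returns 0 and stores the destination address (in wire byte order) on
 * success, or -1 if the frame is too short or is not IPv4.
 */
int parse_ipv4_dst(const uint8_t *data, const uint8_t *data_end,
                   uint32_t *dst)
{
    size_t len = (size_t)(data_end - data);

    if (len < MODEL_ETH_HDR_LEN)            /* whole Ethernet header present? */
        return -1;
    uint16_t ethertype = (uint16_t)((data[12] << 8) | data[13]);
    if (ethertype != MODEL_ETH_TYPE_IPV4)
        return -1;

    if (len < MODEL_ETH_HDR_LEN + MODEL_IPV4_MIN_HDR)  /* fixed IPv4 header? */
        return -1;
    const uint8_t *ip = data + MODEL_ETH_HDR_LEN;
    if ((ip[0] >> 4) != 4)                  /* version nibble */
        return -1;

    memcpy(dst, ip + 16, sizeof(*dst));     /* daddr sits at offset 16 */
    return 0;
}
```

Dropping either length comparison is the kind of omission that gets an otherwise reasonable object rejected at load time.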

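The C standard library is not available to XDP programs (though you might be able to use __builtin_memcmp(), __builtin_memcpy(), and friends). Unbounded loops are rejected by the verifier; bounded loops have been accepted since Linux 5.3 so long as the verifier can prove they terminate, and the bpf_loop() helper (Linux 5.17) covers larger iteration counts.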

Configuration of an XDP program is non-trivial. Consider an XDP program which wants to forward to an AF_XDP socket only for certain destination IP addresses, passing the remainder into the network stack. There seem to be three ways to do this:

- compile the XDP program on the fly, providing the desired addresses as constants in the source, or

- suitably patch the XDP object at runtime, or

- load eBPF maps with the desired addresses, and check them in every iteration of the XDP program.

I believe the last of these to be JITted down to just a few instructions, despite looking like a system call (you're in the kernel, where there are no system calls). eBPF maps are atomic, though, so there must presumably be some manner of synchronization there. Recompiling on the fly pretty much requires dragging along bpfcc. Patching the binary seems rather fragile for peacetime.

Modifying packet data

Modifications should generally take place through the functions exposed in bpf-helpers(7). Updating data in a TCP payload, for instance, requires recomputing a TCP checksum, which can be done most effectively through these methods. bpf_redirect(), bpf_redirect_peer(), and bpf_redirect_map() are used to set the destination of an XDP_REDIRECT operation. bpf_clone_redirect() allows a clone of a packet to be redirected, while retaining the original.

eBPF helpers

- long bpf_redirect_map(struct bpf_map *map, u32 key, u64 flags)

Redirect the packet to the endpoint referenced by map at key. Behavior depends on the map type:

- BPF_MAP_TYPE_XSKMAP: deliver to the referenced AF_XDP socket (XSK)

- BPF_MAP_TYPE_CPUMAP: deliver to the referenced CPU for netstack injection (software RSS). A kernel thread is bound to each CPU in the map to handle directed packets.

- BPF_MAP_TYPE_DEVMAP: an array of network devices

- BPF_MAP_TYPE_DEVMAP_HASH: a hash table of network devices (key needn't be ifindex)

XDP_REDIRECT is returned on success. The lower two bits of flags are returned on an error. Other bits of flags are handled in a map type-dependent manner. As of 6.1.2, the only flags defined are BPF_F_BROADCAST and BPF_F_EXCLUDE_INGRESS, intended for use with device maps. BPF_F_BROADCAST will send the packet to all interfaces in the map (key is ignored in this case); BPF_F_EXCLUDE_INGRESS will exclude the ingress interface from broadcast.
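The fallback behavior is easy to model in userspace. The sketch below is not kernel code: the MODEL_ names and model_redirect_result() are hypothetical, though the numeric values mirror enum xdp_action from the kernel's uapi linux/bpf.h. It shows why the low two bits suffice: every action up through XDP_TX fits in them.

```c
#include <stdint.h>

/* Values mirror the kernel's enum xdp_action; the MODEL_ names are mine. */
enum model_xdp_action {
    MODEL_XDP_ABORTED  = 0,
    MODEL_XDP_DROP     = 1,
    MODEL_XDP_PASS     = 2,
    MODEL_XDP_TX       = 3,
    MODEL_XDP_REDIRECT = 4,
};

/*
 * Hypothetical model of what bpf_redirect_map() hands back:
 * XDP_REDIRECT when the key resolves to a target, otherwise the
 * action encoded in the low two bits of flags.
 */
long model_redirect_result(int key_found, uint64_t flags)
{
    if (key_found)
        return MODEL_XDP_REDIRECT;
    return (long)(flags & 0x3);
}
```

This is why one commonly sees XDP_PASS supplied as the flags argument when redirecting into an XSKMAP: if no socket is bound for that key, the packet continues into the regular stack rather than being aborted.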

- long bpf_loop(u32 nr_loops, long(*callback_fn)(u32 iter, void* ctx), void *callback_ctx, u64 flags)

Call callback_fn up to nr_loops times, passing it the iteration index (starting with 0 and increasing by 1) and callback_ctx.
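The calling convention can be sketched with a userspace stand-in. model_bpf_loop() below is my own model, not the helper itself; it follows the semantics described in bpf-helpers(7) as I read them: the callback returns 0 to continue or 1 to stop, flags must be 0, nr_loops is capped at 1 << 23, and the return value is the number of iterations performed.

```c
#include <errno.h>
#include <stdint.h>

#define MODEL_MAX_LOOPS (1u << 23)  /* cap documented in bpf-helpers(7) */

typedef long (*model_loop_cb)(uint32_t index, void *ctx);

/* Userspace stand-in for bpf_loop(); the model_ names are illustrative. */
long model_bpf_loop(uint32_t nr_loops, model_loop_cb callback_fn,
                    void *callback_ctx, uint64_t flags)
{
    uint32_t i;

    if (flags != 0)
        return -EINVAL;
    if (nr_loops > MODEL_MAX_LOOPS)
        return -E2BIG;
    for (i = 0; i < nr_loops; i++) {
        if (callback_fn(i, callback_ctx) != 0)
            return (long)i + 1;     /* the stopping iteration still counts */
    }
    return (long)i;
}

/* Example callback: accumulate indices, stop once the sum exceeds 10. */
long sum_until_over_10(uint32_t index, void *ctx)
{
    long *sum = ctx;

    *sum += index;
    return *sum > 10 ? 1 : 0;
}
```

In a real eBPF program the callback must be a static function and the context a pointer to the stack, restrictions this model does not enforce.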

Caveats

There is significant (on the order of hundreds of bytes) overhead on each packet. Furthermore, until recently (5.18), or without driver support, XDP cannot use packets larger than an architectural page. On amd64 with 4KB pages, without the new "multibuf" support, only about 3520 bytes are available within a frame. This is smaller than many jumbo MTUs, and furthermore precludes techniques like LRO. With multibuf, packets of arbitrary size can pass through XDP.

A very serious problem is that of IP fragmentation if one is doing anything with Layer 4. If you're for instance only forwarding some traffic to an AF_XDP socket, based on TCP or UDP port, you'll have to reassemble fragments in your XDP program in order to deliver any secondary fragments (as they will be lacking the necessary Layer 4 headers).
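Spotting the troublesome packets is at least cheap. A small sketch, assuming the IPv4 flags/fragment-offset field has already been bounds-checked and converted to host byte order; the function and macro names are illustrative rather than taken from any header:

```c
#include <stdbool.h>
#include <stdint.h>

#define MODEL_IP_MF      0x2000u  /* "more fragments" flag */
#define MODEL_IP_OFFMASK 0x1fffu  /* fragment offset, in 8-byte units */

/* True for any piece of a fragmented datagram, first fragment included. */
bool ipv4_is_fragment(uint16_t frag_off)
{
    return (frag_off & (MODEL_IP_MF | MODEL_IP_OFFMASK)) != 0;
}

/* Only a packet at offset zero carries the TCP/UDP header. */
bool ipv4_has_l4_header(uint16_t frag_off)
{
    return (frag_off & MODEL_IP_OFFMASK) == 0;
}
```

A program filtering on port can consult ipv4_has_l4_header() before looking at Layer 4 at all; what to do with the fragments that fail it (pass, drop, or reassemble) is the hard part and remains a policy decision.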

Debugging XDP

- bpftrace -e 'tracepoint:xdp:* { @cnt[probe] = count(); }'

- bpftool map list (with optional --json or --pretty)

- xdp-filter load NIC (for some NIC with an existing XDP program)

AF_XDP

When CONFIG_XDP_SOCKETS is set, bpf_redirect_map() can be used in conjunction with the eBPF map type BPF_MAP_TYPE_XSKMAP to send traffic to userspace AF_XDP sockets (XSKs). With driver support, this is a zero-copy operation, and bypasses skbuff allocation. Each XSK, when created, should be inserted into XSKMAP.

XSKs come in two varieties: XDP_SKB and XDP_DRV. XDP_DRV corresponds to Native Mode/Offload Mode XDP, and is faster than the generic XDP_SKB, but requires driver support. True zero-copy requires the use of XDP_DRV XSKs together with XDP_MODE_HW or XDP_MODE_NATIVE XDP programs.

How is AF_XDP different from AF_PACKET (especially when the latter is backed with kernel-shared ringbuffers via PACKET_RX_RING)? Without mapped ringbuffers, packet sockets will always take an skbuff allocation (see diagram to the right) into which they will clone the packet, whereas XDP can avoid this outside of Generic Mode. Even with mapped ringbuffers, there is a copy: the packet socket fundamentally clones frames. XDP furthermore allows more direct control over parallelism, and can be tied into the NIC's hardware Receive-Side Scaling. XDP allows RX and TX descriptors to point into the same memory pool, potentially avoiding copies. Note that the AF_PACKETv4 proposal also used distinct descriptor rings.

Each XSK has an RX descriptor ring, a TX descriptor ring, or both, along with a data area (the UMEM) into which all descriptor rings point. The descriptor rings are registered with the kernel using XDP_RX_RING/XDP_UMEM_FILL_RING and XDP_TX_RING/XDP_UMEM_COMPLETION_RING with setsockopt(2). UMEM is a virtually contiguous area divided into equally-sized chunks (chunk size is up to the user; it should usually be the MTU plus XDP overhead), and registered with the XDP_UMEM_REG sockopt. Packets will be written to distinct chunks. bind(2) then associates the XSK with a particular device and queueid, at which point traffic begins to flow. It is expected that chunks will be a multiple of the page size, but this does not appear to be necessary: in particular, success has been had doubling up two 2KB chunks per page on a 1500B MTU, despite amd64's minimum 4KB page size.

The Fill and Completion rings are associated with the UMEM, which might be shared among processes (and multiple XSKs). Each XSK will need its own RX and TX rings, but the Fill and Completion rings are shared along with the UMEM.

The application indicates using the Fill ring which chunks packets ought be placed in. The poll()able RX ring signals packets written by the kernel. The application writes packets for transmit into the TX ring, and the kernel moves descriptors from TX to the Completion ring once transmitted.

The UMEM

    struct xdp_umem_reg {
        __u64 addr; /* Start of packet data area */
        __u64 len; /* Length of packet data area */
        __u32 chunk_size;
        __u32 headroom;
        __u32 flags;
    };

XDP_UMEM_REG wants a struct xdp_umem_reg, containing addr (a pointer to the map as uintptr_t), len (length of the map in bytes), chunk_size and headroom. Regarding chunk_size, if you're getting EINVAL, consult the kernel's xdp_umem_reg() (from 6.1.7):

    if (chunk_size < XDP_UMEM_MIN_CHUNK_SIZE || chunk_size > PAGE_SIZE) {
        /* Strictly speaking we could support this, if:
         * - huge pages, or
         * - using an IOMMU, or
         * - making sure the memory area is consecutive
         * but for now, we simply say "computer says no".
         */
        return -EINVAL;
    }

    if (mr->flags & ~XDP_UMEM_UNALIGNED_CHUNK_FLAG)
        return -EINVAL;

    if (!unaligned_chunks && !is_power_of_2(chunk_size))
        return -EINVAL;

    if (!PAGE_ALIGNED(addr)) {
        /* Memory area has to be page size aligned. For
         * simplicity, this might change.
         */
        return -EINVAL;
    }

    if ((addr + size) < addr)
        return -EINVAL;

    npgs = div_u64_rem(size, PAGE_SIZE, &npgs_rem);
    if (npgs_rem)
        npgs++;
    if (npgs > U32_MAX)
        return -EINVAL;

    chunks = (unsigned int)div_u64_rem(size, chunk_size, &chunks_rem);
    if (chunks == 0)
        return -EINVAL;

    if (!unaligned_chunks && chunks_rem)
        return -EINVAL;

    if (headroom >= chunk_size - XDP_PACKET_HEADROOM)
        return -EINVAL;
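It can be handy to run the same checks in userspace before calling setsockopt(2), so that a bad parameter is caught with a better diagnostic than EINVAL. The following is a sketch under assumptions: umem_params_ok() is a hypothetical helper of mine, it covers only the aligned-chunk case (flags of 0), and the two constants are my reading of the 6.1 sources (2048 for XDP_UMEM_MIN_CHUNK_SIZE, 256 for XDP_PACKET_HEADROOM) and ought be verified against your kernel.

```c
#include <stdbool.h>
#include <stdint.h>

#define MODEL_UMEM_MIN_CHUNK 2048u  /* assumed XDP_UMEM_MIN_CHUNK_SIZE */
#define MODEL_XDP_HEADROOM   256u   /* assumed XDP_PACKET_HEADROOM */

bool model_is_pow2(uint64_t v)
{
    return v != 0 && (v & (v - 1)) == 0;
}

/* Mirrors the kernel checks quoted above for aligned chunks (flags == 0). */
bool umem_params_ok(uint64_t addr, uint64_t len, uint32_t chunk_size,
                    uint32_t headroom, uint32_t page_size)
{
    if (chunk_size < MODEL_UMEM_MIN_CHUNK || chunk_size > page_size)
        return false;
    if (!model_is_pow2(chunk_size))
        return false;
    if (addr % page_size != 0)              /* area must be page-aligned */
        return false;
    if (addr + len < addr)                  /* address wraparound */
        return false;
    uint64_t npgs = len / page_size + (len % page_size ? 1 : 0);
    if (npgs > UINT32_MAX)
        return false;
    if (len / chunk_size == 0 || len % chunk_size != 0)
        return false;
    if (headroom >= chunk_size - MODEL_XDP_HEADROOM)
        return false;
    return true;
}
```

With 4KiB pages, this leaves exactly two legal aligned chunk sizes, 2048 and 4096, which squares with the two-chunks-per-page experience mentioned earlier.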

Rings and ring descriptors

The RX and TX descriptor rings are arrays of struct xdp_desc, a 16-byte structure:

    struct xdp_desc {
        __u64 addr;
        __u32 len;
        __u32 options;
    };

addr here is a byte index into the UMEM map. The fill and completion rings are arrays of __u64; they need only an index into the UMEM. Rings themselves are managed with struct xdp_ring_offset:

    struct xdp_ring_offset {
        __u64 producer;
        __u64 consumer;
        __u64 desc;
        __u64 flags;
    };

Only the UMEM is freely allocated by the user process. The rings are mapped into user space from a shared kernel object, via calling mmap(2) on the XSK using the results of the XDP_MMAP_OFFSETS sockopt (retrieved with getsockopt(2)).

The producer and consumer variables tend to be placed 64 bytes apart, presumably so that their cachelines do not interact. See the kernel's circular buffer documentation. XDP works in producer/consumer mode, not "overwrite" mode, and thus loses newer events when the ring is full. The producer writes to the index specified by producer, then increments it. The consumer reads from the index specified by consumer, then increments it. The consumer may not read when producer == consumer, as the buffer is empty. The producer may not write when succ(producer) == consumer, as the buffer is full. Both wrap to 0 when incremented beyond the end of the ring. Userspace is a producer for the FILL and TX rings, and a consumer for the COMPLETION and RX rings.

The population of the buffer is CIRC_CNT(prod, cons, size) aka ((prod) - (cons)) & ((size)-1). The space in the buffer is CIRC_SPACE(prod, cons, size) aka CIRC_CNT(cons, prod + 1, size).
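A quick way to convince oneself of these formulas is to evaluate them. The two macros below are written to match the kernel's include/linux/circ_buf.h; the wrapper functions are illustrative names of my own, and size is assumed to be a power of two.

```c
/* As in the kernel's circ_buf.h; size must be a power of two. */
#define CIRC_CNT(head, tail, size)   (((head) - (tail)) & ((size) - 1))
#define CIRC_SPACE(head, tail, size) CIRC_CNT((tail), ((head) + 1), (size))

/* Entries ready for the consumer. */
unsigned ring_population(unsigned prod, unsigned cons, unsigned size)
{
    return CIRC_CNT(prod, cons, size);
}

/* Entries the producer may still write. */
unsigned ring_space(unsigned prod, unsigned cons, unsigned size)
{
    return CIRC_SPACE(prod, cons, size);
}
```

Note that population plus space always comes to size - 1: one slot is sacrificed so that producer == consumer unambiguously means empty.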

Sizing the maps

What size ought these maps be? Larger maps provide the ability to absorb bigger bursts, but they also give rise to higher maximum latencies, whereas drops can often provide earlier feedback to the sender (depending on the protocol). Fundamentally, if packets arrive more quickly than you clear them, no buffer is sufficient to deal with arbitrarily long time periods. Meanwhile, if there is only one writeable area, you must handle packets in less time than it takes a single packet to arrive to avoid drops (67.2ns for minimum-size frames at 10Gbps, counting preamble and inter-frame gap).
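The per-packet time budget is simple arithmetic, sketched here. It assumes standard Ethernet framing, where each frame is accompanied on the wire by 20 further bytes (7 of preamble, 1 start-of-frame delimiter, and a 12-byte inter-frame gap); frame_time_ns() is a name of my own invention. A 64-byte minimum frame thus occupies 84 byte times, or roughly 67ns at 10Gbps.

```c
/* Wire bytes beyond the frame: 7 preamble + 1 SFD + 12 inter-frame gap. */
#define MODEL_ETH_WIRE_OVERHEAD 20u

/* Time one frame occupies the link, in nanoseconds. */
double frame_time_ns(unsigned frame_bytes, double link_bps)
{
    double bits = 8.0 * (double)(frame_bytes + MODEL_ETH_WIRE_OVERHEAD);

    return bits / link_bps * 1e9;
}
```

Calling frame_time_ns(64, 10e9) gives the minimum-frame budget; frame_time_ns(1518, 10e9) gives the far more relaxed figure for full-size frames.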

XDP requires that all the map entry counts be powers of two. The UMEM may not be more than 2**32 times the smallest page size; as this would be 16TiB on a 4KiB page, you probably needn't worry about that requirement.

The constants XSK_RING_CONS__DEFAULT_NUM_DESCS and XSK_RING_PROD__DEFAULT_NUM_DESCS (henceforth CONSDEF and PRODDEF respectively) are provided by libxdp; let's assume our consumer and producer rings respectively to have these as minimum sizes (if you'd like to hold more than CONSDEF, just shift it however many positions to the left). If we have n threads, each with their own RX ring of at least CONSDEF entries, that implies backing by at least n * CONSDEF UMEM entries (assuming equal distribution among threads). AFAIK there is no way to fill a packet and dispatch it to an RX ring later, so if our RX rings are full, we can't use any more than at most one extra fill descriptor. So our fill ring ought hold at least n * CONSDEF descriptors, but the UMEM needn't support more than n * CONSDEF + 1, and can reasonably get away with n * CONSDEF.

Only one of these maps (the UMEM) is allocated by userspace. The descriptor rings are all allocated by the kernel, based off the sizes we provide. We probably want to use huge pages to back the UMEM (they're not currently available for the descriptor rings), and if so, it must be a multiple of some huge page size. Assuming amd64, 1GB is probably a bit much (256Ki 4KiB chunks), so that means a multiple of 2MiB (is 1GB really too much? With an admittedly sky-high 800ms round trip time, 1GB is exactly one bandwidth-delay-product for 10Gbps...). To minimize total allocation, then minimize waste, while satisfying our other constraints:

- UMEM_min is n * CONSDEF * chunksize bytes

- UMEM_max is the minimum multiple of the desired huge page size such that UMEM_max >= UMEM_min

- Set descs = CONSDEF

- While n * (descs << 1) * chunksize <= UMEM_max, descs <<= 1

UMEM_max is the size of your UMEM in bytes, with support for n * descs chunks of chunksize bytes each. UMEM_max - n * descs * chunksize is the number of bytes wasted in this allocation. Each of 'n' RX rings supports descs descriptors (the actual size of the descriptor rings is somewhat larger than this would imply, and set by the kernel). The fill ring supports n * descs descriptors.
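The sizing procedure above can be sketched as a small function. plan_umem() and struct umem_plan are names invented for illustration; consdef stands in for XSK_RING_CONS__DEFAULT_NUM_DESCS (2048 in the libxdp headers I have seen, but treat that as an assumption), and n is assumed to be at least 1.

```c
#include <stdint.h>

struct umem_plan {
    uint64_t umem_bytes;  /* UMEM_max: total bytes to allocate */
    uint64_t descs;       /* descriptors per RX ring */
    uint64_t wasted;      /* bytes of UMEM not backing any chunk */
};

/* n >= 1 threads; consdef a power of two; hugepage in bytes. */
struct umem_plan plan_umem(uint64_t n, uint64_t consdef,
                           uint64_t chunksize, uint64_t hugepage)
{
    struct umem_plan p;
    uint64_t umem_min = n * consdef * chunksize;

    /* UMEM_max: round UMEM_min up to a whole number of huge pages. */
    p.umem_bytes = (umem_min + hugepage - 1) / hugepage * hugepage;

    /* Double the per-ring descriptor count for as long as it still fits. */
    p.descs = consdef;
    while (n * (p.descs << 1) * chunksize <= p.umem_bytes)
        p.descs <<= 1;

    p.wasted = p.umem_bytes - n * p.descs * chunksize;
    return p;
}
```

For three threads with 2KiB chunks on a single 1GiB huge page, this settles on 131072 descriptors per ring and leaves 256MiB unused; with 2MiB huge pages the same configuration rounds to 12MiB with no waste at all.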

Fill ring descriptors are currently eight bytes, while RX ring descriptors are sixteen. On a 64-byte cacheline, it thus might make sense to fetch packets four at a time per thread, and perhaps prefetch subsequent packet data. Of course, this wouldn't be useful if your packet data is already resident in cache thanks to e.g. DDIO.

Driver details

"Bounce on bind" is bad: these devices go down for a few seconds when attaching an XDP program. This is all based on personal experimentation and reading mailing lists.

| Driver | Zero-copy | Multibuf | Native/Offloaded | XDP_REDIRECT | Bounce on bind |
|---|---|---|---|---|---|
| atlantic (Aquantia) | N | N | N | N | N |
| i40e (Intel) | Y | Y | ? | Y | ? |
| ice (Intel) | ? | ? | ? | ? | ? |
| ixgbe (Intel) | Y | Y | Native | Y | Y |
| cxgb3 (Chelsio) | N | N | N | Y | N |
| mvneta (Marvell) | ? | Y | ? | Y | ? |
| mvpp2 (Marvell) | ? | ? | ? | Y | ? |
| mlx4 (Mellanox) | Y | ? | Native | ? | ? |
| mlx5 (Mellanox) | ? | ? | Native | Y | ? |
| netsec (Socionext) | ? | ? | ? | Y | ? |
| nfp (Netronome) | ? | ? | Offloaded | ? | ? |
| tuntap | ? | ? | ? | Y | ? |
| veth | ? | ? | ? | Y | ? |
| virtio_net | ? | ? | ? | Y | ? |

Aquantia is now Marvell. Mellanox is now NVIDIA.

See Also

- eBPF

- DPDK

- Fast UNIX Servers

- iouring

- 100GbE

- "eXtended Disquisitions Pertaining to eXpress Data Paths", a DANKBLOG post from 2023-04-20

External links

- Bringing TSO/GRO and Jumbo Frames to XDP, Linux Plumbers 2021

- Adding AF_XDP Zero-Copy Support to Drivers, Netdevconf 2020

- Accelerating networking with AF_XDP, LWN 2018-04-09

- Monitoring and tuning the Linux Networking Stack: Receiving Data, PackageCloud 2016-06-21