XDP: Difference between revisions

Revision as of 19:57, 4 December 2022

In the beginning, there were applications slinging streams through the packetizing Honeywell DDP-516 IMPs, and it was good. Then multiple applications needed the network, and needed it in different ways. Then the networks needed walls of fire, and traffic which was shaped. Some called for the Labeling of Multiple Protocols, and others called for timestamps, and still others wanted to SNAT and DNAT and also to masquerade. And yea, IP was fwadm'd, and then chained, and then tabled, and soon arps and bridges too were tabled. And behold now tables of "nf" and "x". And Van Jacobson looked once more upon the land, and frowned, and shed a single tear which became a Channel. And then every ten years or so, we celebrate this by rediscovering Van Jacobson channels under a new name, these days complete with logo.

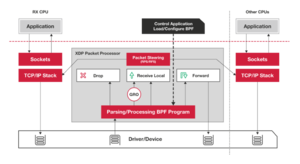

Most recently, they were rediscovered under the name DPDK, but the masters of the Linux kernel eschewed it, and instead rediscovered them under the name XDP, the eXpress Data Path, expressed using eBPF programs. XDP was added to Linux 4.8 and has been heavily developed since then, and is often seen together with io_uring, especially with the new AF_XDP family of sockets.

XDP is used to bypass large chunks of the Linux networking stack (including all allocations, particularly alloc_skb()), especially when dropping packets or shuffling them between NICs.

Using XDP

An XDP program is an eBPF program invoked for each received packet which runs either:

- early in the kernel receive path, with no driver support (Generic Mode)

- from the driver context following DMA sync, without any kernel networking stack touches (requires driver support) (Native Mode)

- on the NIC itself, without touching the CPU (requires hardware+driver support) (Offloaded Mode)

Offloaded mode can theoretically beat native mode, which will usually beat generic mode. XDP programs are specific to a NIC, and most easily attached using xdp-loader. Lower-level functionality is available from libxdp, and below that is pure eBPF machinery. Multiple XDP programs can be stacked on a single interface as of Linux 5.6 (multiprog requires BTF type information, created by using LLVM 10+ and enabling debug information). XDP is only applied to the receive path.
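At the lowest level, the three modes are selected with flag bits from <linux/if_link.h>. The following is a minimal sketch of that mapping; the enum and function names are hypothetical, and the resulting flags are the kind of value one would hand to an attach call such as libbpf's bpf_xdp_attach() (xdp-loader and libxdp wrap this for you).

```c
#include <linux/if_link.h> /* XDP_FLAGS_SKB_MODE, XDP_FLAGS_DRV_MODE, XDP_FLAGS_HW_MODE */

/* Hypothetical names for the three modes described above. */
enum attach_mode { MODE_GENERIC, MODE_NATIVE, MODE_OFFLOADED };

/* Translate a mode into the kernel's XDP attach flag for that mode. */
unsigned int attach_mode_to_flags(enum attach_mode mode)
{
    switch (mode) {
    case MODE_GENERIC:   return XDP_FLAGS_SKB_MODE; /* no driver support needed */
    case MODE_NATIVE:    return XDP_FLAGS_DRV_MODE; /* requires driver support */
    case MODE_OFFLOADED: return XDP_FLAGS_HW_MODE;  /* requires NIC + driver support */
    }
    return 0; /* no mode bit set: the kernel chooses */
}
```

If no mode bit is set, the kernel picks a mode itself, so asking for a mode explicitly is mostly useful when you want the attach to fail rather than silently fall back.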

An XDP program can modify the packet data and perform limited resizes of the packet. It returns an action code:

- XDP_PASS: the packet continues through the network stack

- XDP_DROP: the packet is dropped

- XDP_ABORTED: indicates an error (the packet is dropped)

- XDP_TX: emit the packet back out the interface on which it was received

- XDP_REDIRECT: send the packet to another NIC or an AF_XDP socket

Think of XDP_ABORTED as returning -1 from the eBPF program; it's meant to indicate an internal error. Theoretically, the packet could continue through the stack (as it would have if no XDP program had been running), but XDP uses "fail-close" semantics. Edits to the packet are carried through all XDP paths.
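A minimal sketch of an XDP program returning these action codes follows. The policy (drop IPv4 UDP, pass everything else) and the names classify and xdp_drop_udp are made up for illustration. SEC() normally comes from libbpf's <bpf/bpf_helpers.h>; it is defined locally here as an assumption, so that the file needs only kernel UAPI headers.

```c
#include <linux/bpf.h>      /* enum xdp_action, struct xdp_md */
#include <linux/if_ether.h> /* struct ethhdr, ETH_P_IP */
#include <linux/in.h>       /* IPPROTO_UDP */
#include <linux/ip.h>       /* struct iphdr */
#include <asm/byteorder.h>  /* __constant_htons */

#ifndef SEC /* assumption: stand-in for the macro from <bpf/bpf_helpers.h> */
#define SEC(name) __attribute__((section(name), used))
#endif

/* Hypothetical policy: drop IPv4 UDP, pass everything else. */
static inline __attribute__((always_inline))
int classify(void *data, void *data_end)
{
    struct ethhdr *eth = data;
    struct iphdr *ip;

    /* Every access must be bounds-checked against data_end,
     * or the eBPF verifier will reject the program. */
    if ((void *)(eth + 1) > data_end)
        return XDP_PASS;
    if (eth->h_proto != __constant_htons(ETH_P_IP))
        return XDP_PASS;

    ip = (struct iphdr *)(eth + 1);
    if ((void *)(ip + 1) > data_end)
        return XDP_PASS;

    return ip->protocol == IPPROTO_UDP ? XDP_DROP : XDP_PASS;
}

SEC("xdp")
int xdp_drop_udp(struct xdp_md *ctx)
{
    /* ctx carries the packet bounds as 32-bit fields. */
    void *data = (void *)(long)ctx->data;
    void *data_end = (void *)(long)ctx->data_end;

    return classify(data, data_end);
}

char _license[] SEC("license") = "GPL";
```

Built with clang for the bpf target, an object like this is what xdp-loader attaches to a NIC. Packets the sketch cannot parse are passed rather than dropped, which is a choice of this example, not a rule.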

xdp-loader status will list NICs and show any attached XDP programs. ethtool -S shows XDP statistics.

Note that XDP runs prior to the packet socket copies which drive tcpdump, and thus packets dropped or redirected in XDP will not show up there. It's better to use xdpdump when interacting with XDP programs; this also shows the XDP decision made for each packet.

Modifying packet data

Modifications should generally take place through the functions exposed in bpf-helpers(7). Updating data in a TCP payload, for instance, requires recomputing a TCP checksum, which can be done most effectively through these methods. bpf_redirect() and bpf_redirect_map() are used to set the destination of an XDP_REDIRECT operation. bpf_redirect_peer() and bpf_clone_redirect() (which redirects a clone of a packet while retaining the original) are available only to tc (sk_buff-based) programs, not to XDP programs.
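To see why a payload edit does not require re-summing the whole segment, here is a sketch in plain C of the incremental update from RFC 1624 (HC' = ~(~HC + ~m + m')). It is not the kernel's implementation, and the function names are hypothetical; inside an XDP program one would lean on a helper such as bpf_csum_diff() instead.

```c
#include <stddef.h>
#include <stdint.h>

/* Fold a 32-bit accumulator into 16 bits with end-around carry. */
static uint16_t csum_fold(uint32_t sum)
{
    while (sum >> 16)
        sum = (sum & 0xffff) + (sum >> 16);
    return (uint16_t)sum;
}

/* Full Internet checksum over a buffer of big-endian 16-bit words. */
uint16_t csum_full(const uint8_t *buf, size_t len)
{
    uint32_t sum = 0;
    size_t i;

    for (i = 0; i + 1 < len; i += 2)
        sum += ((uint32_t)buf[i] << 8) | buf[i + 1];
    if (len & 1) /* odd trailing byte is padded with zero */
        sum += (uint32_t)buf[len - 1] << 8;
    return (uint16_t)~csum_fold(sum);
}

/* Incremental update after one 16-bit word changes from old_word to new_word. */
uint16_t csum_update16(uint16_t old_csum, uint16_t old_word, uint16_t new_word)
{
    uint32_t sum = (uint16_t)~old_csum;

    sum += (uint16_t)~old_word;
    sum += new_word;
    return (uint16_t)~csum_fold(sum);
}
```

The incremental form touches only the changed word and the checksum field, which is what makes in-place rewrites cheap at XDP speeds.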

Caveats

There is significant (on the order of hundreds of bytes) overhead on each packet. Furthermore, until recently (5.18), or without driver support, XDP cannot use packets larger than an architectural page. On amd64 with 4KB pages, without the new "multibuf" support, only about 3520 bytes are available within a frame. This is smaller than many jumbo MTUs, and furthermore precludes techniques like LRO. With multibuf, packets of arbitrary size can pass through XDP.
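The 3520-byte figure is consistent with subtracting the XDP headroom and the tail space reserved for skb_shared_info from a 4KB page. The sketch below spells out that arithmetic. The 256-byte headroom matches XDP_PACKET_HEADROOM; the 320-byte tailroom is an assumption about the aligned size of struct skb_shared_info on amd64, and it varies with kernel version and configuration.

```c
#include <stddef.h>

enum {
    PAGE_BYTES     = 4096, /* amd64 base page */
    HEADROOM_BYTES = 256,  /* XDP_PACKET_HEADROOM */
    SHINFO_BYTES   = 320,  /* assumed aligned sizeof(struct skb_shared_info) */
};

/* Largest frame that fits in one page once per-packet overhead is reserved. */
size_t xdp_max_frame(size_t page, size_t headroom, size_t tailroom)
{
    size_t overhead = headroom + tailroom;

    return page > overhead ? page - overhead : 0;
}
```

Calling xdp_max_frame(PAGE_BYTES, HEADROOM_BYTES, SHINFO_BYTES) under these assumptions gives 4096 - 256 - 320 = 3520.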

AF_XDP

bpf_redirect_map() can be used in conjunction with the eBPF map BPF_MAP_TYPE_XSKMAP to send traffic to userspace AF_XDP sockets (XSKs). With driver support, this is a zero-copy operation. Each XSK, when created, should be inserted into XSKMAP.

XSKs come in two varieties: XDP_SKB and XDP_DRV. XDP_DRV corresponds to Native Mode/Offload Mode XDP, and is faster than the generic XDP_SKB, but requires driver support.

Each XSK has an RX descriptor ring, a TX descriptor ring, or both, along with a data area (the UMEM) into which all descriptors point. The RX and TX rings are registered with the kernel using the XDP_RX_RING and XDP_TX_RING sockopts, and the UMEM's Fill and Completion rings using XDP_UMEM_FILL_RING and XDP_UMEM_COMPLETION_RING, all via setsockopt(2). UMEM is a virtually contiguous area divided into equally-sized chunks, and registered with the XDP_UMEM_REG sockopt. bind(2) then associates the XSK with a particular device and queueid, at which point traffic begins to flow.
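The setup sequence can be sketched with the raw socket API as below. This is an outline under assumptions, not a complete XSK: the sizes and function names are hypothetical, the caller is assumed to supply a page-aligned UMEM area of NUM_CHUNKS * CHUNK_SIZE bytes, and the mmap(2) of the rings (using offsets from the XDP_MMAP_OFFSETS sockopt) and the population of the Fill ring are left out. In practice most people let libxdp do all of this.

```c
#include <linux/if_xdp.h> /* struct xdp_umem_reg, struct sockaddr_xdp, XDP_* sockopts */
#include <stdint.h>
#include <string.h>
#include <sys/socket.h>
#include <unistd.h>

#ifndef AF_XDP /* fallbacks for older libc headers */
#define AF_XDP 44
#endif
#ifndef SOL_XDP
#define SOL_XDP 283
#endif

/* Hypothetical sizes; ring sizes must be powers of two. */
enum { NUM_CHUNKS = 4096, CHUNK_SIZE = 2048, RING_ENTRIES = 2048 };

/* Describe a UMEM of equally-sized chunks starting at area. */
struct xdp_umem_reg umem_describe(void *area, uint32_t num_chunks, uint32_t chunk_size)
{
    struct xdp_umem_reg reg;

    memset(&reg, 0, sizeof(reg));
    reg.addr = (uint64_t)(uintptr_t)area;
    reg.len = (uint64_t)num_chunks * chunk_size;
    reg.chunk_size = chunk_size;
    return reg;
}

/* Create an XSK, register UMEM and rings, and bind. Returns fd or -1. */
int xsk_open(unsigned int ifindex, unsigned int queue_id, void *umem_area)
{
    struct xdp_umem_reg reg = umem_describe(umem_area, NUM_CHUNKS, CHUNK_SIZE);
    struct sockaddr_xdp sxdp;
    int entries = RING_ENTRIES;
    int fd = socket(AF_XDP, SOCK_RAW, 0);

    if (fd < 0)
        return -1;

    memset(&sxdp, 0, sizeof(sxdp));
    sxdp.sxdp_family = AF_XDP;
    sxdp.sxdp_ifindex = ifindex;
    sxdp.sxdp_queue_id = queue_id;

    if (setsockopt(fd, SOL_XDP, XDP_UMEM_REG, &reg, sizeof(reg)) ||
        setsockopt(fd, SOL_XDP, XDP_UMEM_FILL_RING, &entries, sizeof(entries)) ||
        setsockopt(fd, SOL_XDP, XDP_UMEM_COMPLETION_RING, &entries, sizeof(entries)) ||
        setsockopt(fd, SOL_XDP, XDP_RX_RING, &entries, sizeof(entries)) ||
        setsockopt(fd, SOL_XDP, XDP_TX_RING, &entries, sizeof(entries)) ||
        bind(fd, (struct sockaddr *)&sxdp, sizeof(sxdp))) {
        close(fd);
        return -1;
    }
    return fd;
}
```

Creating the socket requires CAP_NET_RAW, so expect -1 when running this unprivileged.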

The Fill and Completion rings are associated with the UMEM, which might be shared among processes (and multiple XSKs). Each XSK will need its own RX and TX rings, but the Fill and Completion rings are shared along with the UMEM.
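As a sketch of the sharing arrangement: a second XSK registers its own RX and/or TX rings but skips XDP_UMEM_REG, and instead binds with the XDP_SHARED_UMEM flag, naming the socket that owns the UMEM. The function name below is hypothetical, and it only fills in the address one would pass to bind(2).

```c
#include <linux/if_xdp.h> /* struct sockaddr_xdp, XDP_SHARED_UMEM */
#include <string.h>
#include <sys/socket.h>

#ifndef AF_XDP /* fallback for older libc headers */
#define AF_XDP 44
#endif

/* Build the bind(2) address for an XSK that reuses another XSK's UMEM. */
struct sockaddr_xdp xsk_shared_addr(unsigned int ifindex, unsigned int queue_id,
                                    int umem_owner_fd)
{
    struct sockaddr_xdp sxdp;

    memset(&sxdp, 0, sizeof(sxdp));
    sxdp.sxdp_family = AF_XDP;
    sxdp.sxdp_flags = XDP_SHARED_UMEM;               /* reuse an existing UMEM */
    sxdp.sxdp_ifindex = ifindex;
    sxdp.sxdp_queue_id = queue_id;
    sxdp.sxdp_shared_umem_fd = (__u32)umem_owner_fd; /* XSK that did XDP_UMEM_REG */
    return sxdp;
}
```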

Driver support

| Driver | Zero-copy | Multibuf | xdpoffload |
|---|---|---|---|
| mvneta | ? | Y | ? |
| ixgbe | Y | ? | ? |
| mlx5 | ? | ? | ? |
| i40e | Y | ? | ? |
| ice | ? | ? | ? |
| nfp | ? | ? | Y |

See Also

External links

- Bringing TSO/GRO and Jumbo Frames to XDP, Linux Plumbers 2021

- Adding AF_XDP Zero-Copy Support to Drivers, Netdevconf 2020