Check out my first novel, midnight's simulacra!

Libtorque: Difference between revisions

update links |

|||

| (139 intermediate revisions by the same user not shown) | |||

| Line 1: | Line 1: | ||

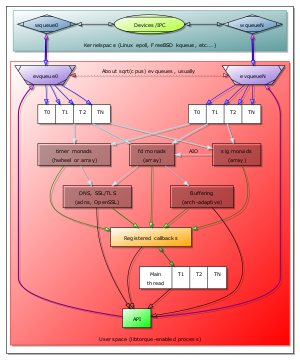

[[File:Libtorque.svg|thumb|right|alt="architecture model"|Architecture of a libtorque-enabled process.]] | |||

'''My [[Fast UNIX Servers]] page is a useful companion to this article.''' | |||

<tt>libtorque</tt> is a multithreaded event library for UNIX designed to take full advantage of the manycore, heterogenous, [[NUMA]] future. The [[media:Libtorque-proposal.pdf|project proposal]] suggests motivation for <tt>libtorque</tt>: I believe it necessary to take scheduling and memory-placement decisions into account to most optimally handle events, especially on manycore machines and ''especially'' to handle unexpected traffic sets (denial of service attacks, oversubscribed pipes, mixed-latency connections, etc). Along the way, I intend to shake up the UNIX programming idiom; my hope is that <tt>libtorque</tt> leads to more network programmers thinking about the complex issues involved, but simplifies rather than aggravates a task already fraught with difficulty. | |||

Other open source event libraries include [http://www.monkey.org/~provos/libevent/ libevent], [http://software.schmorp.de/pkg/libev.html libev] and [http://liboop.ofb.net liboop]. | |||

==Resources== | ==Resources== | ||

* [[git]] hosting from [http://github.com GitHub]: | * "[[Media:hotpar2010.pdf|libtorque: Portable Multithreaded Continuations for Scalable Event-Driven Programs]]" | ||

* [[git]] hosting from [http://github.com GitHub] (dankamongmen/libtorque [http://github.com/dankamongmen/libtorque project page]) | |||

** Lots of good data in the [http://github.com/dankamongmen/libtorque/blob/master/README README!] | ** Lots of good data in the [http://github.com/dankamongmen/libtorque/blob/master/README README!] | ||

** <tt>git clone</tt> from git://github.com/dankamongmen/libtorque.git | ** <tt>git clone</tt> from git://github.com/dankamongmen/libtorque.git | ||

* [http://www.bugzilla.org/ bugzilla], hosted here on http:// | * [http://www.bugzilla.org/ bugzilla], hosted here on https://nick-black.com/bugzilla/. See [[#Current_Issues|below]] for a snapshot bug report. | ||

=== | * A [[Media:Libtorque-presentation.pdf|presentation]] I did for GT's [http://comparch.gatech.edu/arch_whisky/fall09.html Arch-Whiskey] seminar, 2009-11-13 | ||

* | * Fo sho there's a [http://groups.google.com/group/libtorque-devel mailing list]! | ||

===Documentation from the source tree=== | |||

The [http://github.com/dankamongmen/libtorque/tree/master/doc/ doc/ subdirectory] of a libtorque checkout contains several pieces of documentation, most of it quite technical. Some highlights include: | |||

* <tt>[http://github.com/dankamongmen/libtorque/raw/master/doc/mteventqueues mteventqueues]</tt> - "Multithreaded Event Queues". Details of [[epoll]] and [[kqueue]] semantics, especially with regard to locking. Correctness and performance implications thereof. | |||

* <tt>[http://github.com/dankamongmen/libtorque/raw/master/doc/termination termination]</tt> - "Termination". Interaction with POSIX cancellation and signals. API and semantics for initiating and blocking on a libtorque context's shutdown. Design justification and details. | |||

<!-- ===Recent commits (via [http://github.com/feeds/dankamongmen/commits/libtorque/master Atom])=== | |||

<rss>http://github.com/feeds/dankamongmen/commits/libtorque/master</rss> --> | |||

==Design/Functionality== | ==Design/Functionality== | ||

| Line 15: | Line 26: | ||

*'''Q:''' Why aren't you letting the OS manage scheduling, thus following the advice of just about everyone (assuming no real-time requirements)? | *'''Q:''' Why aren't you letting the OS manage scheduling, thus following the advice of just about everyone (assuming no real-time requirements)? | ||

*'''A:''' Scalable event notification on UNIX is based around stateful polling (even in signal-driven POSIX asynchronous I/O, we want to use stateful polling on signals both for performance and event unification). The distribution of these events, at heart, drives scheduling. Since we must influence this distribution, we must make scheduling decisons. | *'''A:''' Scalable event notification on UNIX is based around stateful polling (even in signal-driven POSIX asynchronous I/O, we want to use stateful polling on signals both for performance and event unification). The distribution of these events, at heart, drives scheduling. Since we must influence this distribution, we must make scheduling decisons. | ||

*'''Q:''' Why not just launch a thread per configured processing element, and use an existing event-handling library? | |||

*'''A:''' These threads will have to communicate with one another to spread around event sources. If reshuffling is desired, they must communicate further; if not, the system can be forced into dynamic behavior. The information most pertinent to this shuffling is at the event-handling level; we thus make the decisions there. | |||

*'''Q''' How (aside from being open source) is this any better than Win32's [http://msdn.microsoft.com/en-us/library/aa365198%28VS.85%29.aspx I/O Completion Ports] or Solaris 10's [http://developers.sun.com/solaris/articles/event_completion.html Event Completion Framework]? | |||

*'''A''' These two systems are laudable, and provide much of libtorque's functionality (on different operating systems, of course). An algorithm expressible in IOCP can be expressed in libtorque's CPS; the opposite might not always be true (that is, I suspect IOCP ⊆ libtorque, but that the converse is not strictly true '''FIXME detail'''). More importantly, libtorque performs architecture- and topology-aware event handling within its CPUSet, which a user of IOCP must provide. | |||

** This having been said, they ought be much easier to build atop than the linux and FreeBSD primitives. | |||

===Event Unification=== | |||

Libtorque handles a wide variety of event sources, including: | |||

* any <tt>poll(2)</tt>able file descriptor | |||

* regular and realtime signals (via [[signalfd|signalfd()s]] and <tt>EVFILT_SIGNAL</tt>) | |||

* optionally-periodic absolute and interval timers (via [[timerfd|timerfd()s]] and <tt>EVFILT_TIMER</tt>) | |||

* filesystem changes (via <tt>inotify(9)</tt> and <tt>EVFILT_NODE</tt>) | |||

* network changes (via <tt>netlink(7)</tt> and <tt>EVFILT_MII</tt>) | |||

* condition variables | |||

===System discovery=== | ===System discovery=== | ||

* '''Exciting news!''' Thanks to the efforts of Dr. David Bader and Dr. Richard Vuduc of Georgia Tech's Computational Science and Engineering program, I now have access to a Niagara 2 machine. Expect SPARC and OpenSolaris support soon! | |||

** '''THANK YOU!''', Professors Bader and Vuduc! | |||

* Full support for [[Cpuid|CPUID]] as most recently defined by Intel and AMD (more advanced, as of 2009-10-31, than [http://www.codemonkey.org.uk/projects/x86info/ x86info]) | * Full support for [[Cpuid|CPUID]] as most recently defined by Intel and AMD (more advanced, as of 2009-10-31, than [http://www.codemonkey.org.uk/projects/x86info/ x86info]) | ||

* Full support for [[Linux APIs|Linux]] and [[FreeBSD APIs|FreeBSD's]] native [[cpuset]] libraries, and SGI's <tt>[[cpuset|libcpuset]]</tt> and <tt>[http://oss.sgi.com/projects/libnuma libNUMA]</tt> | * Full support for [[Linux APIs|Linux]] and [[FreeBSD APIs|FreeBSD's]] native [[cpuset]] libraries, and SGI's <tt>[[cpuset|libcpuset]]</tt> and <tt>[http://oss.sgi.com/projects/libnuma libNUMA]</tt> | ||

| Line 28: | Line 55: | ||

** Connected processor groups and relative distance information | ** Connected processor groups and relative distance information | ||

** Number of pages and bank geometry | ** Number of pages and bank geometry | ||

** More: OS page prefetching | ** More: OS page NUMA/prefetching policies, error-recovery info | ||

* Win32's [http://msdn.microsoft.com/en-us/library/ms684847%28VS.85%29.aspx Process and Thread Functions] are pretty well-designed | |||

====archdetect==== | |||

* Utility built/packaged with libtorque: | |||

<pre>Testing archdetect: env LD_LIBRARY_PATH=.out/lib .out/bin/archdetect | |||

Package 0: (8 threads total) | |||

Core 0: 0 4 (2x processor type 1) | |||

Core 1: 1 5 (2x processor type 1) | |||

Core 2: 2 6 (2x processor type 1) | |||

Core 3: 3 7 (2x processor type 1) | |||

( 1x) Memory node 1 of 1: | |||

8,131,244KB (7.754 GB) total in 4KB and 4MB pages | |||

( 8x) Processing unit type 1 of 2: x86 | |||

Extensions: MMX SSE SSE2 SSE3 SSSE3 SSE4.1 SSE4.2 SSE4a | |||

Family: 0x006 (6) Model: 0x1e (30) Stepping: 5 (OEM) | |||

Brand name: Intel(R) Core(TM) i7 CPU Q 720 @ 1.60GHz | |||

2 threads per processing core, 8 cores (4 logical) per package | |||

Cache 1 of 4: 32KB total, 64B line, 4-assoc, 2-shared (L1 code) | |||

Cache 2 of 4: 32KB total, 64B line, 8-assoc, 2-shared (L1 data) | |||

Cache 3 of 4: 256KB total, 64B line, 8-assoc, 2-shared (L2 unified) | |||

Cache 4 of 4: 6MB total, 64B line, 12-assoc, 16-shared (L3 unified) | |||

TLB 1 of 5: 4KB pages, 64-entry, 4-assoc, 2-shared (L1 code) | |||

TLB 2 of 5: 4MB pages, 32-entry, 4-assoc, 2-shared (L1 data) | |||

TLB 3 of 5: 4KB pages, 7-entry, 7-assoc, 2-shared (L2 code) | |||

TLB 4 of 5: 4KB pages, 64-entry, 4-assoc, 2-shared (L2 data) | |||

TLB 5 of 5: 4KB pages, 512-entry, 4-assoc, 2-shared (L2 data) | |||

( 1x) Processing unit type 2 of 2: CUDA | |||

CUDA compute capabilities: 1.2 | |||

Brand name: GeForce GTS 360M | |||

1 thread per processing core, 96 cores per package</pre> | |||

===Scheduling=== | ===Scheduling=== | ||

System architecture, allocations, continuation targets, event pattern and of course processing itself play a role in our scheduling. We first construct the ''scheduling universe'', a scheduling model using the hardware detected during library initialization. Taken from [http://github.com/dankamongmen/libtorque/blob/master/src/libtorque/topology.h src/libtorque/topology.h] (commit a03fc592d03ccc9c20b016faaa1362b88bc07417):<pre>// We are not considering distributed systems in this model. | System architecture, allocations, continuation targets, event pattern and of course processing itself play a role in our scheduling. We first construct the ''scheduling universe'', a scheduling model using the hardware detected during library initialization. Taken from [http://github.com/dankamongmen/libtorque/blob/master/src/libtorque/topology.h src/libtorque/topology.h] (commit a03fc592d03ccc9c20b016faaa1362b88bc07417):<pre>// We are not considering distributed systems in this model. | ||

| Line 60: | Line 117: | ||

// - shared data is allocated which fits entirely within the group's cache, or | // - shared data is allocated which fits entirely within the group's cache, or | ||

// - we need to.</pre> | // - we need to.</pre> | ||

* If the memory being used is distinct, space things out as far as possible: | |||

** Use different NUMA nodes, to spread memory bandwidth | |||

** Use different packages, to engage different caches | |||

** Use different cores, to engage different execution units | |||

** Use different threads, to engage different architectural state | |||

* If the memory being used is shared, compress as much as possible: | |||

** Use the same core, to maximize per-core cache utilization and minimize trips off-core | |||

** Use the same package, to maximize per-package cache utilization and minimize trips off-die | |||

** Use the same NUMA node, to maximize per-node memory utilization and minimize trips off-node | |||

* Whether to use the same core is a function of ''proportion/distribution of shared accesses''... | |||

** Shared writes are even more important to keep close, to minimize true coherence overhead | |||

** Unshared writes are even more important to space out, to minimize false coherence overhead | |||

** Buffers (both RX and TX) are written to once, then read from N (N>0) times -- worst case for coherence protocols | |||

* ...and SMT-affability of the instruction load (in other words, ''degree of fine-grained parallelism'') | |||

** Network servers are not matrix kernels! Assume crappy IPC (syscalls, branching, I/O), and exploit sharing purely in terms of memory | |||

* Let's ''color'' connections | |||

====Some techniques from networking theory==== | |||

* We have an event queue backed by ''n'' elements, but we can artificially limit the amount filled in | |||

** Use [[TCP|TCP's]] slow-start algorithm to find balanced stasis points | |||

* Stochastic Fair Queueing is trying to solve a semi-similar problem | |||

** Use its concepts of [http://en.wikipedia.org/wiki/Pareto_efficiency Pareto frontiers] for distribution | |||

====Fail gracefully and usefully==== | |||

A denial-of-service attack that doesn't degrade the service isn't one to worry about, so we can postpone DoS detection/response to the point of maximum service at some quality level. Once we start failing, either due to: | |||

* Resource acquisition failure, or | |||

* Event backup (really a special case of resource acquisition failure) | |||

we might start prefailing some connections. How ought we choose connections to prefail? Are we reinventing the OOM killer? | |||

* Fail on resource fail. Fairly nondeterministic, and if a connection can hold arbitrary resources at length, vulnerable to DoS (slowloris, sockstress) | |||

* Kill state-hoggy connections. Non-trivial to identify, tends to kill important connections. | |||

* Better to abort early than late, so as not to waste work, but... | |||

* Want to keep traffic flowing at all times | |||

There is a thin line between attack and underprovision. | |||

====Edge-triggered event handling==== | |||

* libtorque deals only in edge-triggered event notification, thus evading an entire level of otherwise necessary locking. '''FIXME EXPLAIN''' | |||

* Multiple threads share a kernel event queue, but write results to distinct portions (|largest cache line|-spaced). Only one goes into the event dispatch system call at a time. If there's a good spread of events, they'll each get a portion. If not, we're either way ahead of current traffic, or there's some lengthy events. | |||

** We can steal work in the case of lengthy events. | |||

** We must implement an event cache anyway in such cases (lengthy unboundable receipts) | |||

===Allocation=== | |||

'''FIXME EXPLAIN''' | |||

* Networks imply buffers. Big, unshared, easily-colored buffers. | |||

** As much as possible, parameterize buffer allocation and move it into libtorque. | |||

*** We get slabs/recycling for free, and only need lock when we enlarge slabs (kernel also locks, so try to match) | |||

** Expose allocators for explicitly shared/unshared data | |||

** When done based on connection type, libtorque can wait for data's arrival to allocate | |||

*** Excellent way to introduce L5+ [[Van Jacobson Channels|van Jacobson channels]] (a [[Hackery#Zetetic|zetetic]]-like engine, perhaps driven by [[Hackery#Parvenu|parvenu]]) | |||

===Scalability=== | |||

Commonly-used algorithms (anything in the event-handling hotpath) oughtn't depend on the number of processors, depth of the scheduling topology, or number of memories -- that is, O(1) as these grow. Performance per processor ought exhibit linear growth from top to bottom, save perhaps superlinear growth when a scheduling level shares caches (event queues also must not be O(n) on cpus; sharing is a critical feature). | |||

===Robustness=== | ===Robustness=== | ||

| Line 65: | Line 170: | ||

* Determine: how are Linux/FreeBSD processes notified/affected when their processors or memories fail? | * Determine: how are Linux/FreeBSD processes notified/affected when their processors or memories fail? | ||

* Determine: how to recover/redetect/redistribute? | * Determine: how to recover/redetect/redistribute? | ||

===Compatibility wrappers=== | |||

* '''All of this is very hand-wavy thus far...''' | |||

====Unthreaded, blocking I/O==== | |||

* Anything done purely via shim can be done without even relinking the binary, by launching it with an LD_PRELOAD wrapper | |||

* For an unthreaded, blocking program, we can shim <tt>read()</tt>, <tt>lseek()</tt> and <tt>write()</tt> -- the blocking I/O operations -- and parallelize them *but* | |||

** we'd have to play some COW game (difficult), or consider the input gifted (breaks semantics) | |||

*** analogous to the issues faced by zero-copy networking implementations | |||

** breaks any ordering-dependent control (analogous to memory reordering), although most of this is already broken | |||

*** this can be automatically and easily done, although the analysis for safety cannot be automated AFAICT | |||

* Instead of making blocking I/O asynchronous, parallelize the I/O | |||

** Only works for very large I/O, not a bunch of small I/O | |||

====Threaded, blocking I/O==== | |||

* Common (if terrible) paradigm -- bundle state into per-thread state, run main loop per-thread | |||

* "Threads are for people who don't understand state machines." -- Alan Cox | |||

** Issue here isn't scheduling flexibility, but overhead. Not much we can do. Don't write code like this. | |||

====Unthreaded, nonblocking I/O==== | |||

* Hand-rolled or libev/etc-based <tt>poll(2)</tt> or <tt>epoll(2)</tt>-like control, one thread | |||

** If hand-rolled: already using a continuations model? Yes? Good. If not, rewrite in continuations. | |||

* Can't help out much with the locking side of things in callback code, but... | |||

* Once callbacks are MT-safe, we're just like libev et al. | |||

** We ought be able to do a trivial mapping from libev/libevent/liboop to our primitives | |||

*** (if not, that's likely a bad reflection on our design!) | |||

====Threaded, nonblocking I/O==== | |||

* We assume multiple threads, each wrapping a libev context | |||

** if you wrote your own multithreaded async continuations library, why do you need libtorque? | |||

* Good! You're MT-safe already, or at least won't become any less MT-safe. | |||

* Strip your shallow, pedestrian, static threading away. See "[[libtorque#Threaded,_nonblocking_I/O|Threaded, nonblocking I/O]]" above. | |||

==References/Prior Art== | ==References/Prior Art== | ||

* | ===Papers/Presentations=== | ||

* | * Mucci. "[http://icl.cs.utk.edu/~mucci/latest/pubs/Notur2009-new.pdf Linux Multicore Performance Analysis and Optimization in a Nutshell]", NOTUR 2009. | ||

* | * Sibai. "[http://journal.info.unlp.edu.ar/journal/journal24/papers/JCST-Oct08-3.pdf Nearest Neighbor Affinity Scheduling in Heterogeneous Multicore Architectures]", JCST 2008. | ||

* Veal, Foong. "[http://www.cse.wustl.edu/ANCS/2007/slides/Bryan%20Veal%20ANCS%20Presentation.pdf Performance Scalability of a Multicore Web Server]", ANCS 2007. | |||

* Eker. "[http://www.ece.ncsu.edu/news/theses/etd-03302007-161856 Characterization of Context Switch Effects on L2 Cache]", 2007. | |||

* Chen et al. "Scheduling Threads for Constructive Cache Sharing on CMPs", SPAA 2007. | |||

* Milfled, Goto, Purkayastha, Guiang, Schulz. Effective Use of Multi-Core Commodity Systems in HPC, LCI 2007. | |||

* Williams, Vuduc, Oliker, Shalf, Yelick, Demmel. "[http://bebop.cs.berkeley.edu/pubs/williams2007-multicore-spmv-slides.pdf Tuning Sparse Matrix Vector Multiplication for Multicore SMPs]", 2007. | |||

* Tsafrir. "[http://www.citeulike.org/user/dankamongmen/article/2052076 The Context-Switch Overhead Inflicted by Hardware Interrupts (and the enigma of do-nothing loops)]", 2007. | |||

* Yelick. "[http://www.sdsc.edu/pmac/workshops/geo2006/pubs/Yelick.pdf Performance and Productivity Opportunities using Global Address Space Programming Models]", 2006. | |||

* Mohamood. "[http://smartech.gatech.edu/handle/1853/10560 DLL-Conscious Instruction Fetch Optimization for SMT Processors]", 2006. | |||

* Elmeleegy, Chanda, Cox, Zwaenepeol. "[http://www.cs.rice.edu/~kdiaa/laio/ Lazy Asynchronous I/O for Event-Driven Servers]", USENIX 2004. | |||

* Sibai's "[http://journal.info.unlp.edu.ar/journal/journal24/papers/JCST-Oct08-3.pdf Nearest neighbor affinity scheduling in heterogeneous multicore architectures]", 2003. | |||

* Suh, Devadas, Rudolph. "[http://portal.acm.org/citation.cfm?id=377792.377797 Analytical cache models with applications to cache partitioning]", Supercomputing 2001. | |||

* Anderson, Bershad, Lazowska, Levy. "[http://www.cs.washington.edu/homes/tom/pubs/sched_act.pdf Scheduler Activations: Effective Kernel Support for the User-Level Management of Parallelism]", 1992. | |||

===Projects=== | |||

* Matt Welsh's [http://www.eecs.harvard.edu/~mdw/proj/seda/ SEDA] (Staged Event-Driven Architecture) | |||

* [http://eventlet.net/ Eventlet] is a python implementation of asynchronous triggers | |||

* The [[Radovic-Hagersten lock]] team has a page on [http://www.it.uu.se/research/group/uart/projects/nucasynch NUMA locking] | |||

* Emery Berger's [http://www.hoard.org/ Hoard] and other manycore-capable [[allocators|allocators]] (libumem aka magazined slab, Google's [http://goog-perftools.sourceforge.net/doc/tcmalloc.html ctmalloc], etc). | * Emery Berger's [http://www.hoard.org/ Hoard] and other manycore-capable [[allocators|allocators]] (libumem aka magazined slab, Google's [http://goog-perftools.sourceforge.net/doc/tcmalloc.html ctmalloc], etc). | ||

* | * The [http://netstreamline.org/general/pipesfs.php PipesFS project] at Vrije Universiteit Amsterdam | ||

* A lot of discursive theorizing/ruminating is captured on my [[Fast UNIX Servers]] page | * A lot of discursive theorizing/ruminating is captured on my [[Fast UNIX Servers]] page | ||

====libev==== | |||

* Works on (refcounted) loop contexts, with an implicit "default" loop. | |||

* <tt>ev_signal_init</tt> operates on an <tt>int</tt> signal rather than a <tt>sigset_t</tt> | |||

** it doesn't compile under -fstrict-aliasing (use -Werror and -Wstrict-aliasing) :/ | |||

==Miscellanea== | |||

===History=== | |||

<tt>libtorque</tt>, under that name, began as a project for Professor [http://vuduc.org/ Rich Vuduc's] Fall 2009 [[High Performance Parallel Computing|CSE6230]]. It really began gestating in three parts: | |||

* Work on intrusion prevention at [http://www.reflexsystems.com/ Reflex] got me hooked on automata-based networking activations and [[Fast UNIX Servers|fast networking]] | |||

* Work on [[ICAP]] servers and [http://en.wikipedia.org/wiki/Reverse_proxy reverse proxies] at [http://www.mcafee.com/ McAfee], especially <tt>snare</tt>, got me thinking about networking APIs | |||

* Professor [http://www.cc.gatech.edu/directory/faculty/faculty/school-of-computer-science/directory/thomas-conte Tom Conte's] Spring 2009 "CS 8803 MCA: Multicore and Manycore Architecture" lit up the parallelism fire | |||

===Milestones=== | |||

* 2009-10-22: [http://github.com/dankamongmen/libtorque/commit/e7429294beb9dc581a7cdab2371d2ddca3169047 First commit] (e7429294beb9dc581a7cdab2371d2ddca3169047) | |||

* 2009-11-12: CSE 6230 checkpoint (see [https://nick-black.com/tabpower/cse6230proposal.pdf proposal]) | |||

* 2009-11-13: CS 8001-CAS [http://comparch.gatech.edu/arch_whisky/fall09.html Arch-Whiskey] presentation | |||

* 2009-12-10: CSE 6230 [https://nick-black.com/tabpower/cse6230finalpaper.pdf final report] (again, see proposal) | |||

* 2010-01-24: [http://www.usenix.org/events/hotpar10/cfp/ HotPar 2010] submission deadline | |||

== | ===Logo=== | ||

<pre>888 ,e, 888 d8 "...tear the roof off the sucka..." | <pre>888 ,e, 888 d8 "...tear the roof off the sucka..." | ||

888 " 888 88e d88 e88 88e 888,8, e88 888 8888 8888 ,e e, | 888 " 888 88e d88 e88 88e 888,8, e88 888 8888 8888 ,e e, | ||

| Line 82: | Line 253: | ||

_____________________________________________ 888 _________________ | _____________________________________________ 888 _________________ | ||

continuation-based unix i/o for manycore numa\888/© nick black 2009</pre> | continuation-based unix i/o for manycore numa\888/© nick black 2009</pre> | ||

==Corrections== | |||

* Marc Lehmann of the [http://software.schmorp.de/pkg/libev.html libev] project pointed out some errors regarding my characterization of that library. Thanks, Marc! | |||

[[Category: Projects]] | |||

Latest revision as of 00:00, 2 October 2017

My Fast UNIX Servers page is a useful companion to this article.

libtorque is a multithreaded event library for UNIX designed to take full advantage of the manycore, heterogenous, NUMA future. The project proposal suggests motivation for libtorque: I believe it necessary to take scheduling and memory-placement decisions into account to most optimally handle events, especially on manycore machines and especially to handle unexpected traffic sets (denial of service attacks, oversubscribed pipes, mixed-latency connections, etc). Along the way, I intend to shake up the UNIX programming idiom; my hope is that libtorque leads to more network programmers thinking about the complex issues involved, but simplifies rather than aggravates a task already fraught with difficulty.

Other open source event libraries include libevent, libev and liboop.

Resources

- "libtorque: Portable Multithreaded Continuations for Scalable Event-Driven Programs"

- git hosting from GitHub (dankamongmen/libtorque project page)

- Lots of good data in the README!

- git clone from git://github.com/dankamongmen/libtorque.git

- bugzilla, hosted here on https://nick-black.com/bugzilla/. See below for a snapshot bug report.

- A presentation I did for GT's Arch-Whiskey seminar, 2009-11-13

- Fo sho there's a mailing list!

Documentation from the source tree

The doc/ subdirectory of a libtorque checkout contains several pieces of documentation, most of it quite technical. Some highlights include:

- mteventqueues - "Multithreaded Event Queues". Details of epoll and kqueue semantics, especially with regard to locking. Correctness and performance implications thereof.

- termination - "Termination". Interaction with POSIX cancellation and signals. API and semantics for initiating and blocking on a libtorque context's shutdown. Design justification and details.

Design/Functionality

libtorque exposes an affinity-managing, continuations-based, architecture-aware, multithreaded scheduler and various utility functions, including an architecture-, OS-, and thread-aware allocator with strong scheduler feedbacks. It can analyze arbitrary object code via libdl and libelf, discover where instructions live, and allocate around those areas. It can take dynamically balanced or (static) asymmetric interrupt loads into account. By making service decisions and allocations based on whole-system effects, libtorque provides low latency and high throughput under even pedantically asymmetric, irregular loads. By carefully distributing edge-triggered event descriptors among various processors' notification sets, highly scalable use is made of advanced low-level primitives such as kqueue and epoll. Through the use of lazy asynchronous I/O, each (expensive!) core is kept busy doing real work.

- Q: Why aren't you letting the OS manage scheduling, thus following the advice of just about everyone (assuming no real-time requirements)?

- A: Scalable event notification on UNIX is based around stateful polling (even in signal-driven POSIX asynchronous I/O, we want to use stateful polling on signals both for performance and event unification). The distribution of these events, at heart, drives scheduling. Since we must influence this distribution, we must make scheduling decisons.

- Q: Why not just launch a thread per configured processing element, and use an existing event-handling library?

- A: These threads will have to communicate with one another to spread around event sources. If reshuffling is desired, they must communicate further; if not, the system can be forced into dynamic behavior. The information most pertinent to this shuffling is at the event-handling level; we thus make the decisions there.

- Q How (aside from being open source) is this any better than Win32's I/O Completion Ports or Solaris 10's Event Completion Framework?

- A These two systems are laudable, and provide much of libtorque's functionality (on different operating systems, of course). An algorithm expressible in IOCP can be expressed in libtorque's CPS; the opposite might not always be true (that is, I suspect IOCP ⊆ libtorque, but that the converse is not strictly true FIXME detail). More importantly, libtorque performs architecture- and topology-aware event handling within its CPUSet, which a user of IOCP must provide.

- This having been said, they ought be much easier to build atop than the linux and FreeBSD primitives.

Event Unification

Libtorque handles a wide variety of event sources, including:

- any poll(2)able file descriptor

- regular and realtime signals (via signalfd()s and EVFILT_SIGNAL)

- optionally-periodic absolute and interval timers (via timerfd()s and EVFILT_TIMER)

- filesystem changes (via inotify(9) and EVFILT_NODE)

- network changes (via netlink(7) and EVFILT_MII)

- condition variables

System discovery

- Exciting news! Thanks to the efforts of Dr. David Bader and Dr. Richard Vuduc of Georgia Tech's Computational Science and Engineering program, I now have access to a Niagara 2 machine. Expect SPARC and OpenSolaris support soon!

- THANK YOU!, Professors Bader and Vuduc!

- Full support for CPUID as most recently defined by Intel and AMD (more advanced, as of 2009-10-31, than x86info)

- Full support for Linux and FreeBSD's native cpuset libraries, and SGI's libcpuset and libNUMA

- Discovers and makes available, for each processor type:

- ISA, ISA-specific capabilities, and number of concurrent threads supported (degrees of SMT)

- Line count, associativity, line length, geometry, and type of all caches

- Entry count, associativity, page size and type of all TLBs

- Inclusiveness relationships among cache and TLB levels

- Interconnection topology, APIC ID's, and how caches are shared among processors

- More: properties of hardware prefetching, ability to support non-temporal loads (MOVNTDQA, PREFETCHNTA, etc)

- Discovers and makes available, for each memory node type:

- Connected processor groups and relative distance information

- Number of pages and bank geometry

- More: OS page NUMA/prefetching policies, error-recovery info

- Win32's Process and Thread Functions are pretty well-designed

archdetect

- Utility built/packaged with libtorque:

Testing archdetect: env LD_LIBRARY_PATH=.out/lib .out/bin/archdetect Package 0: (8 threads total) Core 0: 0 4 (2x processor type 1) Core 1: 1 5 (2x processor type 1) Core 2: 2 6 (2x processor type 1) Core 3: 3 7 (2x processor type 1) ( 1x) Memory node 1 of 1: 8,131,244KB (7.754 GB) total in 4KB and 4MB pages ( 8x) Processing unit type 1 of 2: x86 Extensions: MMX SSE SSE2 SSE3 SSSE3 SSE4.1 SSE4.2 SSE4a Family: 0x006 (6) Model: 0x1e (30) Stepping: 5 (OEM) Brand name: Intel(R) Core(TM) i7 CPU Q 720 @ 1.60GHz 2 threads per processing core, 8 cores (4 logical) per package Cache 1 of 4: 32KB total, 64B line, 4-assoc, 2-shared (L1 code) Cache 2 of 4: 32KB total, 64B line, 8-assoc, 2-shared (L1 data) Cache 3 of 4: 256KB total, 64B line, 8-assoc, 2-shared (L2 unified) Cache 4 of 4: 6MB total, 64B line, 12-assoc, 16-shared (L3 unified) TLB 1 of 5: 4KB pages, 64-entry, 4-assoc, 2-shared (L1 code) TLB 2 of 5: 4MB pages, 32-entry, 4-assoc, 2-shared (L1 data) TLB 3 of 5: 4KB pages, 7-entry, 7-assoc, 2-shared (L2 code) TLB 4 of 5: 4KB pages, 64-entry, 4-assoc, 2-shared (L2 data) TLB 5 of 5: 4KB pages, 512-entry, 4-assoc, 2-shared (L2 data) ( 1x) Processing unit type 2 of 2: CUDA CUDA compute capabilities: 1.2 Brand name: GeForce GTS 360M 1 thread per processing core, 96 cores per package

Scheduling

System architecture, allocations, continuation targets, event pattern and of course processing itself play a role in our scheduling. We first construct the scheduling universe, a scheduling model using the hardware detected during library initialization. Taken from src/libtorque/topology.h (commit a03fc592d03ccc9c20b016faaa1362b88bc07417):

// We are not considering distributed systems in this model.

//

// The scheduling universe is defined by an ordered set of $N > 1$ levels

// $L_0..L_n$. A scheduling group is a structural isomorphism, a counter $C$ of

// instances, and the $C$ affinity masks of corresponding processing elements.

// A level contains $N > 0$ scheduling groups and a description of hardware

// unique to that level. A unique surjection from classes of usable hardware to

// levels (no hardware class is described at two levels, and all usable

// hardware is described by the scheduling universe) is defined by via our

// discovery algorithm.

//

// The reality compactifies things a bit, but in theory:

// Level L_0 consists of scheduling groups over threads and processor types.

// Each successive level $L_{n+1}, n >= 0$ extends $L_n$'s elements. For each

// element $E \in L_n$, extend $E$ through those paths reachable at equal cost

// from all elements in $E$.

//

// Examples:

// A uniprocessor, no matter its memories, is a single topology level.

// 2 unicore, unithread SMP processors with distinct L1 and shared L2 are two

// topology levels: 2x{proc + L1} and {L2 + memory}. Split the L2, and they

// remain two topology levels: 2x{proc + L1 + L2} and {memory}. Combine both

// caches for a single level: {2proc + L1 + L2 + memory}. Sum over a level's

// processors' threads for a thread count.

//

// Note that SMT does not come into play in shaping the topology hierarchy,

// only in calculating the number of threads in a topological group. We only

// schedule to SMT processors if

// - shared data is allocated which fits entirely within the group's cache, or

// - we need to.

- If the memory being used is distinct, space things out as far as possible:

- Use different NUMA nodes, to spread memory bandwidth

- Use different packages, to engage different caches

- Use different cores, to engage different execution units

- Use different threads, to engage different architectural state

- If the memory being used is shared, compress as much as possible:

- Use the same core, to maximize per-core cache utilization and minimize trips off-core

- Use the same package, to maximize per-package cache utilization and minimize trips off-die

- Use the same NUMA node, to maximize per-node memory utilization and minimize trips off-node

- Whether to use the same core is a function of proportion/distribution of shared accesses...

- Shared writes are even more important to keep close, to minimize true coherence overhead

- Unshared writes are even more important to space out, to minimize false coherence overhead

- Buffers (both RX and TX) are written to once, then read from N (N>0) times -- worst case for coherence protocols

- ...and SMT-affability of the instruction load (in other words, degree of fine-grained parallelism)

- Network servers are not matrix kernels! Assume crappy IPC (syscalls, branching, I/O), and exploit sharing purely in terms of memory

- Let's color connections

Some techniques from networking theory

- We have an event queue backed by n elements, but we can artificially limit the amount filled in

- Use TCP's slow-start algorithm to find balanced stasis points

- Stochastic Fair Queueing is trying to solve a semi-similar problem

- Use its concepts of Pareto frontiers for distribution

Fail gracefully and usefully

A denial-of-service attack that doesn't degrade the service isn't one to worry about, so we can postpone DoS detection/response to the point of maximum service at some quality level. Once we start failing, either due to:

- Resource acquisition failure, or

- Event backup (really a special case of resource acquisition failure)

we might start prefailing some connections. How ought we choose connections to prefail? Are we reinventing the OOM killer?

- Fail on resource fail. Fairly nondeterministic, and if a connection can hold arbitrary resources at length, vulnerable to DoS (slowloris, sockstress)

- Kill state-hoggy connections. Non-trivial to identify, tends to kill important connections.

- Better to abort early than late, so as not to waste work, but...

- Want to keep traffic flowing at all times

There is a thin line between attack and underprovision.

Edge-triggered event handling

- libtorque deals only in edge-triggered event notification, thus evading an entire level of otherwise necessary locking. FIXME EXPLAIN

- Multiple threads share a kernel event queue, but write results to distinct portions (|largest cache line|-spaced). Only one goes into the event dispatch system call at a time. If there's a good spread of events, they'll each get a portion. If not, we're either way ahead of current traffic, or there's some lengthy events.

- We can steal work in the case of lengthy events.

- We must implement an event cache anyway in such cases (lengthy unboundable receipts)

Allocation

FIXME EXPLAIN

- Networks imply buffers. Big, unshared, easily-colored buffers.

- As much as possible, parameterize buffer allocation and move it into libtorque.

- We get slabs/recycling for free, and only need lock when we enlarge slabs (kernel also locks, so try to match)

- Expose allocators for explicitly shared/unshared data

- When done based on connection type, libtorque can wait for data's arrival to allocate

- Excellent way to introduce L5+ van Jacobson channels (a zetetic-like engine, perhaps driven by parvenu)

- As much as possible, parameterize buffer allocation and move it into libtorque.

Scalability

Commonly-used algorithms (anything in the event-handling hotpath) oughtn't depend on the number of processors, depth of the scheduling topology, or number of memories -- that is, O(1) as these grow. Performance per processor ought exhibit linear growth from top to bottom, save perhaps superlinear growth when a scheduling level shares caches (event queues also must not be O(n) on cpus; sharing is a critical feature).

Robustness

As the number of dies grow, so do interconnects. We're extending Moore's Law, but what about Weibull's distribution? As the number of cores rises, so too does the likelyhood that a processor or DIMM failure will be seen across the lifetime of a machine (remember, a failure distribution exponential as a function of time suggests only ~37% likelyhood of any given component reaching MTBF). Hot-pluggable processors and memories are also likely to be encountered, especially in cloud/virtual environments. For that matter, sysadmins can reconfigure cpusets and NUMA on the fly, or even migrate our process between sets. We ought design with all this in mind.

- Determine: how are Linux/FreeBSD processes notified/affected when their processors or memories fail?

- Determine: how to recover/redetect/redistribute?

Compatibility wrappers

- All of this is very hand-wavy thus far...

Unthreaded, blocking I/O

- Anything done purely via shim can be done without even relinking the binary, by launching it with an LD_PRELOAD wrapper

- For an unthreaded, blocking program, we can shim read(), lseek() and write() -- the blocking I/O operations -- and parallelize them *but*

- we'd have to play some COW game (difficult), or consider the input gifted (breaks semantics)

- analogous to the issues faced by zero-copy networking implementations

- breaks any ordering-dependent control (analogous to memory reordering), although most of this is already broken

- this can be automatically and easily done, although the analysis for safety cannot be automated AFAICT

- we'd have to play some COW game (difficult), or consider the input gifted (breaks semantics)

- Instead of making blocking I/O asynchronous, parallelize the I/O

- Only works for very large I/O, not a bunch of small I/O

Threaded, blocking I/O

- Common (if terrible) paradigm -- bundle state into per-thread state, run main loop per-thread

- "Threads are for people who don't understand state machines." -- Alan Cox

- Issue here isn't scheduling flexibility, but overhead. Not much we can do. Don't write code like this.

Unthreaded, nonblocking I/O

- Hand-rolled or libev/etc-based poll(2) or epoll(2)-like control, one thread

- If hand-rolled: already using a continuations model? Yes? Good. If not, rewrite in continuations.

- Can't help out much with the locking side of things in callback code, but...

- Once callbacks are MT-safe, we're just like libev et al.

- We ought be able to do a trivial mapping from libev/libevent/liboop to our primitives

- (if not, that's likely a bad reflection on our design!)

- We ought be able to do a trivial mapping from libev/libevent/liboop to our primitives

Threaded, nonblocking I/O

- We assume multiple threads, each wrapping a libev context

- if you wrote your own multithreaded async continuations library, why do you need libtorque?

- Good! You're MT-safe already, or at least won't become any less MT-safe.

- Strip your shallow, pedestrian, static threading away. See "Threaded, nonblocking I/O" above.

References/Prior Art

Papers/Presentations

- Mucci. "Linux Multicore Performance Analysis and Optimization in a Nutshell", NOTUR 2009.

- Sibai. "Nearest Neighbor Affinity Scheduling in Heterogeneous Multicore Architectures", JCST 2008.

- Veal, Foong. "Performance Scalability of a Multicore Web Server", ANCS 2007.

- Eker. "Characterization of Context Switch Effects on L2 Cache", 2007.

- Chen et al. "Scheduling Threads for Constructive Cache Sharing on CMPs", SPAA 2007.

- Milfled, Goto, Purkayastha, Guiang, Schulz. Effective Use of Multi-Core Commodity Systems in HPC, LCI 2007.

- Williams, Vuduc, Oliker, Shalf, Yelick, Demmel. "Tuning Sparse Matrix Vector Multiplication for Multicore SMPs", 2007.

- Tsafrir. "The Context-Switch Overhead Inflicted by Hardware Interrupts (and the enigma of do-nothing loops)", 2007.

- Yelick. "Performance and Productivity Opportunities using Global Address Space Programming Models", 2006.

- Mohamood. "DLL-Conscious Instruction Fetch Optimization for SMT Processors", 2006.

- Elmeleegy, Chanda, Cox, Zwaenepeol. "Lazy Asynchronous I/O for Event-Driven Servers", USENIX 2004.

- Sibai's "Nearest neighbor affinity scheduling in heterogeneous multicore architectures", 2003.

- Suh, Devadas, Rudolph. "Analytical cache models with applications to cache partitioning", Supercomputing 2001.

- Anderson, Bershad, Lazowska, Levy. "Scheduler Activations: Effective Kernel Support for the User-Level Management of Parallelism", 1992.

Projects

- Matt Welsh's SEDA (Staged Event-Driven Architecture)

- Eventlet is a python implementation of asynchronous triggers

- The Radovic-Hagersten lock team has a page on NUMA locking

- Emery Berger's Hoard and other manycore-capable allocators (libumem aka magazined slab, Google's ctmalloc, etc).

- The PipesFS project at Vrije Universiteit Amsterdam

- A lot of discursive theorizing/ruminating is captured on my Fast UNIX Servers page

libev

- Works on (refcounted) loop contexts, with an implicit "default" loop.

- ev_signal_init operates on an int signal rather than a sigset_t

- it doesn't compile under -fstrict-aliasing (use -Werror and -Wstrict-aliasing) :/

Miscellanea

History

libtorque, under that name, began as a project for Professor Rich Vuduc's Fall 2009 CSE6230. It really began gestating in three parts:

- Work on intrusion prevention at Reflex got me hooked on automata-based networking activations and fast networking

- Work on ICAP servers and reverse proxies at McAfee, especially snare, got me thinking about networking APIs

- Professor Tom Conte's Spring 2009 "CS 8803 MCA: Multicore and Manycore Architecture" lit up the parallelism fire

Milestones

- 2009-10-22: First commit (e7429294beb9dc581a7cdab2371d2ddca3169047)

- 2009-11-12: CSE 6230 checkpoint (see proposal)

- 2009-11-13: CS 8001-CAS Arch-Whiskey presentation

- 2009-12-10: CSE 6230 final report (again, see proposal)

- 2010-01-24: HotPar 2010 submission deadline

Logo

888 ,e, 888 d8 "...tear the roof off the sucka..." 888 " 888 88e d88 e88 88e 888,8, e88 888 8888 8888 ,e e, 888 888 888 888b d88888 d888 888b 888 " d888 888 8888 8888 d88 88b 888 888 888 888P 888 Y888 888P 888 Y888 888 Y888 888P 888 , 888 888 888 88" 888 "88 88" 888 "88 888 "88 88" "YeeP" _____________________________________________ 888 _________________ continuation-based unix i/o for manycore numa\888/© nick black 2009

Corrections

- Marc Lehmann of the libev project pointed out some errors regarding my characterization of that library. Thanks, Marc!